Ever tried generating the "same person" twice with AI?

If so, you're not alone.

A D2C founder who happens to be a close friend asked me last week: "Can you make me 7 product photos for Instagram? Different backgrounds, same vibe."

Easy, right?

I've been doing this AI stuff for months.

Took me 4 hours. Not because I'm slow.

Because AI has severe memory loss.

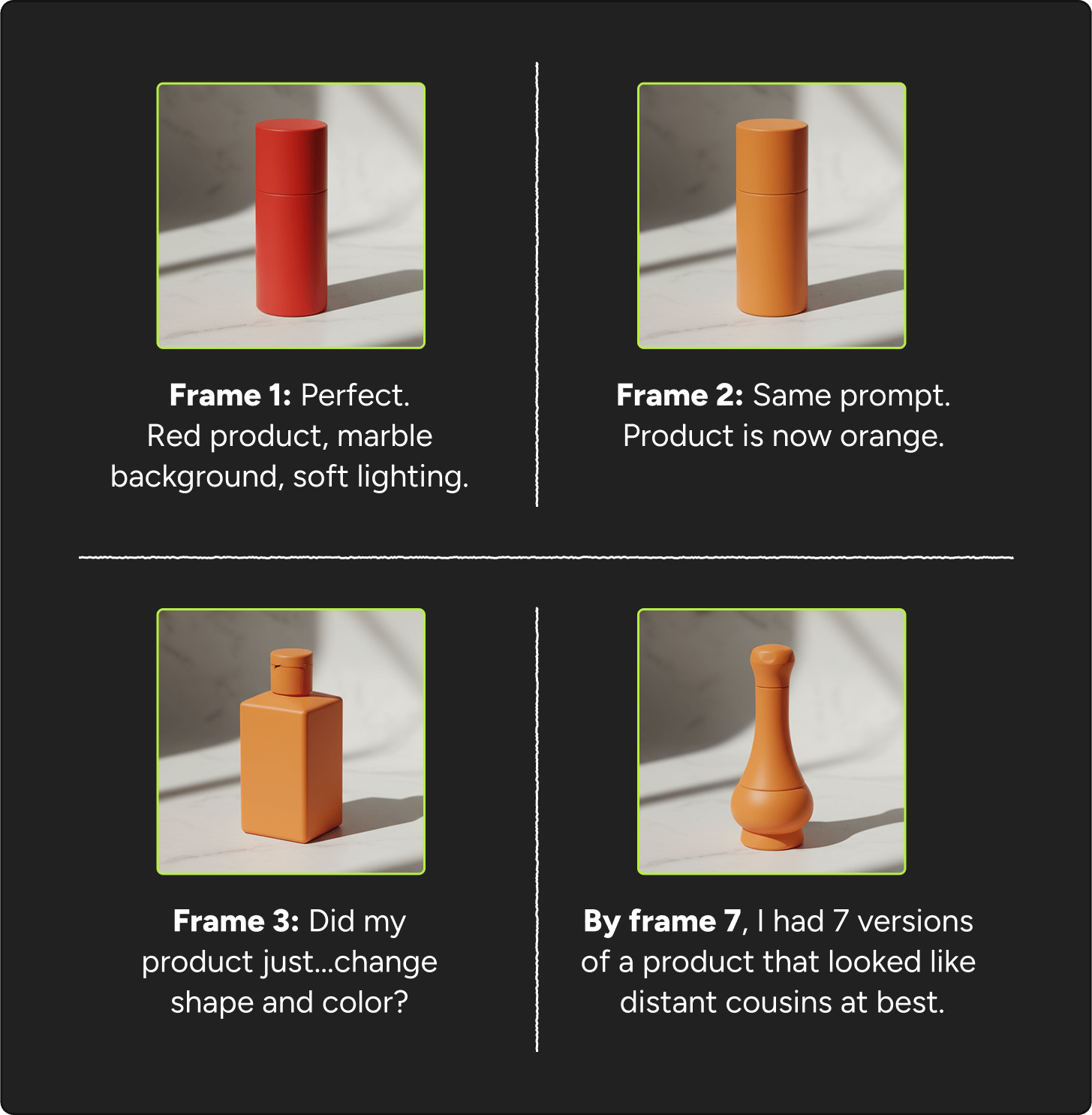

I see this everywhere:

Content creators trying to build character-based series: the character changes race between episodes.

Brands wanting consistent product shots: every photo looks like a different product line.

Animators building storyboards: their hero morphs into a different person every scene.

AI makes beautiful images now.

The problem is memory. These tools forget what they created 30 seconds ago.

And unfortunately… we've normalized this. We just accept that making a carousel means generating 50 images and hoping 7 of them kinda-sorta match.

Then last week, my Twitter feed exploded.

HiggsfieldAI launched something called Popcorn. People were posting 7-frame sequences where the character ACTUALLY looked the same throughout.

Not "close enough if you squint."

Actually the same.

Same face. Same clothes. Different scenes.

The comments were full of "finally" and "this is what we needed" and "better than Nano Banana."

I've seen these claims before. Every week there's a new "game-changer" that turns out to be the same thing with better marketing.

But the examples kept coming. And they looked... legit?

So I did what I always do. Spent the weekend testing it. Made 30+ sequences. Tried breaking it. Ran it against Nano Banana and Seedream side by side.

Here's what I found.

But before we dive into the full breakdown, let me catch you up on the AI chaos from this week:

3 TOOLS THAT MADE ME QUESTION EVERYTHING

1. Aside HQ - AI sales coach that whispers in your ear during calls

Live AI that listens to your sales calls, pulls answers from your Slack/docs/CRM, and suggests what to say in real time. You never have to say "let me get back to you" again.

My take: Feels a bit like cheating? Nobody on the call knows you're getting fed answers. Also, if every sales rep starts using this, we'll all just be talking to AI feeding lines to humans. The invisible bot thing is clever for privacy, but also... weird. Probably useful for new hires who don't know the product yet. For experienced reps, this might just be distracting noise.

2. Flask - Video feedback tool for creative teams

Browser-based platform for reviewing videos together. Add time-stamped comments, attach references, tag feedback, filter by topic. Think Notion + Loom for video reviews.

My take: Finally. Every video feedback tool is either too complicated (Frame.io) or too simple (Loom comments). This hits the middle - fast enough for indie creators, organized enough for agencies. Solo-built though, so it might change fast or break. Worth testing on small projects first.

3. Infinite Talk - Turn images into talking videos with perfect lip sync

Upload an image and audio, and get a video with accurate lip sync, head movements, body gestures, and facial expressions. Works for videos of unlimited length.

My take: This one syncs not just lips but full body movement. Under $6 for 2 minutes of video. Great for educational content, marketing videos, or if you need a talking avatar fast. But the uncanny valley is real: it works better for stylized/anime characters than photorealistic humans.

The problem every creator faces

Look, I know I just spent 300 words complaining about AI image consistency.

But the current workflow is insane:

Generate image

Download it

Upload as reference for next image

Cross fingers

Get something 70% similar

Repeat 6 more times

Spend an hour in Photoshop trying to match colors and features

We've been doing this because there was no other option.

We should talk more about this

Here's what the AI image bros won't admit:

Nano Banana and Seedream are INSANE for single shots. Like genuinely stunning images that could be in magazines.

But the second you need 3+ connected scenes? Chaos.

It's like hiring 7 photographers who've never met to shoot your story. Beautiful frames. Zero connection.

And everyone's acting like this is fine. "Just tweak the prompt." "Use img2img." "Try image references."

I've tried everything. It's exhausting.

So when Popcorn launched with claims of "perfect character consistency across 8 frames," I was skeptical but desperate enough to test it properly.

How Popcorn works

Setup: Auto vs Manual mode

Head to Higgsfield Popcorn. You get two modes:

Auto Mode (The "I'm in a hurry" option)

Upload up to 4 reference images

Write ONE main prompt describing the overall story

Select how many frames you want (up to 8)

Popcorn automatically generates different scenes, angles, and situations

It writes individual prompts for each frame for you

Manual Mode (The "I'm the director here" option)

Upload your reference images once

Write specific prompts for each individual scene

Full control over every single frame

Perfect for when you have an exact shot list in mind

My experience testing both

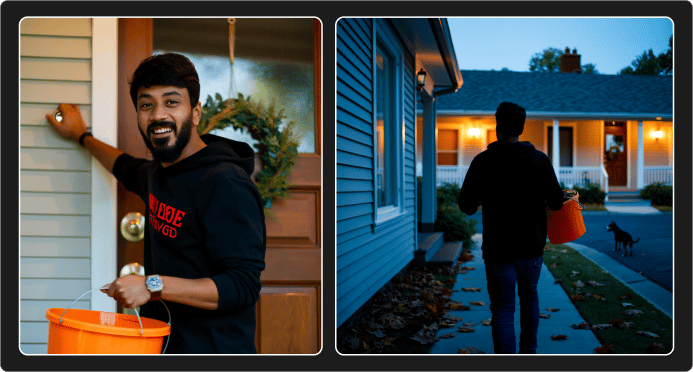

Test 1: Auto Mode with Halloween characters

I uploaded one image: mine 🙂

My simple prompt: "He's going door to door in the neighborhood to collect treats."

Hit generate for 5 frames.

The crazy part: Same character face, same clothes across all frames. The AI automatically created different scenarios while maintaining perfect character consistency.

From here, you can:

Animate any frame (sends it to their image-to-video generator)

Edit individual frames

Upscale the images

Download immediately

Use any frame as a new reference for more iterations

Test 2: Manual Mode with military scenes

I wanted full control, so I switched to Manual Mode.

Uploaded one image of Vicky Kaushal (let's call him Sergeant).

Then I wrote individual prompts for each scene:

"Sergeant stands at attention in front of a military base at dawn, expression serious and focused"

"Close-up shot of Sergeant buckling up tactical gear"

"Sergeant leans over a war room table studying maps"

"Sergeant standing next to a military helicopter"

"Inside the chopper with his squad"

Hit generate.

Result: Every single frame had the same character face, but in completely different settings and poses, exactly as I specified. No re-uploading the reference image. No retyping context. Just one storyboard with perfect continuity.

Test 3: Product photography at scale

Here's where it gets wild for e-commerce brands and content creators.

I uploaded a skincare product image and prompted: "Show this product in different New York settings, UGC style."

What I got in ONE generation:

UGC-style shot of someone holding the product

Product on a newspaper

Product on a car hood

Product on a park bench

Product on the street

Not all frames were perfect (let's be honest), but I generated 5 different product shots in 5 different NYC settings without leaving my chair.

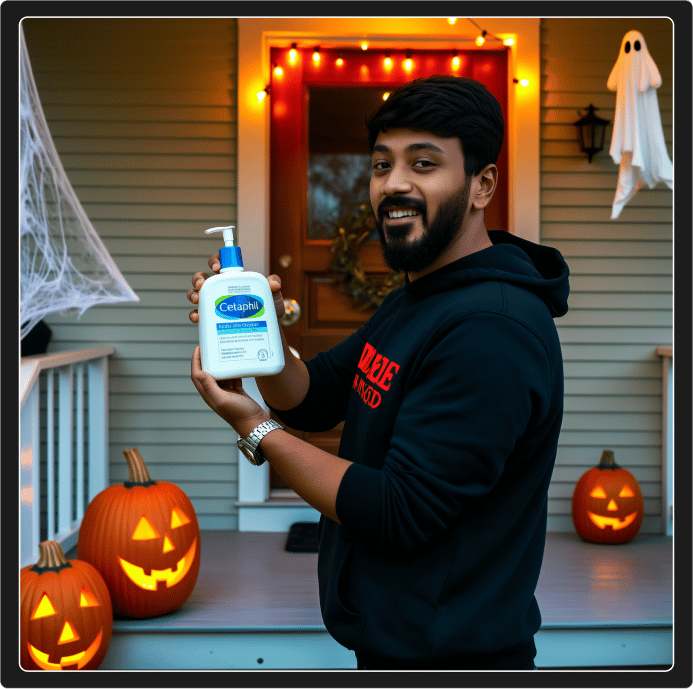

Test 4: Multi-image magic (this is insane)

You can upload up to 4 reference images simultaneously. This is where Popcorn completely separates from the pack.

I uploaded:

Image 1: Skincare product

Image 2: Me

My prompt: "Create hyperrealistic image of the person in image 2 holding the product in image 1 in different poses. Make it UGC content. Change background to Halloween themed house."

Result:

What actually works

The UX is different:

Everything lives in one storyboard interface. No switching tabs. No re-uploading references. You build a sequence, not isolated images.

The consistency is real:

I tested this against Nano Banana and Seedream. Here's what happened:

With Popcorn: 5 frames, same character, same world rules, different scenarios.

With Nano Banana, you can see it for yourself.

With Seedream: Stylistically interesting, but my character gained 30 pounds and changed ethnicity between frames.

The editing flexibility matters:

When one frame isn't quite right, you can edit it without regenerating everything. Other tools make you start over and sacrifice consistency.

I'll be honest: The first few attempts require learning to think in sequences, not single shots. You need to understand that you're directing a mini-movie, not ordering individual images.

Some frames will be duds (that's just AI). But in my tests the hit rate was noticeably higher than with the competitors, because the system maintains context across frames.

The aspect ratio options (3:4, 2:3, 9:16, 1:1) mean you can format frames for feed posts, Instagram Reels, or Shorts without extra work.

The honest comparison

Let me be brutally honest about where each tool belongs:

Nano Banana: Use when you need ONE stunning, photorealistic hero image. Product shots. Marketing visuals. Single powerful frames. It's the best at what it does.

Seedream: Use when you're exploring styles, need speed, and don't care about continuity. Great for inspiration boards and creative exploration.

HiggsfieldAI Popcorn: Use when you're actually storyboarding. Film pre-vis. Animation planning. Brand campaigns. Product catalogs. Anything where frames 1-8 need to exist in the same universe.

The Feature Breakdown

Real use cases and examples

For Brands:

Upload your product once. Generate it in 8 different settings (beach, urban, studio, lifestyle) in one go. Perfect for testing concepts before expensive photoshoots.

For Content Creators:

Build entire video scripts visually. Each frame becomes a shot. Maintain your character across different scenarios without constant re-prompting.

For Animators:

Create pose reference sheets. Test different expressions and actions with the same character. Build animatics that actually flow.

For Ad Agencies:

Generate multiple ad concepts with consistent brand elements. Show clients variations without starting from scratch each time.

My verdict

If you need storyboards for films, branded content, animation, or anything where continuity matters, Popcorn is the first AI tool that actually works.

Just need beautiful single images? Stick with Nano Banana or Seedream. They're exceptional.

But if you need frames that connect, characters that stay consistent, worlds with rules, and editing that doesn't break everything?

Popcorn delivers.

Reply with your biggest storyboarding disaster. Or show me your first Popcorn sequence. Best ones might get featured next week.

Until next time,

Vaibhav 🤝🏻

P.S. You can animate storyboard frames directly into video using their image-to-video tool (it supports multiple models, including Google Veo 3.1). That's a complete pre-production pipeline in one tool.

P.P.S: If you got better consistency with another tool, I actually want to know. Drop the name and I'll test it.

If you've read this far, you might find this interesting

#AD 1

Find your customers on Roku this Black Friday

As with any digital ad campaign, the important thing is to reach streaming audiences who will convert. To that end, Roku’s self-service Ads Manager stands ready with powerful segmentation and targeting options. After all, you know your customers, and we know our streaming audience.

Worried it’s too late to spin up new Black Friday creative? With Roku Ads Manager, you can easily import and augment existing creative assets from your social channels. We also have AI-assisted upscaling, so every ad is primed for CTV.

Once you’ve done this, you can easily set up A/B tests to flight different creative variants and Black Friday offers. If you’re a Shopify brand, you can even run shoppable ads directly on-screen so viewers can purchase with just a click of their Roku remote.

Bonus: we’re gifting you $5K in ad credits when you spend your first $5K on Roku Ads Manager. Just sign up and use code GET5K. Terms apply.

#AD 2

A Framework for Smarter Voice AI Decisions

Deploying Voice AI doesn’t have to rely on guesswork.

This guide introduces the BELL Framework — a structured approach used by enterprises to reduce risk, validate logic, optimize latency, and ensure reliable performance across every call flow.

Learn how a lifecycle approach helps teams deploy faster, improve accuracy, and maintain predictable operations at scale.