How have you found Gemini 3.0's output so far?

Gemini 3 has basically been the talk of the town since launch.

People have been sharing all sorts of insane things they've created using Gemini 3.0.

But while everyone's obsessing over what Gemini can do, they're missing the more important story: how Google built it.

And more importantly, why no one else can.

Gemini's hidden advantage

The launch day had everyone focused on the benchmarks. The 37.5% on Humanity's Last Exam. The #1 spot on LMSYS Arena.

But buried in the model card was the real story.

Gemini 3 Pro was trained entirely on TPUs. Google's custom silicon. Not a single NVIDIA GPU touched this model.

This matters more than any benchmark.

What Google built

Gemini 3 Pro is a sparse mixture-of-experts transformer with capabilities that redefine the scale of LLMs: a context window of up to 1 million tokens and up to 64K tokens of output.

That context window means Gemini can ingest entire code repositories, hours of video, or hundreds of documents in a single prompt. Not summaries. Not chunks. The whole thing.

The 64K output? That's enough to generate a small book in one response.

The key innovation: sparse MoE. Instead of activating all parameters for every token, the model learns to send each piece of input to specialized subnetworks.

Think of it this way: a token comes in, a router decides which of hundreds of experts should handle it, and only those experts activate.

This decouples total model capacity from computation cost. You get the power of a massive model without paying the full computational price for every inference.
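Here's a minimal sketch of what that routing looks like, in plain Python. To be clear, this is a toy: the top-2 selection, the 8 experts, the 4-dimensional token, and the names `route_token` and `moe_layer` are all assumptions for illustration, not anything Google has published about Gemini's router.

```python
import math
import random

def softmax(scores):
    """Turn raw router scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route_token(token, router_weights, top_k=2):
    """Return [(expert_index, gate_weight), ...] for the top_k experts."""
    # One score per expert: dot product of the token with that expert's router row.
    scores = [sum(w * x for w, x in zip(row, token)) for row in router_weights]
    probs = softmax(scores)
    chosen = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Renormalize so the gate weights of the chosen experts sum to 1.
    norm = sum(probs[i] for i in chosen)
    return [(i, probs[i] / norm) for i in chosen]

def moe_layer(token, router_weights, experts, top_k=2):
    """Run only the selected experts and mix their outputs by gate weight."""
    out = [0.0] * len(token)
    for idx, gate in route_token(token, router_weights, top_k):
        expert_out = experts[idx](token)  # the other experts never run
        out = [o + gate * e for o, e in zip(out, expert_out)]
    return out

random.seed(0)
DIM, NUM_EXPERTS = 4, 8  # toy sizes, chosen only for readability
router_weights = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(NUM_EXPERTS)]
# Toy experts: expert i simply scales the token by (i + 1).
experts = [lambda x, s=i + 1: [s * v for v in x] for i in range(NUM_EXPERTS)]

token = [0.5, -1.0, 0.25, 2.0]
selected = route_token(token, router_weights)
output = moe_layer(token, router_weights, experts)
print("experts used:", [i for i, _ in selected], "out of", NUM_EXPERTS)
```

Only 2 of the 8 toy experts do any work for this token. Scale that idea up and you get the capacity-versus-compute split described above.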

This architecture only works at Google's scale because of TPUs.

The $191 million question

Training frontier models costs stupid money.

GPT-4: $78 million.

Gemini Ultra: $191 million.

The next generation with GPT-5 is already pushing past $500 million.

Google trains these models for 20% of what OpenAI pays.

Same compute. Same scale. One-fifth the cost.

With this and the stockpile of cash Google is sitting on, they are playing a different game entirely.

First, the training data pipeline

The model card reveals Google's data processing approach:

Deduplication, robots.txt compliance, safety filtering, quality checks. Standard stuff but executed at massive scale.

The dataset itself: web documents, licensed data, Google product data (with consent), AI-generated synthetic data, and RL data for multi-step reasoning.

Nothing revolutionary here. The infrastructure advantage is what matters.

Now: why sparse MoE only works on TPUs

Gemini 3 Pro uses a sparse mixture-of-experts architecture, but understanding what this actually means reveals why TPUs matter.

The Architecture Breakdown:

In a sparse MoE model, not all parameters activate for each token. The model contains hundreds of "expert" networks, each specialized for different types of computation. A routing mechanism dynamically decides which experts to use.

This decouples total model capacity from computation cost per token.

Traditional dense models activate every parameter for every token. A 100B parameter model uses 100B parameters every time.

Sparse MoE changes the equation. Gemini 3 Pro might have 500B+ total parameters, but only activates 50-100B per token. You get the knowledge capacity of a massive model with the inference cost of a smaller one.

PS: Created using Gemini 3 :)
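To put rough numbers on the dense-versus-sparse comparison, here's a back-of-the-envelope sketch. The 500B total and 50-100B active figures are the speculative ones from the text ("might have"), and the "2 FLOPs per active parameter per token" rule is a common approximation I'm assuming here, not a measured Gemini number.

```python
def active_fraction(total_params, active_params):
    """Share of the model's parameters that actually run for one token."""
    return active_params / total_params

def forward_flops_per_token(active_params):
    """Rough forward-pass cost: ~2 FLOPs (multiply + add) per active parameter."""
    return 2 * active_params

TOTAL = 500e9  # speculative total parameter count from the text

for active in (50e9, 100e9):
    frac = active_fraction(TOTAL, active)
    sparse = forward_flops_per_token(active)
    dense = forward_flops_per_token(TOTAL)
    print(f"{active / 1e9:.0f}B active: {frac:.0%} of parameters, "
          f"{dense / sparse:.0f}x fewer FLOPs per token than a dense 500B model")
```

Under those assumptions, each token touches 10-20% of the model, which is where the "capacity of a massive model, cost of a smaller one" claim comes from.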

A routing nightmare:

MoE models route each token to different experts. Token goes in, router decides which of 256 experts processes it, result comes out.

On GPUs, this creates a communication nightmare. Every routing decision requires moving data between chips. GPU clusters use hierarchical switches - your data hops through multiple network layers. More experts = more hops = more latency.

TPUs use a 3D torus topology. Every chip connects to six neighbors in a closed loop. No switches. No hierarchy. Direct chip-to-chip communication.
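A quick sketch of what "six neighbors in a closed loop" means in practice. The 4x4x4 grid is just an illustrative size I picked, and `torus_neighbors` and `torus_hops` are hypothetical helper names, not part of any TPU software stack.

```python
def torus_neighbors(coord, shape):
    """Six direct neighbors of a chip in a 3D torus (edges wrap around).

    Assumes every dimension has at least 3 chips, so all six are distinct.
    """
    x, y, z = coord
    nx, ny, nz = shape
    return [
        ((x + 1) % nx, y, z), ((x - 1) % nx, y, z),
        (x, (y + 1) % ny, z), (x, (y - 1) % ny, z),
        (x, y, (z + 1) % nz), (x, y, (z - 1) % nz),
    ]

def torus_hops(a, b, shape):
    """Minimum chip-to-chip hops between a and b, using wraparound links."""
    hops = 0
    for p, q, n in zip(a, b, shape):
        d = abs(p - q)
        hops += min(d, n - d)  # go the short way around the ring
    return hops

SHAPE = (4, 4, 4)  # illustrative 64-chip grid
print(torus_neighbors((0, 0, 0), SHAPE))
print(torus_hops((0, 0, 0), (3, 3, 3), SHAPE))  # opposite corner, via wraparound
```

Because the edges wrap, the "far corner" chip is only 3 hops away instead of 9, and no hop goes through a switch.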

The proof: Google's 600-billion-parameter GShard model.

Google trained it on 2,048 TPU cores in 4 days. Communication overhead: 36%. Try that on GPUs - you'd still be waiting for the all-to-all operations to complete.

The latest TPU v7 has 192GB of memory per chip. Enough to keep all expert parameters loaded. No swapping. No fetching from remote memory.

NVIDIA's H100 and H200 GPUs max out at 80GB and 141GB. You're constantly shuffling parameters around.
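Here's the memory math as a rough sketch. I'm assuming a hypothetical 500B-parameter model stored at 2 bytes per parameter (bf16), and counting weights only: no activations, no optimizer state, no KV cache. The function names are mine.

```python
import math

def params_per_chip(hbm_gb, bytes_per_param):
    """How many parameters fit in one chip's memory (weights only)."""
    return hbm_gb * 1e9 / bytes_per_param

def chips_needed(total_params, bytes_per_param, hbm_gb):
    """Minimum number of chips just to hold the weights."""
    weight_bytes = total_params * bytes_per_param
    return math.ceil(weight_bytes / (hbm_gb * 1e9))

TOTAL_PARAMS = 500_000_000_000  # hypothetical model size
BYTES_PER_PARAM = 2             # bf16, an assumption

for hbm_gb in (192, 141, 80):
    fit = params_per_chip(hbm_gb, BYTES_PER_PARAM) / 1e9
    chips = chips_needed(TOTAL_PARAMS, BYTES_PER_PARAM, hbm_gb)
    print(f"{hbm_gb}GB per chip: ~{fit:.0f}B params per chip, {chips} chips minimum")
```

Under these assumptions, the same weights spread across 6 chips at 192GB versus 13 at 80GB. Fewer chips per model means fewer boundaries for routed tokens to cross.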

The compounding advantage

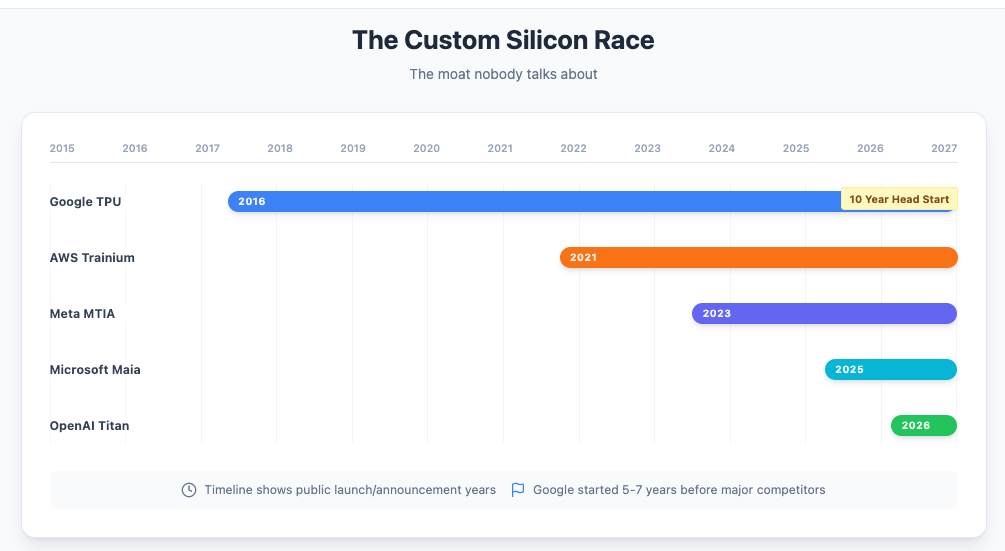

Google didn't stumble into this. They've been building it since 2016.

2020:

TPU v4: 200W per chip, 2.8 TOPS/Watt

NVIDIA A100: 400W per chip, 0.78 TOPS/Watt

That's 3.6x better efficiency.
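If you want to check that figure yourself, the arithmetic is a one-liner. The inputs are just the TOPS/Watt numbers quoted above. I haven't independently verified them, and `efficiency_ratio` is a name made up for this sketch.

```python
def efficiency_ratio(tops_per_watt_a, tops_per_watt_b):
    """How many times more work per watt chip A does than chip B."""
    return tops_per_watt_a / tops_per_watt_b

TPU_V4 = 2.8        # TOPS/Watt, as quoted in the text
NVIDIA_A100 = 0.78  # TOPS/Watt, as quoted in the text

ratio = efficiency_ratio(TPU_V4, NVIDIA_A100)
print(f"{ratio:.1f}x")
```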

Today:

TPU v6 Trillium: 67% more energy-efficient than v5e

TPU v7 Ironwood: Another 2x performance per watt

Compound those gains over 7 years: 30x better power efficiency than first-gen Cloud TPUs.

That's the real benchmark.

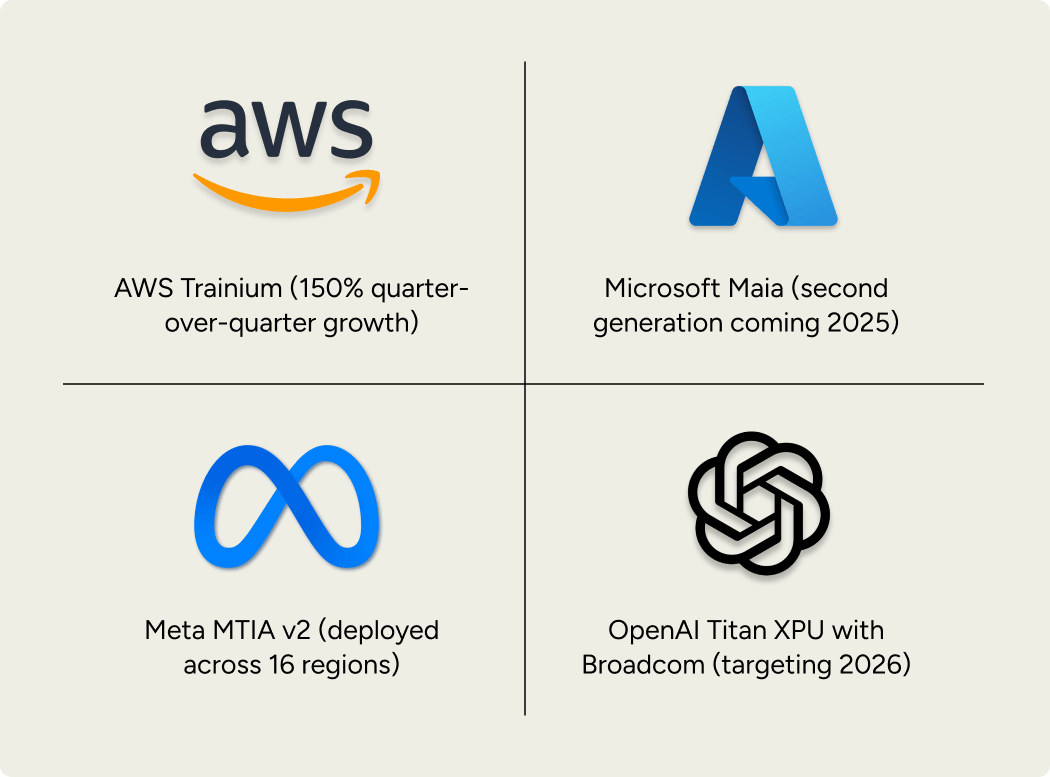

The moat

Every major player sees this. They're all building custom silicon.

But they're 5-10 years behind Google.

OpenAI's trapped. They need NVIDIA GPUs today. Can't wait until 2026 for Titan. Meanwhile, they're paying the "NVIDIA tax" - 80% gross margins on every chip.

Microsoft locked OpenAI into $250 billion of Azure compute. But Azure runs on... NVIDIA GPUs.

The Stargate project allocates $40 billion for NVIDIA chips. Even their escape plan requires paying NVIDIA.

The pricing gap:

Google: Gemini 2.5 Pro at $10 per million tokens

OpenAI: o3 at $40 per million tokens

This isn’t about Google’s generosity.

What it means

The AI race is now about who owns the infrastructure.

Google trained Gemini 3 Pro's sparse MoE architecture because TPUs make it economically viable. The architecture works because the hardware enables it. The hardware exists because Google started building it a decade ago.

The compounding loop:

Better hardware → unique architectures → better models → more revenue → more hardware investment

OpenAI can't build sparse MoE models at this scale. The communication overhead on GPUs would destroy their already thin margins.

Anthropic gets partial access through Google Cloud TPUs. But when capacity gets tight, guess who gets priority.

If you're paying NVIDIA prices, you're playing a different game. Google's playing with house money.

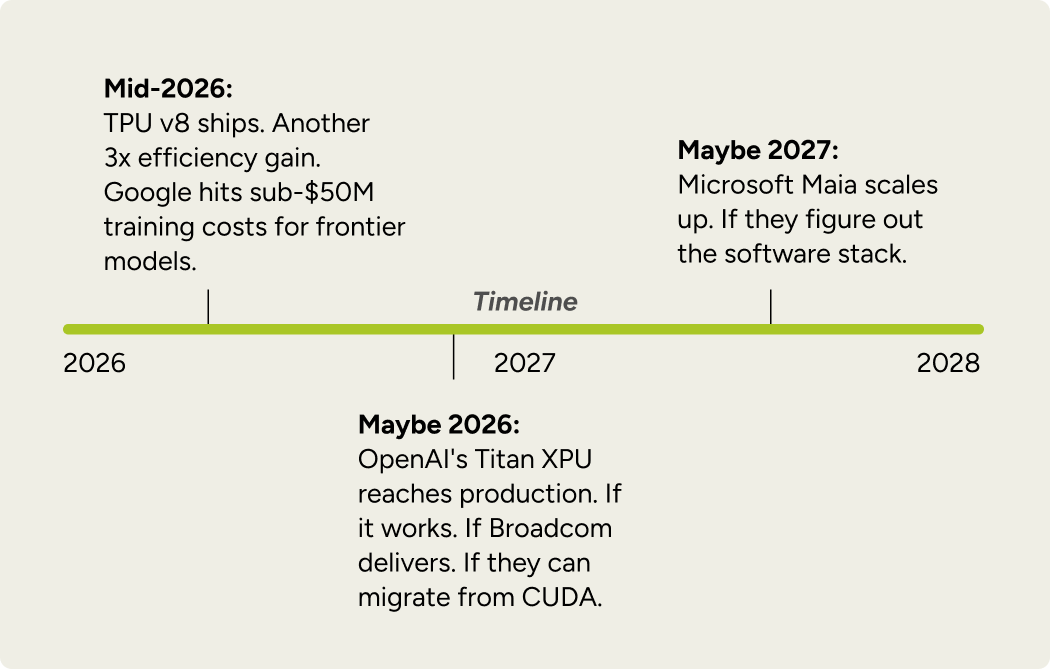

2026 inflection point

By then, Google's on TPU v9.

The cost gap becomes unbridgeable and the "NVIDIA tax" becomes existential.

Google runs inference at 1/10th the cost. They offer APIs at prices competitors can't match. They give Gemini away free to consumers.

Meanwhile, other companies without custom silicon can't even compete on price. Can't experiment at scale. Can't serve billions of users profitably.

This is how infrastructure advantage becomes market dominance.

Until next time,

Vaibhav 🤝🏻
