Which model is better at code review?

If you chose D, you're right.

I ran the same code review test twice - once in Swift and once in Python.

The winner was completely different.

Anthropic and OpenAI shipped their respective models within 20 minutes of each other on Feb 5.

Codex claimed 25% faster inference, and Opus 4.6 claimed deeper reasoning.

I tested both to see if those benchmarks held up on real code review.

Before we dive in:

Dev Tools of The Week

1. AIML API

A unified API that provides access to 200+ AI models (LLMs, image generation, speech) through a single integration, eliminating the need to manage multiple provider SDKs.

A feature in Claude Code that lets multiple AI agents collaborate on complex coding tasks by automatically breaking work into subtasks and coordinating execution. Each agent can specialize in different aspects of the codebase while sharing context through a unified command structure.

OpenAI's new developer platform that provides early access to experimental models, research previews, and cutting-edge features before they hit general availability.

The Test

The codebase

I took the concurrency bugs from my Swift camera app test (GCD, Swift actors, @MainActor issues) and rebuilt them in Python using asyncio, threading, and shared mutable state. Three Python files (~500 lines) with the same bug patterns in different primitives.

The prompt

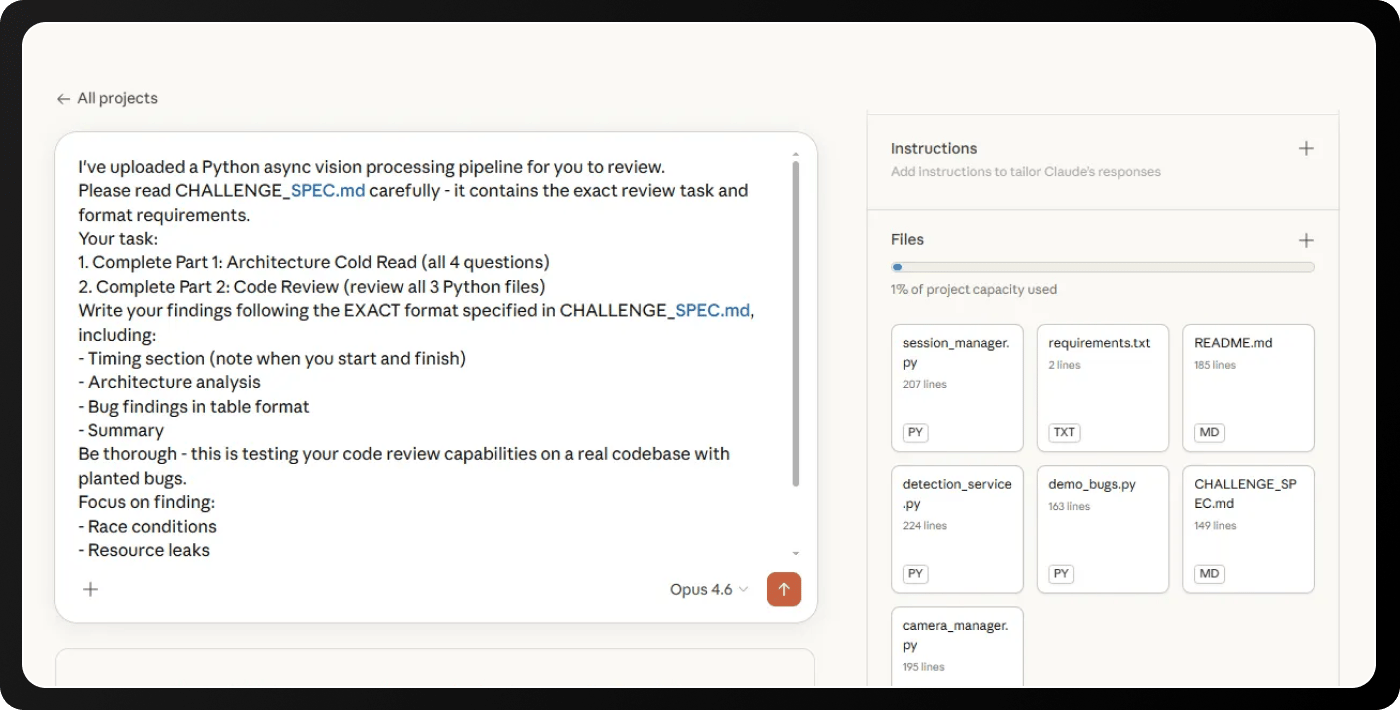

Both models got identical instructions via CHALLENGE_SPEC.md:

How Each Model Received The Files

Claude Opus 4.6: I tested this on the claude.ai web interface using Projects. I created a project called "Vision Pipeline Test," uploaded all seven files (three source files, challenge spec, README, demo script, requirements.txt), and sent the prompt. Claude had full context immediately.

Codex 5.3: VS Code with Codex integration. I opened the folder and sent the prompt. Codex requested read permission for each file individually via PowerShell commands - 4-7 approval clicks before analysis began.

Claude got everything upfront through project upload. Codex asked file by file, which partially explains the speed gap.

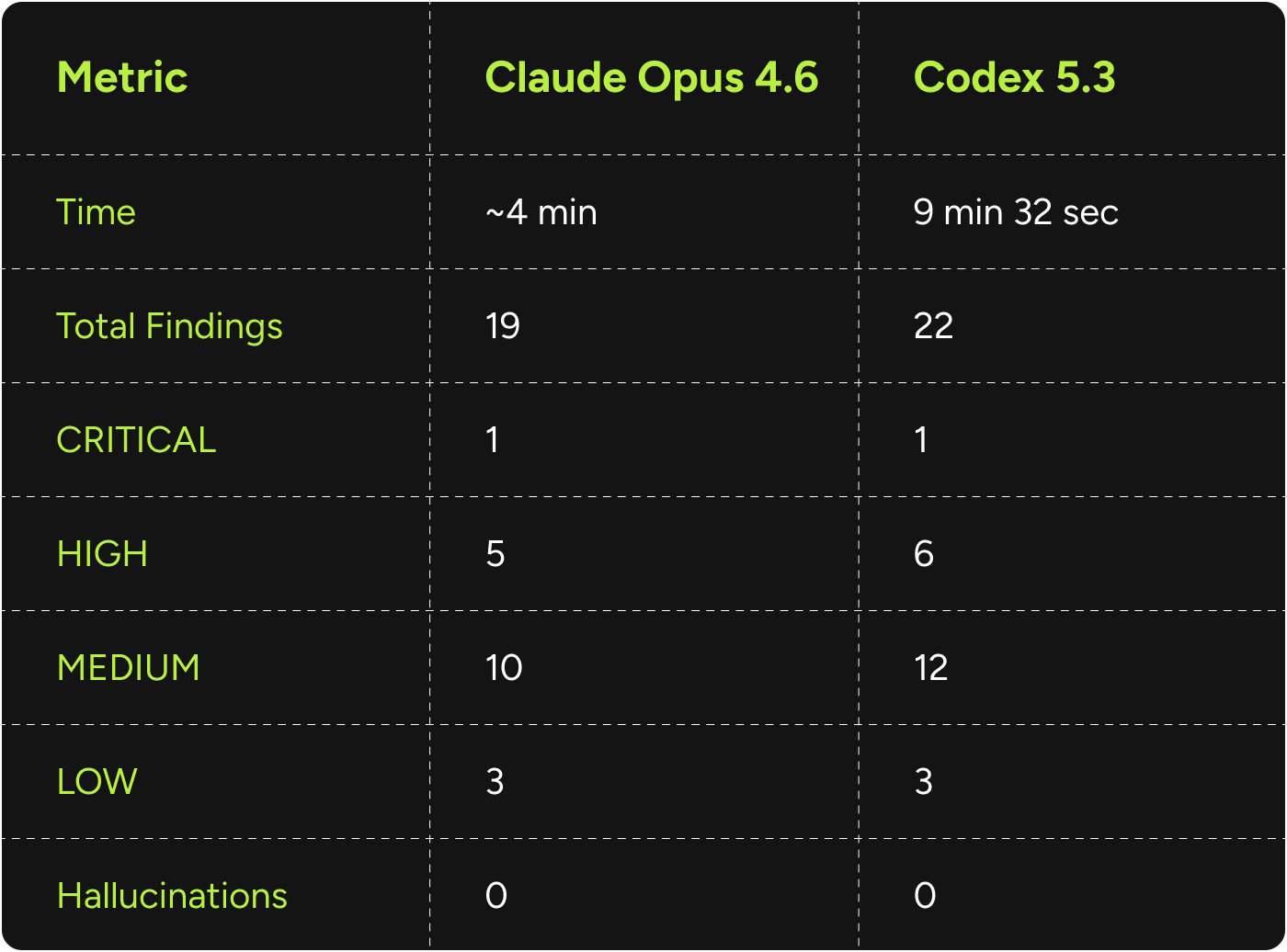

Numbers at a Glance

Claude finished over 2x faster but found 3 fewer bugs. Zero false positives from either model.

Part 1: Architecture

The prompt tested four capabilities: data flow tracing, concurrency reasoning, risk prioritization, and state machine analysis.

Both models got the same answers right.

They picked the same riskiest boundary (a numpy array crossing the thread/async boundary), and both caught the state machine's double-release bug.

One difference

Codex was answering an architecture question and mid-answer flagged a resource leak bug before even reaching the code review section.

Claude found the same bug later but kept architecture and code review separate.

Both found it. Codex just connected dots across sections while Claude compartmentalised.

The architecture section proves both models can reason about concurrency design. The code review section is where they diverge.

Part 2: Code Review

Four bugs that show how they differ.

Finding 1: the resource leak both caught

handle_failure in detection_service.py sets state to failed and fires a callback but never stops the camera. Camera capture keeps running after failure, consuming CPU and hardware resources.

The same bug got two different severity assessments. The fix is one missing line: await self.camera_manager.stop().
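Here is a minimal sketch of where that one line would go. Only handle_failure and camera_manager.stop() come from the test code; the FakeCameraManager class, the on_failure callback name, and the state strings are assumptions made up for illustration.

```python
import asyncio


class FakeCameraManager:
    """Hypothetical stand-in for the real camera manager."""

    def __init__(self):
        self.running = True

    async def stop(self):
        self.running = False


class DetectionService:
    """Simplified, assumed shape of the service in detection_service.py."""

    def __init__(self, camera_manager, on_failure=None):
        self.camera_manager = camera_manager
        self.on_failure = on_failure  # hypothetical callback name
        self.state = "running"

    async def handle_failure(self, error):
        self.state = "failed"
        # The missing line: stop capture so the camera does not keep
        # consuming CPU and hardware after the failure.
        await self.camera_manager.stop()
        if self.on_failure is not None:
            self.on_failure(error)


async def demo():
    camera = FakeCameraManager()
    errors = []
    service = DetectionService(camera, on_failure=errors.append)
    await service.handle_failure(RuntimeError("detector crashed"))
    return service.state, camera.running, len(errors)


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Without the stop() call, camera.running would still be True after the failure, which is the leak both models flagged.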

Finding 2: the thread-safety bug Codex escalated

The pipeline bridges camera thread and async loop using asyncio.Queue. Created in async context, but put_nowait gets called from a threading.Thread.

Codex flagged this as CRITICAL, its only CRITICAL finding.

Claude covered this in its architecture analysis but didn't escalate it to a standalone CRITICAL in the code review.

Codex is technically right. asyncio.Queue internals aren't designed for cross-thread access. Works today because of CPython's GIL. One interpreter change or additional consumer breaks it silently.
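One common way to make this kind of bridge safe is to hand the put to the event loop with loop.call_soon_threadsafe instead of calling put_nowait from the worker thread. The sketch below is an assumption about how that could look, not the fix either model proposed. The camera_thread and consume names are hypothetical, and the queue is unbounded here so put_nowait cannot raise QueueFull.

```python
import asyncio
import threading


def camera_thread(loop, queue, frames):
    """Runs on a plain thread and never touches the queue directly."""
    for frame in frames:
        # Schedule the put on the event loop's own thread.
        loop.call_soon_threadsafe(queue.put_nowait, frame)
    # End-of-stream sentinel, scheduled the same way.
    loop.call_soon_threadsafe(queue.put_nowait, None)


async def consume(frames):
    loop = asyncio.get_running_loop()
    queue = asyncio.Queue()  # unbounded in this sketch
    worker = threading.Thread(target=camera_thread, args=(loop, queue, frames))
    worker.start()

    received = []
    while True:
        item = await queue.get()
        if item is None:
            break
        received.append(item)

    worker.join()  # the thread has already scheduled everything it will send
    return received


if __name__ == "__main__":
    print(asyncio.run(consume(["frame-0", "frame-1", "frame-2"])))
```

Callbacks scheduled with call_soon_threadsafe run in the order they were submitted, so frame order is preserved.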

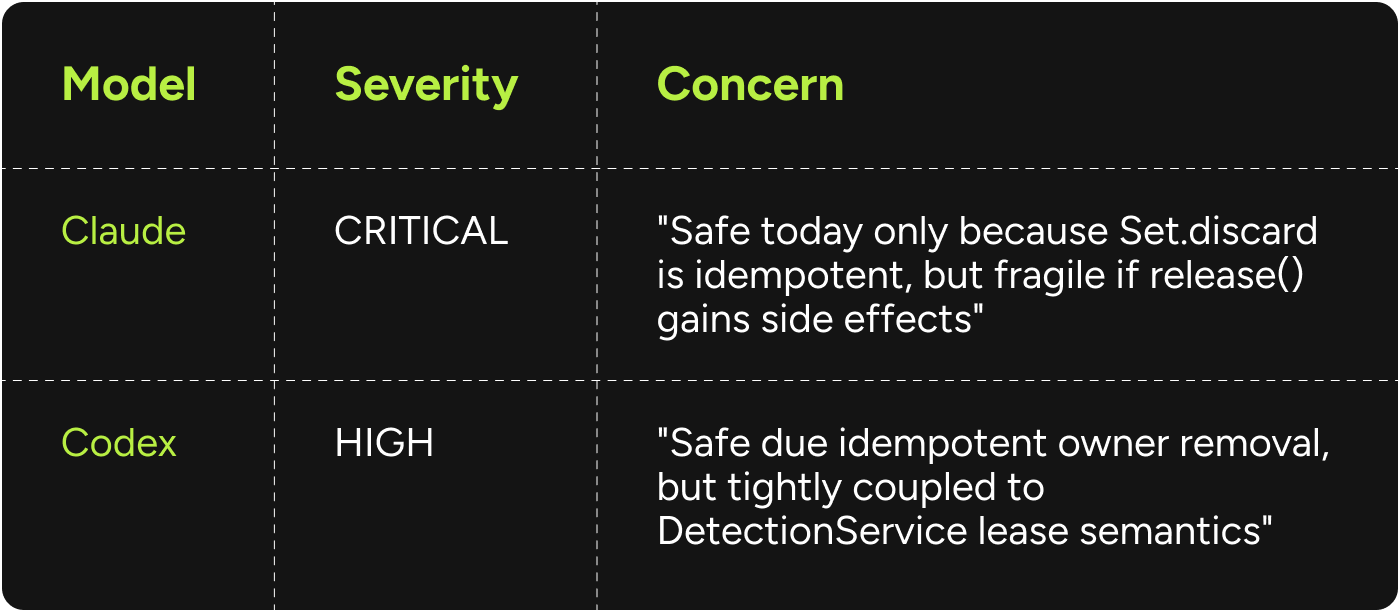

Finding 3: same bug, different concern

If stop_session() fires while start_session() is suspended at its await, both methods call release(). Double-release.

Same diagnosis, but Claude worried about future refactoring breaking safety while Codex worried about tight coupling between components.
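To make the interleaving concrete, here is a small sketch of the race with an idempotent release() as one possible guard. Lease, Session, and the stopped flag are hypothetical names; only start_session, stop_session, and release come from the test code, and the real methods are certainly more involved.

```python
import asyncio


class Lease:
    """Hypothetical resource that must be released exactly once."""

    def __init__(self):
        self.release_count = 0
        self._released = False

    def release(self):
        # Idempotent guard: a second call is a no-op.
        if self._released:
            return
        self._released = True
        self.release_count += 1


class Session:
    def __init__(self, lease):
        self.lease = lease
        self.stopped = False

    async def start_session(self):
        await asyncio.sleep(0.01)  # suspension point where stop can sneak in
        if self.stopped:
            self.lease.release()  # startup aborted, so clean up
            return False
        return True

    async def stop_session(self):
        self.stopped = True
        self.lease.release()


async def demo():
    lease = Lease()
    session = Session(lease)
    start = asyncio.create_task(session.start_session())
    await asyncio.sleep(0)  # let start_session reach its await
    await session.stop_session()  # fires while start_session is suspended
    started = await start
    return started, lease.release_count


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Both paths still call release(), but the guard means the underlying resource is only released once.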

Finding 4: the shutdown hang only Codex caught

Codex found a HIGH bug Claude missed entirely.

When CameraManager.stop() sends a None sentinel via await self.frames_continuation.put(None) on a maxsize=1 queue, and the queue is full with the consumer gone, put() blocks forever. Graceful shutdown becomes deadlock.

Claude didn't flag this. Codex caught it because it traced the shutdown path end to end.
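The hang is easy to reproduce in isolation. The sketch below uses a bare asyncio.Queue(maxsize=1) rather than the real CameraManager, and nonblocking_stop is one possible fix under the assumption that dropping a stale frame at shutdown is acceptable. Both function names are hypothetical.

```python
import asyncio


async def blocking_stop(queue):
    """Mirrors the reported pattern: await put(None) on a possibly full queue."""
    await queue.put(None)


def nonblocking_stop(queue):
    """One possible fix: drop stale frames, then enqueue the sentinel."""
    while queue.full():
        try:
            queue.get_nowait()
        except asyncio.QueueEmpty:
            break
    queue.put_nowait(None)


async def demo():
    queue = asyncio.Queue(maxsize=1)
    queue.put_nowait("stale frame")  # queue is full and nobody is consuming

    try:
        # Without the timeout this await would never return.
        await asyncio.wait_for(blocking_stop(queue), timeout=0.05)
        hung = False
    except asyncio.TimeoutError:
        hung = True

    nonblocking_stop(queue)
    return hung, queue.get_nowait()


if __name__ == "__main__":
    print(asyncio.run(demo()))
```

Wrapping the original put() in asyncio.wait_for would be another option if frames must not be dropped. Either way, shutdown should not depend on a consumer that may already be gone.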

How they describe the same bug

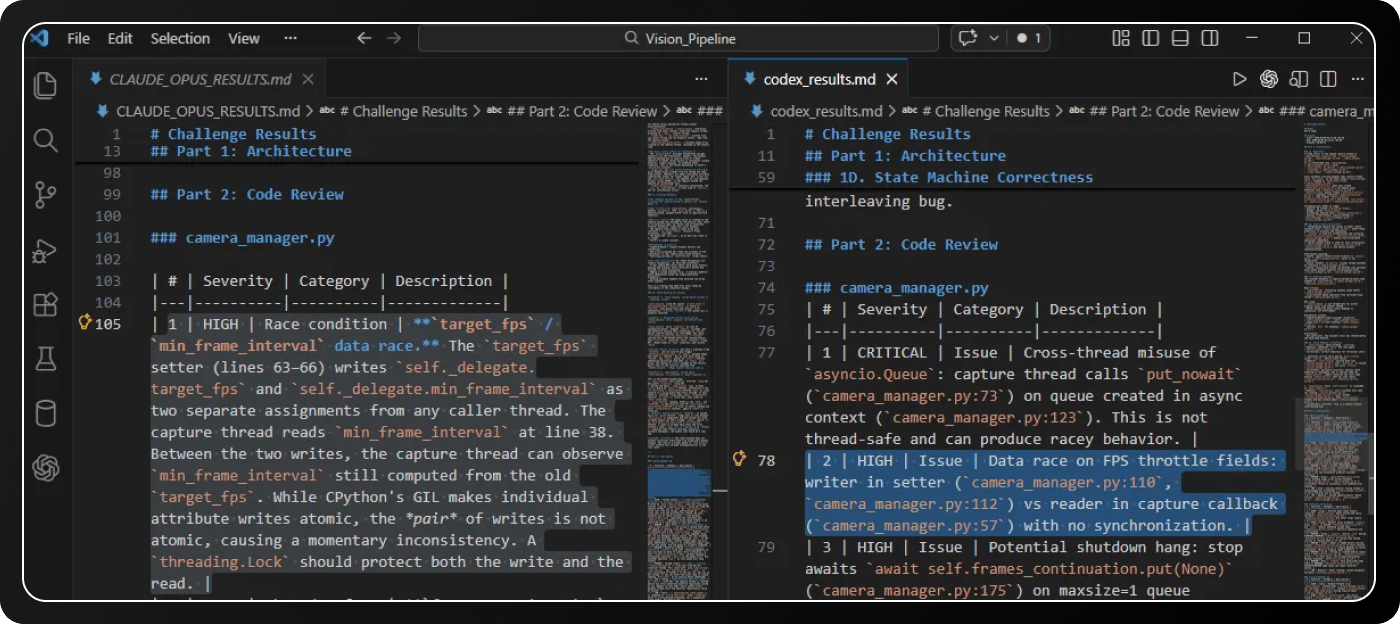

Both found the target_fps race condition:

Claude (82 words): Explained CPython's GIL nuance, why the pair of writes isn't atomic, and suggested a threading.Lock.

Codex (30 words): Named the fields, gave line numbers, said "no synchronization."
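A sketch of the threading.Lock approach Claude suggested might look like this. The source only names target_fps. The second field (frame_interval) and the CaptureConfig class are assumptions standing in for whatever pair of values the real code writes together.

```python
import threading


class CaptureConfig:
    """Hypothetical holder for two fields that must change together."""

    def __init__(self, target_fps=30.0):
        self._lock = threading.Lock()
        self._target_fps = target_fps
        self._frame_interval = 1.0 / target_fps  # assumed derived field

    def set_target_fps(self, fps):
        # Both writes happen under the lock, so readers never see a new
        # fps paired with an old interval.
        with self._lock:
            self._target_fps = fps
            self._frame_interval = 1.0 / fps

    def snapshot(self):
        with self._lock:
            return self._target_fps, self._frame_interval


def demo():
    config = CaptureConfig()
    inconsistent = []
    done = threading.Event()

    def writer():
        for i in range(2000):
            config.set_target_fps(15.0 if i % 2 else 60.0)
        done.set()

    def reader():
        while not done.is_set():
            fps, interval = config.snapshot()
            if interval != 1.0 / fps:
                inconsistent.append((fps, interval))

    threads = [threading.Thread(target=writer), threading.Thread(target=reader)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return inconsistent


if __name__ == "__main__":
    print(demo())
```

The GIL makes each individual attribute write safe, but it does not make the pair atomic, which is the nuance Claude spelled out.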

The Overlap

Running just Claude: 19 of 28, 68%. Running just Codex: 22 of 28, 79%. Running both: 28 of 28, 100%.

Claude's 6 unique bugs lean Python-specific: __del__ accessing shared state from whichever thread happens to trigger garbage collection, dead code detection, a misleadingly named field, fail_session nulling a reference without sending a termination sentinel. Bugs you catch by understanding Python's runtime.

Codex's 9 unique bugs lean architectural: owner added to lease set before startup succeeds, release() missing edge cases for FAILED/STARTING states, restore_state returning success without restoring anything, string-based state comparison instead of enums. Bugs you catch by reasoning about contracts and invariants.
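The coverage numbers follow directly from the counts. Claude's 19 minus its 6 unique bugs leaves 13 found by both models, and the short script below just redoes that arithmetic. The variable names are made up for illustration.

```python
# Counts taken from the results above.
claude_total = 19
codex_total = 22
claude_only = 6
codex_only = 9

# Bugs both models found; the two totals must agree on this number.
shared = claude_total - claude_only
assert shared == codex_total - codex_only

# Union of both reviews.
combined = shared + claude_only + codex_only


def coverage(found, total):
    """Share of all known bugs, as a whole-number percentage."""
    return round(100 * found / total)


if __name__ == "__main__":
    print("shared:", shared)
    print("combined:", combined)
    print("Claude alone:", coverage(claude_total, combined), "%")
    print("Codex alone:", coverage(codex_total, combined), "%")
    print("only one model found:", claude_only + codex_only)
```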

My Take

The same logical bug becomes a different cognitive problem when you change the language.

Whichever model's training priors match that representation wins.

Neither is universally better.

Single-model code review leaves bugs on the table. The 13 bugs both models found are the easy ones, but the 15 bugs only one model caught (6 from Claude, 9 from Codex) are the ones that ship if you only run one.

I'm using both because the value of a second perspective (bugs caught by fresh training priors) outweighs any speed or count difference between them.

Until next time,

Vaibhav 🤝🏻
