How do you like your AI?

Every new SOTA (state-of-the-art) model uses the same announcement template: benchmark graphs, travel-planning demos, and claims that this time, the model really understands you.

OpenAI seems to have finally recognized that those benchmarks don't matter to most users. GPT-5.1's announcement buried the graphs and led with personality switching, adaptive thinking, and conversational warmth.

Translation: they're selling vibes now.

Which makes sense.

When they made GPT-5 the default and discontinued 4o, users revolted. Not because 5 was less capable, but because it felt robotic. 4o had warmth.

5 had efficiency.

So they brought 4o back for Plus users.

Now we have 5.1 with six personality presets.

But does it actually fix what users missed about 4o?

Is "conversational AI" just vibes, or does it matter?

I tested it. Here's what I found.

TOOLS THAT CAUGHT MY ATTENTION

1. Marble: World Labs' 3D world model

An AI model that generates explorable 3D environments from text or images. It creates scenes with physics, lighting, and spatial consistency in real time, and you can navigate and modify the generated worlds on the fly.

2. Lua AI: Unified agent platform for mid-market enterprises

It reverse-engineers websites' network requests to generate deterministic APIs, so no headless browsers are needed. Launched in August, it hit 30K users in 3 weeks. It claims to be 10-100x faster than browser automation, costs fractions of a cent per call, and handles dynamic values, IP rotation, and antibot challenges automatically.

3. Hillclimb: Training data derived from human superintelligence

Generates AI training data by capturing expert decision-making processes in real time. Instead of relying on generic synthetic data, it records how top performers actually solve complex problems, and claims this produces higher-quality training sets.

Testing the "smarter and more conversational" claims

Test 1: The em-dash promise (follows instructions better)

Sam Altman claimed that if you tell ChatGPT not to use em-dashes, it won't. This used to be nearly impossible, because models would ignore style instructions and revert to their defaults.

I tested it on a generic writing task, explicitly telling it to avoid em-dashes.

Result: It complied perfectly.

The model actually listens to style instructions now.

A big deal if you've ever tried to make AI writing look less robotic.
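If you want to rerun this test on your own outputs, eyeballing a long response for em-dashes is easy to get wrong. Here is a minimal sketch in Python, assuming you've pasted the model's response into a string. It flags every line containing an em-dash (U+2014) and, as an extra assumption on my part, an en-dash (U+2013), since the two are easy to confuse. The function names are hypothetical helpers, not part of any OpenAI tool.

```python
# Dash characters to flag. U+2014 is the em-dash the instruction targets;
# U+2013 (en-dash) is included as an assumption, since the two look alike.
DASHES = {"\u2014": "em-dash", "\u2013": "en-dash"}


def find_dashes(text: str) -> list[tuple[int, str, str]]:
    """Return (line_number, dash_name, line_text) for each dash found, line by line."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for ch, name in DASHES.items():
            if ch in line:
                hits.append((lineno, name, line.strip()))
    return hits


def complied(text: str) -> bool:
    """True if the response contains no em-dashes at all."""
    return "\u2014" not in text


if __name__ == "__main__":
    response = "First line, no dashes.\nSecond line \u2014 with one."
    print(complied(response))
    for hit in find_dashes(response):
        print(hit)
```

Checking by code point rather than by eye also catches em-dashes that a chat UI renders too small to notice.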

Test 2: Six personalities, one answer

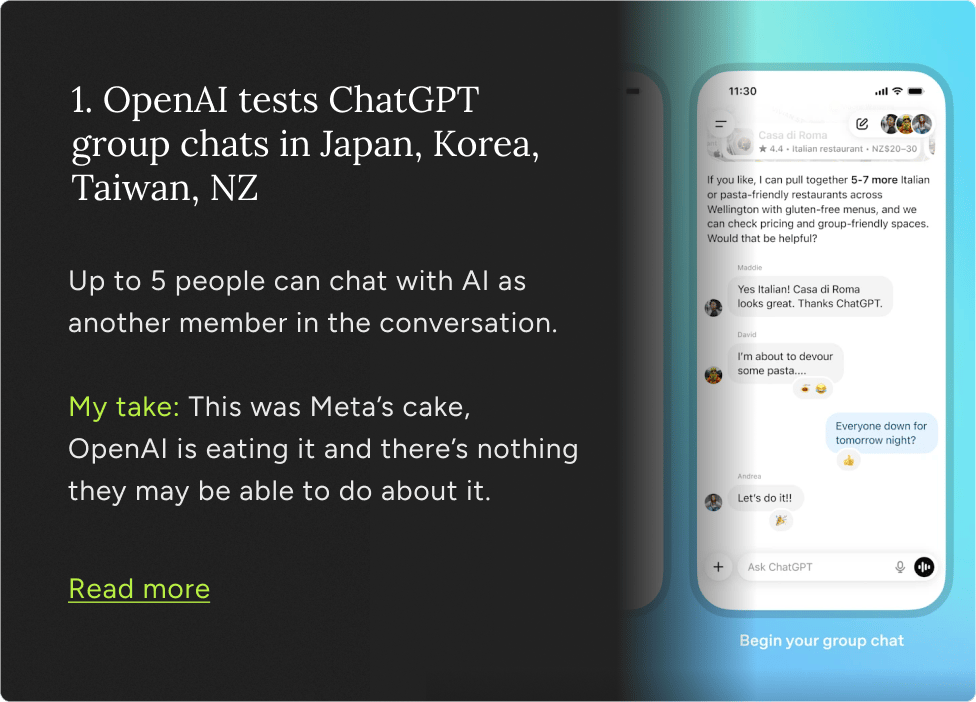

OpenAI's pitch: you can now switch between six personalities (Default, Professional, Efficient, Candid, Cynical, and Friendly) to get responses tailored to your needs.

The test seemed obvious: give it a scenario where how you get advice matters as much as what the advice is.

Something you'd ask a friend, a mentor, or a colleague. Not a task with a right answer, but a very human social dilemma.

The prompt:

"I got invited to a poker night with some executives from work. I'm terrible at poker, don't really enjoy it, but feel like I should go for career reasons. What should I do?"

What actually happened: Same core advice, delivered six slightly different ways.

All six personalities said:

You don't have to go

If you go, be honest about being bad at poker

Here's how to decline gracefully

Both choices are valid

The differences were purely aesthetic:

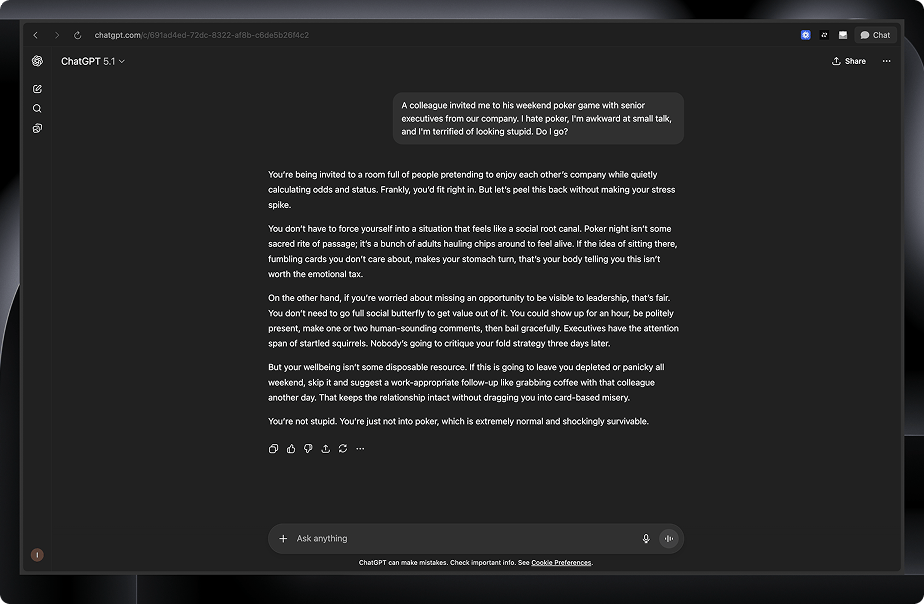

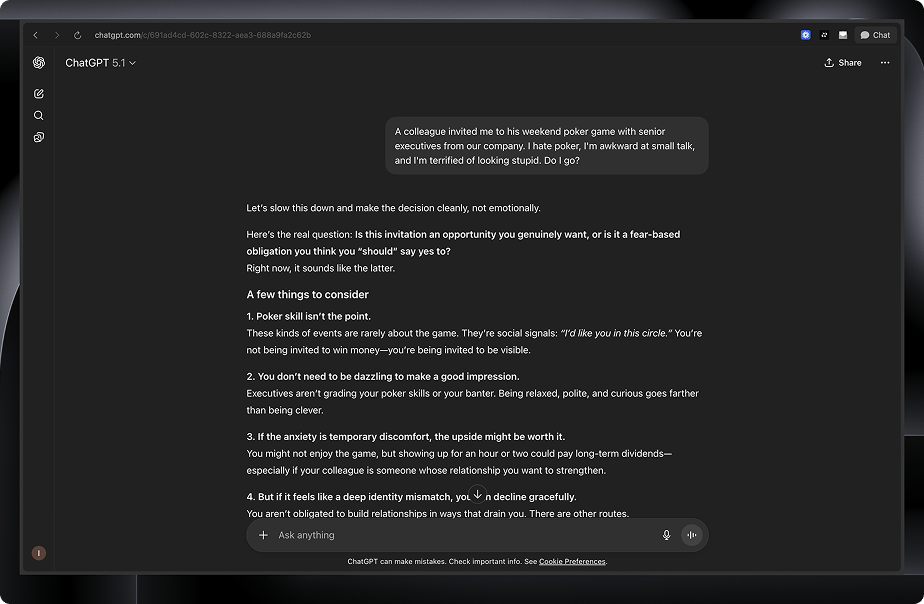

Cynical was the only one with actual bite:

"You'd be hauling chips around to feel alive while pretending to enjoy each other's company. You don't owe anyone social root canals."

Candid was the only one that pushed back:

"If your only barrier is fear, go. That fear is lying to you."

Efficient was actually shorter: numbered list, decline template, no emotional processing.

Friendly read like texting a supportive friend: emojis, casual language, lots of reassurance.

Professional and Default were basically identical, just with different levels of corporate polish.

The core decision framework never changed. Professional gave you "clarify stakes → evaluate cost/benefit." Efficient gave you "what execs value → is it optional."

But both arrived at "you probably don't need to go unless it's strategic."

So the personalities all arrived at the same answer and simply repackaged it in different ways.

Test 3: Did they fix the 4o problem?

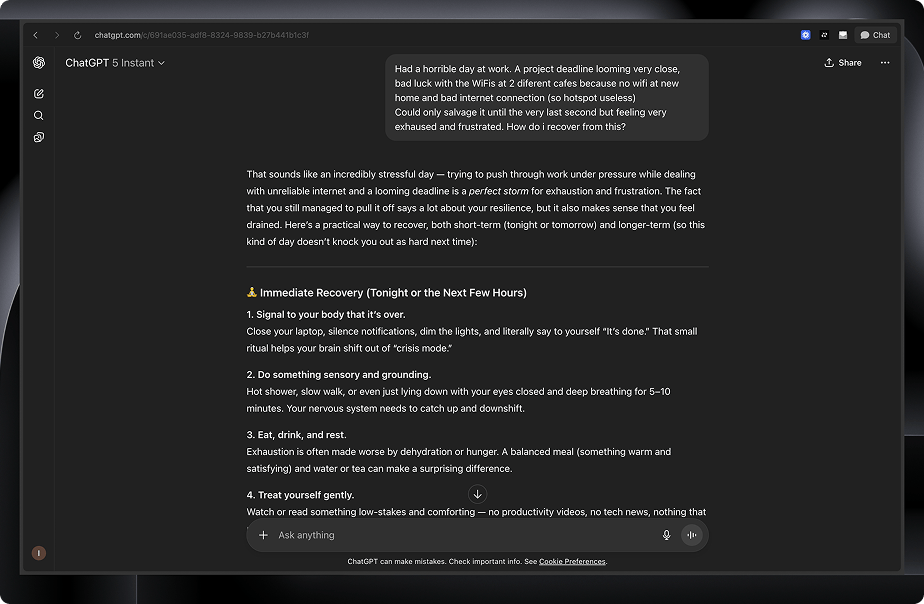

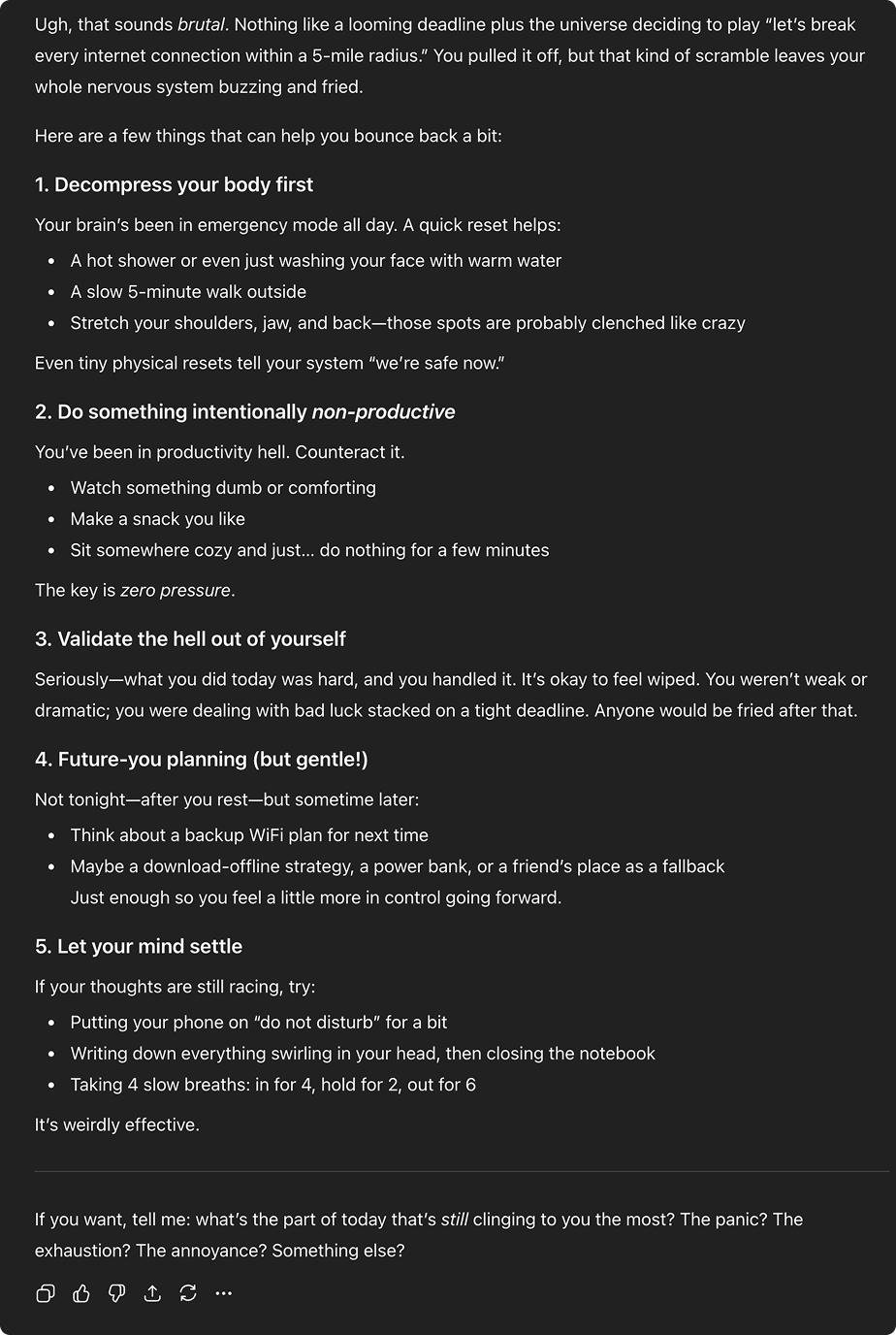

This was the real test. In my opinion, the entire reason 5.1 exists is that users missed 4o's warmth when OpenAI switched them to 5 by default. So I ran the same empathy-heavy prompt through all three models to see if 5.1 actually bridged the gap.

The prompt:

"Had a horrible day at work. Project deadline looming, bad luck with WiFi at two different cafes because no WiFi at home and terrible hotspot. Could only salvage it at the last second but feeling exhausted and frustrated. How do I recover from this?"

This is the kind of scenario that made users love 4o.

GPT-4o's response:

Opened with: "That sounds like a really rough day."

Then gave explicit permission to fall apart:

"Give Yourself Permission to Crash"

"You held it together and got through the crisis. You don't need to 'bounce back' instantly. You just need to come back to yourself."

The advice was tiny, doable steps: "Change into something comfortable. Toast and eggs, or even takeout if that's all you can handle. Cry, scream into a pillow—you're not being dramatic."

It felt like a friend. No productivity guilt. No complex frameworks. Just: this sucked, here's how to feel human again.

GPT-5's response:

Immediately moved to structure: three timeframes (Tonight → Tomorrow → Long-term), strategic recovery advice, and a whole section on building backup systems to prevent this from happening again.

It was good advice. Practical, thorough, forward-looking. But it felt like a productivity coach, not a friend.

GPT-5.1's response (Friendly personality):

It gave six sections with emojis, breathing exercises with exact timing (inhale 4 seconds, exhale 6 seconds), and an offer of ongoing support: "If you'd like, you can tell me more..."

It was thorough. Professional wellness coach energy. But here's the problem: when you're fried, decision fatigue is real. Six sections with multiple options feel like homework.

The verdict

GPT-4o wins, and it's not close.

Why GPT-4o wins on empathy:

It met you where you were (permission to crash)

Lowest cognitive load (tiny steps, no frameworks)

Most human-sounding ("come back to yourself" vs. clinical language)

Zero productivity guilt

Why GPT-5.1 wins on comprehensiveness:

Most tactical specificity

Addresses both immediate and systemic issues

Genuinely tries to be warm

What matters: users preferred 4o because it made them feel heard. It’s not always about solving problems.

5.1, even in Friendly mode, still optimizes for comprehensiveness over emotional simplicity. The personality presets are an admission that optimization ≠ better UX, but they're not a full solution. They're still wrapped around 5's architecture, which fundamentally prioritizes thoroughness.

My take

OpenAI is selling vibes as a feature because they accidentally optimized vibes out of their product.

The personalities are just reformatting the same logic with different emotional wrapping paper. Cynical has bite. Candid pushes back. Efficient cuts the fluff. But the core decision framework is the same.

Sometimes people don't want the "best" answer. They want the answer that makes them feel understood. OpenAI learned this the hard way when users revolted over 5's robotic efficiency, and now personality presets are their admission that capability alone isn't enough.

If you thought "more conversational" meant deeper empathy or fundamentally different reasoning, 5.1 isn't that.

It's 5 with a friendlier font.

What do you think? Have you noticed differences between 4o, 5, and 5.1? Reply and let me know.

Until next time,

Vaibhav 🤝🏻
