I just spent 17 minutes watching ChatGPT "think deeply" about startup funding data.

What do you do when AI takes 17 mins to research?

In that same time, Grok finished the job twice, Perplexity wrapped it up with a bow, and Gemini... well, Gemini tried its best.

Here's what nobody tells you about "deep research" features: they're all doing the same thing (searching, synthesizing, citing sources), but the results are wildly different.

Some finish before you can make coffee. Others take long enough for an actual nap.

I gave four AI tools identical research tasks and timed them like a Formula 1 race. Spoiler: the fastest one wasn't the worst, and the slowest one definitely wasn't the best.

Before we dive in, here's what happened this week:

AI Tools That Made Me Question Everything

1. Fusion 1.0: An AI agent that actually speaks product, design, and code.

Builder.io launched Fusion, an AI agent that connects your entire product workflow: PMs tag it in Slack, designers import from Figma, developers get PRs. It reads Jira tickets, turns them into code, understands your design system, and responds to PR feedback like a real developer.

2. Notion New Tab: Replace Chrome's useless New Tab with your Notion dashboard.

Official Notion Chrome extension that replaces your new tab page with any Notion page you choose. Configure it to open your home, last visited page, top sidebar page, or Notion AI. Simple setup: pick your workspace, select your page, done. Every new tab now opens your actual work instead of Google's search bar and some websites you visited once.

3. Flick: Figma + Cursor for AI filmmaking, because 8-second clips aren't cinema.

YC-backed filmmaking tool that treats AI video generation like actual directing instead of prompt-roulette. Infinite canvas where you work with scripts, characters, and scenes as connected nodes. Built-in editing tools so you can iterate without starting over.

Getting to the main topic:

Every AI company has been boasting about their "deep research" capabilities.

OpenAI has Deep Research mode. Google shipped it in Gemini Flash. Perplexity initially built their entire product around it. Grok quietly added it without much fanfare.

But here's the thing: "deep research" is just a marketing term. What matters is:

Does it find the right information?

Does it follow your instructions?

Does it finish before you forget why you asked?

I decided to find out which tool delivers.

The challenge: Two impossible research tasks

I gave all four tools the exact same prompts. Complex, specific, with strict formatting requirements that would reveal whether these tools understand instructions or just throw text at you.

Test 1: Build a dated timeline of major LLM updates from the last 12 months

The requirements were brutal:

ISO date format

Primary source links with deep links to exact paragraphs

Evidence quotes (10-25 words)

No rumors, no leaks, only shipped features

8-12 rows covering at least four vendors

This would require verification, date-checking, and reading release notes.
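To make "format adherence" concrete, here is a minimal sketch of how you could mechanically check a Test 1 answer against those rules. It assumes the answer has already been parsed into rows with hypothetical `date`, `vendor`, `source_url`, and `quote` fields, and it treats a "deep link to an exact paragraph" as a URL with a non-empty `#fragment`. Both are my assumptions, not something any of these tools actually output. It can't judge "no rumors, only shipped features"; that part still needs a human.

```python
import re
from datetime import date, datetime, timedelta
from urllib.parse import urlparse

ISO_DATE = re.compile(r"\d{4}-\d{2}-\d{2}")  # ISO 8601 calendar date, e.g. 2025-09-29


def check_timeline(rows: list[dict], as_of: date) -> list[str]:
    """Hypothetical checker: return rule violations (empty list = table passes)."""
    problems: list[str] = []

    # Table-level rules: 8-12 rows covering at least four vendors.
    if not 8 <= len(rows) <= 12:
        problems.append(f"expected 8-12 rows, got {len(rows)}")
    vendors = {row["vendor"] for row in rows}
    if len(vendors) < 4:
        problems.append(f"expected at least 4 vendors, got {len(vendors)}")

    for i, row in enumerate(rows, start=1):
        # Date must be YYYY-MM-DD, a real calendar date, and within the last 12 months.
        if not ISO_DATE.fullmatch(row["date"]):
            problems.append(f"row {i}: date {row['date']!r} is not YYYY-MM-DD")
        else:
            try:
                released = datetime.strptime(row["date"], "%Y-%m-%d").date()
            except ValueError:
                problems.append(f"row {i}: {row['date']!r} is not a real calendar date")
            else:
                if not as_of - timedelta(days=365) <= released <= as_of:
                    problems.append(f"row {i}: {released} is outside the last 12 months")

        # Assumption: a paragraph-level deep link is an http(s) URL with a #fragment.
        url = urlparse(row["source_url"])
        if url.scheme not in ("http", "https") or not url.fragment:
            problems.append(f"row {i}: source link has no #fragment deep link")

        # Evidence quote must be 10-25 words.
        words = len(row["quote"].split())
        if not 10 <= words <= 25:
            problems.append(f"row {i}: quote has {words} words, expected 10-25")

    return problems
```

Returning a list of problems instead of a single pass/fail makes it easy to see *how* an answer drifted from the brief, which is exactly where the tools below differed.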

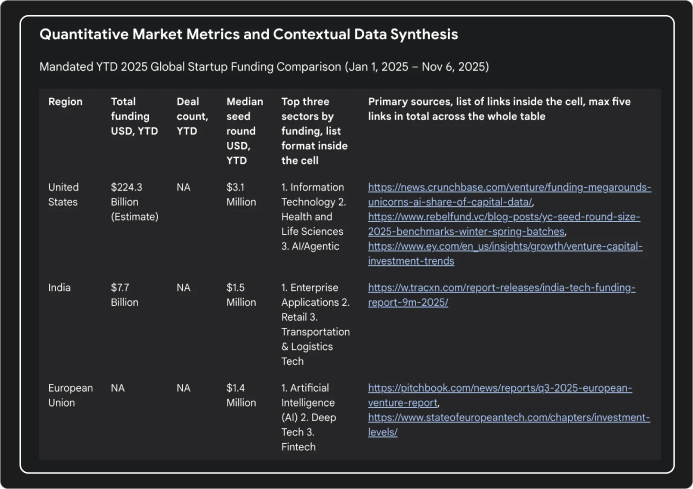

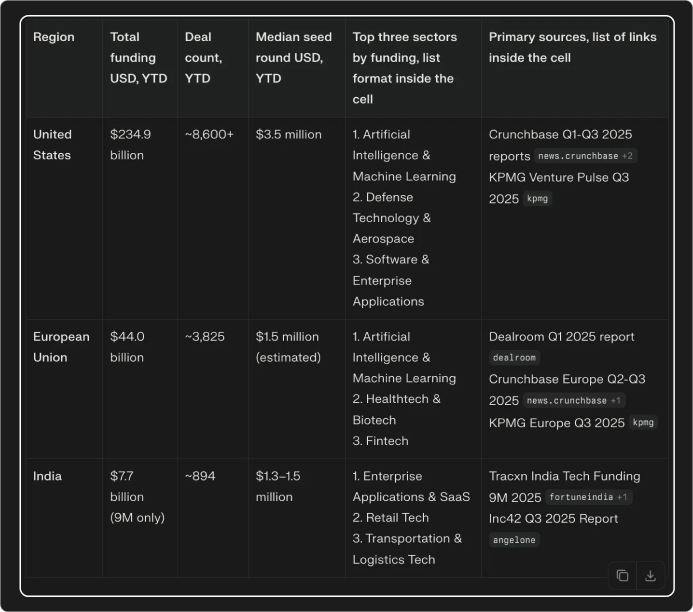

Test 2: Compare 2025 YTD startup funding across India, US, and EU

Even nastier:

Normalized USD figures with stated FX rates

Median seed rounds by region

Top three sectors per region

Max five source links total across the entire table

60-90 word regional notes with policy context

This tests whether the AI can synthesize data and not just dump information.
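The same idea works for Test 2. Below is a minimal sketch that checks three of the mechanical rules: at most five source links, 60-90 word notes for India, US, and EU, and an FX rate actually stated somewhere. It assumes you have the answer as raw Markdown plus the regional notes pulled out into a dict; `check_funding_report` and that input shape are hypothetical. It counts *distinct* URLs (my reading of "five source links total") and uses a deliberately crude regex, so treat it as a sanity check, not a parser. Whether the numbers and sectors are right is still on you.

```python
import re

# Crude URL matcher: stops at whitespace and common Markdown closers.
URL = re.compile(r"https?://[^\s)\]>]+")
# Heuristic for "FX rates were stated": the word FX or the phrase "exchange rate".
FX_MENTION = re.compile(r"\bFX\b|exchange rate", re.IGNORECASE)


def check_funding_report(report_markdown: str, notes: dict[str, str]) -> list[str]:
    """Hypothetical checker: return rule violations (empty list = report passes)."""
    problems: list[str] = []

    # Max five source links across the whole table (distinct URLs).
    links = set(URL.findall(report_markdown))
    if len(links) > 5:
        problems.append(f"{len(links)} distinct source links; the limit is 5")

    # Each region needs a 60-90 word note.
    for region in ("India", "US", "EU"):
        note = notes.get(region)
        if note is None:
            problems.append(f"missing regional note for {region}")
            continue
        words = len(note.split())
        if not 60 <= words <= 90:
            problems.append(f"{region} note has {words} words, expected 60-90")

    # USD normalization is only checkable if the FX rates are written down.
    if not FX_MENTION.search(report_markdown):
        problems.append("no FX rate stated for the USD normalization")

    return problems
```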

Test 1: The LLM timeline challenge

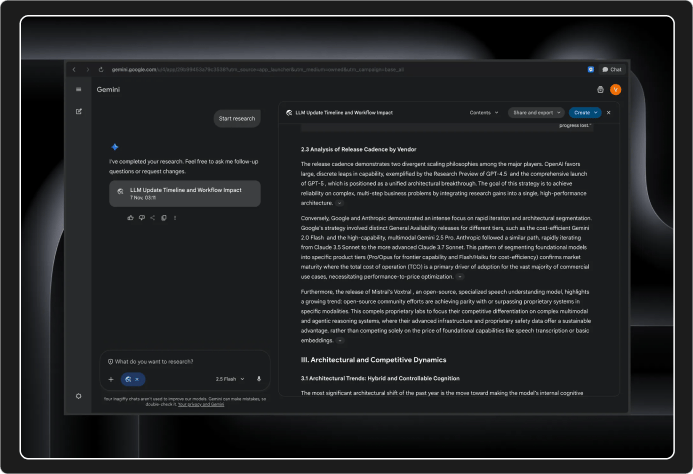

Gemini 2.5 Flash: The overthinker

Time taken: 3:50

First impressions: Gemini asked for confirmation before starting, which felt weirdly polite for an AI. Like it was saying "you sure you want me to work this hard?"

I appreciated the ability to edit the plan before execution. But I skipped it because I wanted to see what it would do by default.

What it got right:

Captured minor quality-of-life improvements (like OpenAI's update from literally yesterday)

Solid trend analysis, particularly around multi-modal capabilities

Export options are genuinely impressive: Google Doc, webpage, infographic, audio overview, even a quiz

What it completely missed:

Missed the Claude Sonnet 4.5 launch. That's not a small oversight; it was one of the biggest releases of the year.

Didn't stick to my format at all. I asked for a Markdown table with specific columns. It gave me... something else.

Way too verbose. I wanted crisp insights, not an essay.

The telling detail: Gemini's export features are cooler than its actual research. That's backwards.

Grade: B- for effort, C+ for execution

Grok: The speed DEMON

Time taken: 1:10 (!)

First impressions: Wait, that's it? I checked twice, thinking it had crashed. Nope. Grok had just finished.

What it got right:

Captured Claude Sonnet 4.5 (which Gemini somehow missed)

Stuck to the prescribed format like it read the instructions

Succinct output that respected my time

Clean, scannable table structure

What it missed:

The "Top 3 game-changers" picks felt safe and obvious

Could have gone deeper on source verification

Some dates felt approximate rather than exact

The telling detail: Grok finished in the time it took Gemini to warm up. And the output was better.

Grade: A- for speed and format adherence, B+ for insight depth

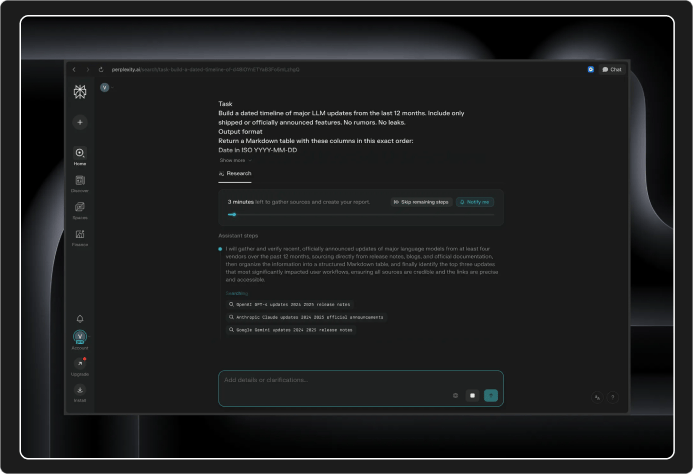

Perplexity: The UX champion

Time taken: 2:10 (estimated 4 minutes, delivered in roughly half that)

First impressions: The only tool that showed a countdown timer. Small detail, massive UX win. I knew what to expect.

What it got right:

Option to skip remaining steps if you want to speed things up

Notification feature so you don't have to babysit the tab

Format adherence was spot-on

Captured all major launches accurately

Best "Top 3 game-changers" picks by far; they reflected what changed user workflows, not just what was technically impressive

What it missed:

Honestly? Not much. Research was once their entire product thesis, and it shows.

The telling detail: Perplexity under-promised (4 minutes) and over-delivered (2:10). That's the opposite of most AI tools.

Grade: A for execution, A+ for understanding what matters

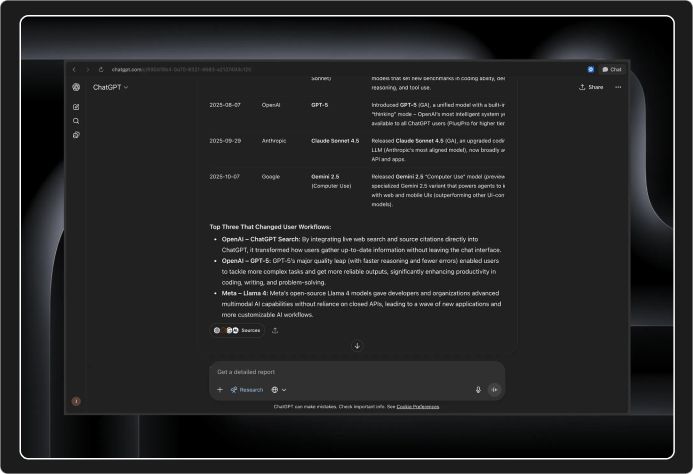

ChatGPT: The perfectionist who takes forever

Time taken: 17 minutes (!!)

First impressions: ChatGPT asked three clarifying questions before starting. I appreciated the thoughtfulness, but it added 30 seconds. Still counting it.

The experience:

I took an actual nap. Not kidding.

ChatGPT now lets you work on other chats while research runs in the background, which is nice because you'll need something to do

The activity sidebar showing its thinking process is oddly satisfying to watch

What it got right:

Most thorough output by far

Captured details the others missed

Deep source verification

What it completely missed:

17 minutes for research that Grok finished in 1:10 is inexcusable

The "Top 3 game-changers" were hilariously biased toward OpenAI's own features

Being thorough doesn't matter if I've moved on to three other tasks by the time you finish

The telling detail: ChatGPT took several minutes just to start researching. The others were already presenting results.

Grade: A for thoroughness, D for speed, C overall because time is money

Test 2: The startup funding challenge

I ran the same four tools through the funding comparison task. Here's what changed:

Gemini (3:20): Same problems, different test. Verbose, ignored my format, hard to extract insights from. I love Gemini for other tasks, but deep research isn't it.

Grok (1:20): Fastest again. Output quality was significantly better than Gemini's, and it stuck to the format. The sources were credible and the insights were actionable.

Perplexity (2:30): Similar output quality to Grok, but cited different (equally credible) sources. This is interesting. Seeing multiple source perspectives gives you a more complete picture. Numbers were in the same ballpark but not identical.

ChatGPT (Still running): I literally ran Test 2 first this time, and all three other tools finished before ChatGPT completed Test 1. That's not a typo.

Which Tool Won?

Let me be direct: ChatGPT's deep research is impressively thorough and unjustifiably slow.

17 minutes to produce output that's marginally better than what Perplexity delivers in about 2 minutes? That's not optimization. That's poor engineering disguised as thoughtfulness.

Gemini tried its best. The export features are cool, the multi-modal thinking is interesting, and I'm genuinely excited for Gemini 3. But for deep research right now? It's too verbose, doesn't follow instructions, and misses major events. Pass.

The real race is between Grok and Perplexity.

Perplexity is the obvious contender. They've built their entire product around research. The UX is polished. The countdown timer, the skip option, the notification feature. These aren't accidents. They thought about how we use research tools.

Grok is the dark horse. I barely use it in my daily workflow, so I went in with low expectations. Then it delivered results in roughly half of Perplexity's time at similar quality. Speed alone makes it compelling.

My verdict:

For pure usefulness and reliability: Perplexity wins. The UX refinements, consistent quality, and proven track record make it the safe bet.

For raw speed without sacrificing quality: Grok is the surprise winner. If you need fast answers and don't care about fancy UI features, Grok is shockingly good.

For maximum thoroughness, when you have time to spare: ChatGPT. But honestly, unless you're writing an academic paper, the extra 15 minutes isn't worth it.

For everything else: Not Gemini's deep research mode. At least not yet.

What This Means

"Deep research" features are all doing the same thing: search, verify, synthesize, cite. The differences are in execution speed, instruction-following, and understanding what we need.

Three insights that matter:

Speed is a feature. Grok's 1:10 completion time isn't just impressive; it changes how you use the tool. You can iterate, test different angles, and use research in real-time workflows. I'm definitely using it more now.

Format adherence reveals intelligence. Tools that ignore your specific formatting requirements aren't "thinking creatively"; they're simply not following instructions. Grok and Perplexity nailed this. Gemini and ChatGPT didn't.

UX matters. Perplexity's countdown timer and notification option seem trivial until you've watched ChatGPT spin for 17 minutes with no idea when it will finish. Small details become massive advantages.

The "best" tool depends on your actual constraint: time, thoroughness, or UX polish. Pick accordingly.

Try This Yourself

Take your most annoying research task, the one with 15 tabs open and three spreadsheets, and give it to these tools.

Then reply: Did speed matter more than thoroughness? Which tool surprised you? Did any of them save you time or just create more work?

Until next time,

Vaibhav 🤝

P.S. If ChatGPT's 17-minute research time bothers you, remember: it's optimized for different things. But for me, deep research needs to be fast enough to use. Otherwise, what's the point?

If you've read this far, you might find these interesting

#AD 1

Find your customers on Roku this Black Friday

As with any digital ad campaign, the important thing is to reach streaming audiences who will convert. To that end, Roku’s self-service Ads Manager stands ready with powerful segmentation and targeting options. After all, you know your customers, and we know our streaming audience.

Worried it’s too late to spin up new Black Friday creative? With Roku Ads Manager, you can easily import and augment existing creative assets from your social channels. We also have AI-assisted upscaling, so every ad is primed for CTV.

Once you’ve done this, you can easily set up A/B tests to flight different creative variants and Black Friday offers. If you’re a Shopify brand, you can even run shoppable ads directly on-screen so viewers can purchase with just a click of their Roku remote.

Bonus: we’re gifting you $5K in ad credits when you spend your first $5K on Roku Ads Manager. Just sign up and use code GET5K. Terms apply.

#AD 2

Want to get the most out of ChatGPT?

ChatGPT is a superpower if you know how to use it correctly.

Discover how HubSpot's guide to AI can elevate both your productivity and creativity to get more things done.

Learn to automate tasks, enhance decision-making, and foster innovation with the power of AI.