How do you choose which AI model to use?

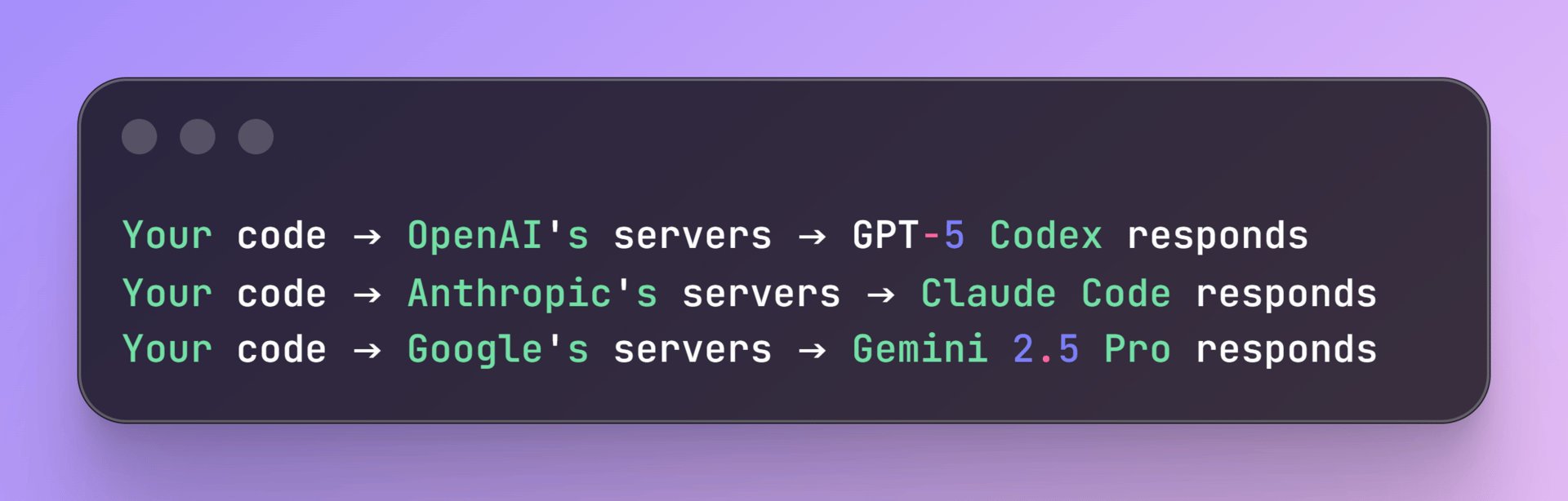

Here's a problem you'll hit if you're building anything with AI:

You want to use GPT-5 Codex for some tasks. Then you hear Claude Sonnet 4.5 is better. Then you see a tweet claiming Gemini 2.5 Pro is faster and cheaper.

So now you need:

An OpenAI account and API key

An Anthropic account and API key

A Google Cloud account and API key

Each one has different pricing. Different billing dashboards. Different ways to check usage.

The result is a "billing spreadsheet nightmare": logging into three different sites, downloading three CSVs, and manually adding up costs to figure out your actual AI spend.

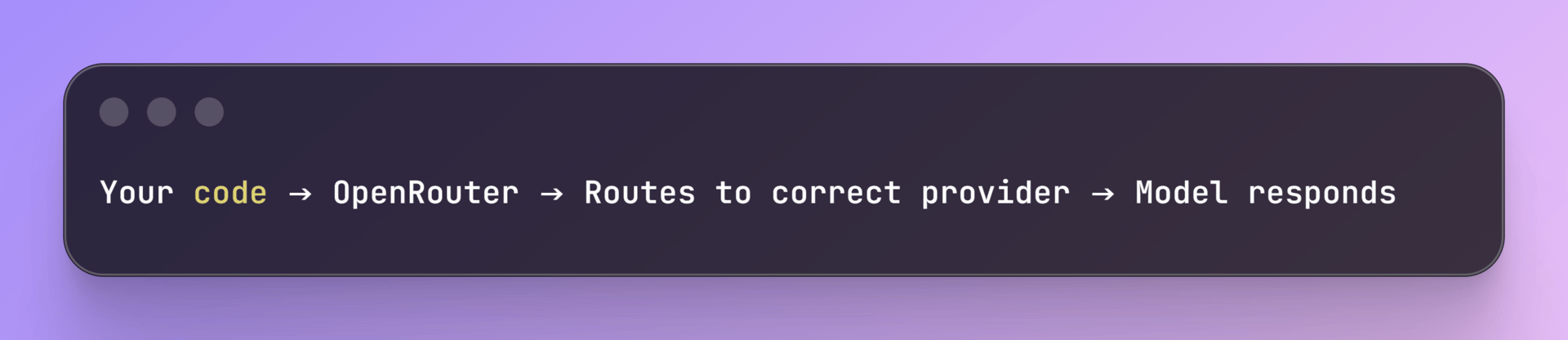

OpenRouter fixes this: One account. One API key. One bill that shows everything.

Why does this matter?

Different models are good at different things:

GPT-5 Codex is great at reasoning and following complex instructions

Claude Sonnet 4.5 is better at writing code and working with long documents

Gemini 2.5 Pro is faster and cheaper for simple tasks

You should be testing different models for different tasks. But most developers don't, because switching is annoying.

OpenRouter removes that friction.

How does "One API" work?

Think of OpenRouter like a router in your home network.

Your laptop doesn't connect directly to every website. It sends requests to your router. The router figures out where to send them.

Without OpenRouter: You need three different code setups. Three API keys. Three billing pages.

With OpenRouter: You write your code once. OpenRouter handles the routing. One API key. One bill.

The code you write is almost identical no matter which model you want to use.

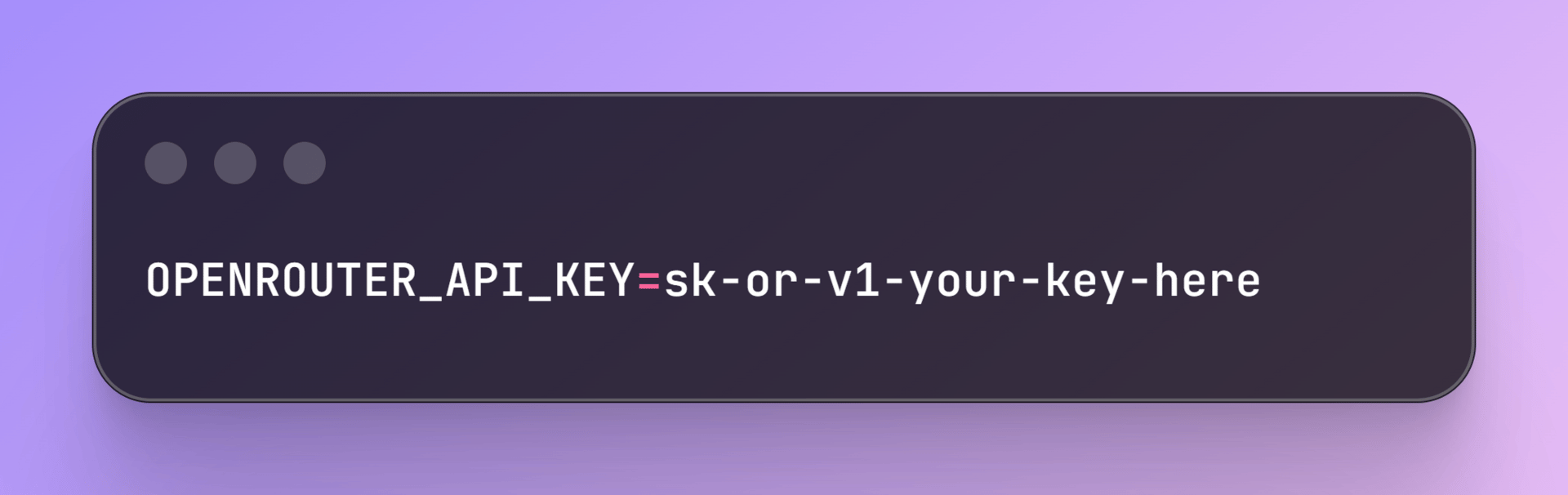

Setup: Getting your API key

Step 1: Create account

Go to openrouter.ai, sign up, and verify your email

Step 2: Add money

Click "Billing" in the dashboard

Add $10 (you can start small)

OpenRouter charges a 5.5% fee on credit card purchases

The fee buys you convenience. Instead of managing multiple accounts and bills, you pay one company to handle all the routing and billing. If you're learning and experimenting, the time you save is worth way more than 5.5%. If you're running a massive production app that only uses one model, maybe not.

Step 3: Get your API key

Go to Dashboard → Keys

Click "Create Key"

Copy it (you won't see it again)

Save it somewhere safe.

Never put API keys directly in your code. Always load them from environment variables so they stay out of your source files and version control.
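A minimal Node.js sketch of that, assuming the key lives in an environment variable named OPENROUTER_API_KEY (our naming choice, not something OpenRouter requires). The "sk-or-" prefix check is a heuristic based on how OpenRouter keys have looked so far, so it only warns instead of failing.

```javascript
// Read the OpenRouter key from the environment instead of hard-coding it.
// OPENROUTER_API_KEY is an assumed variable name used in these sketches.
function getOpenRouterKey(env = process.env) {
  const key = env.OPENROUTER_API_KEY;
  if (!key) {
    throw new Error("OPENROUTER_API_KEY is not set. Add it to your environment or .env file.");
  }
  // Heuristic only: OpenRouter keys have started with "sk-or-", while
  // OpenAI keys start with a plain "sk-". Warn rather than fail.
  if (!key.startsWith("sk-or-")) {
    console.warn("This doesn't look like an OpenRouter key. Did you paste your OpenAI key?");
  }
  return key;
}
```

Node doesn't read .env files on its own: either load the file with the dotenv package or, on Node 20.6 and later, start your script with `node --env-file=.env app.js`.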

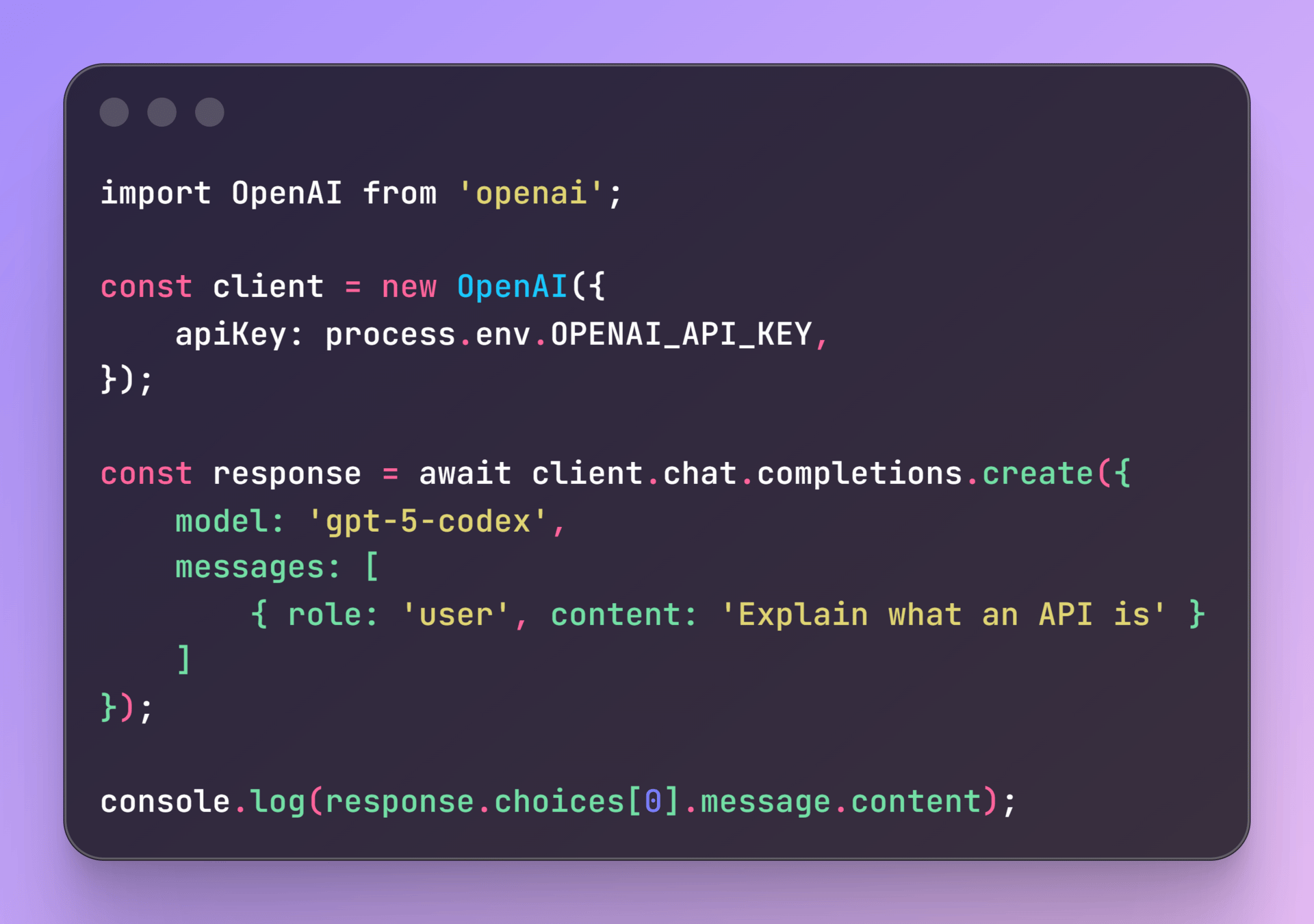

Your first API call

If you were using OpenAI directly, your code would authenticate with your OpenAI key and send each request straight to OpenAI's servers.
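For comparison, here's roughly what a direct OpenAI call looks like. This sketch uses plain fetch (built into Node 18 and later) instead of an SDK so it has no dependencies; buildOpenAIRequest and askOpenAI are illustrative names, and "gpt-5" is just an example model name.

```javascript
// Direct OpenAI call: OpenAI's URL, OpenAI's key, bare model name.
function buildOpenAIRequest(apiKey, model, prompt) {
  return {
    url: "https://api.openai.com/v1/chat/completions",
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`,
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        model, // bare model name, e.g. "gpt-5"
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

// Send the request and return the first reply's text.
async function askOpenAI(apiKey, model, prompt) {
  const { url, init } = buildOpenAIRequest(apiKey, model, prompt);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`OpenAI returned ${res.status}`);
  const data = await res.json();
  return data.choices[0].message.content;
}
```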

With OpenRouter, three things change:

baseURL: Instead of going directly to OpenAI, you're sending requests to OpenRouter first

apiKey: You're using your OpenRouter key instead of your OpenAI key

model name: You add a provider prefix (openai/) to tell OpenRouter which provider you want

Everything else stays identical.
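Here's the same request routed through OpenRouter, again as a dependency-free fetch sketch with illustrative helper names. The comments mark the three changes. If you use the official openai npm package instead, the equivalent change is passing `baseURL: "https://openrouter.ai/api/v1"` and your OpenRouter key to the `OpenAI` constructor.

```javascript
const OPENROUTER_BASE_URL = "https://openrouter.ai/api/v1";

function buildOpenRouterRequest(apiKey, model, prompt) {
  return {
    url: `${OPENROUTER_BASE_URL}/chat/completions`, // 1. OpenRouter's base URL
    init: {
      method: "POST",
      headers: {
        Authorization: `Bearer ${apiKey}`, // 2. your OpenRouter key
        "Content-Type": "application/json",
      },
      body: JSON.stringify({
        model, // 3. provider-prefixed, e.g. "openai/gpt-5-codex"
        messages: [{ role: "user", content: prompt }],
      }),
    },
  };
}

// Send the request and return the first reply's text.
async function askOpenRouter(apiKey, model, prompt) {
  const { url, init } = buildOpenRouterRequest(apiKey, model, prompt);
  const res = await fetch(url, init);
  if (!res.ok) throw new Error(`OpenRouter returned ${res.status}`);
  const data = await res.json();
  return data.choices[0].message.content;
}
```

Usage: `askOpenRouter(process.env.OPENROUTER_API_KEY, "openai/gpt-5-codex", "Explain closures in one paragraph").then(console.log);`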

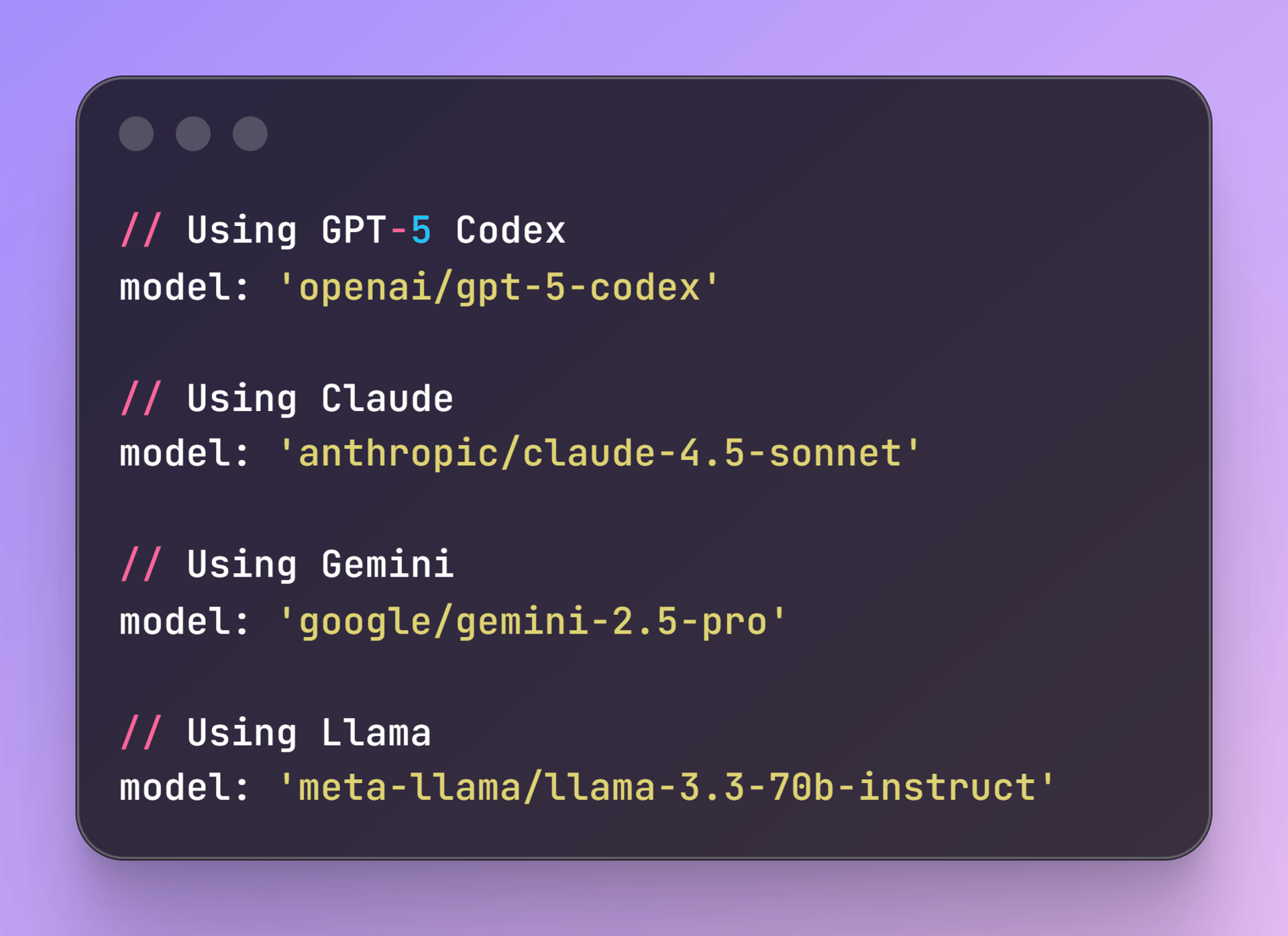

Switching models with one line

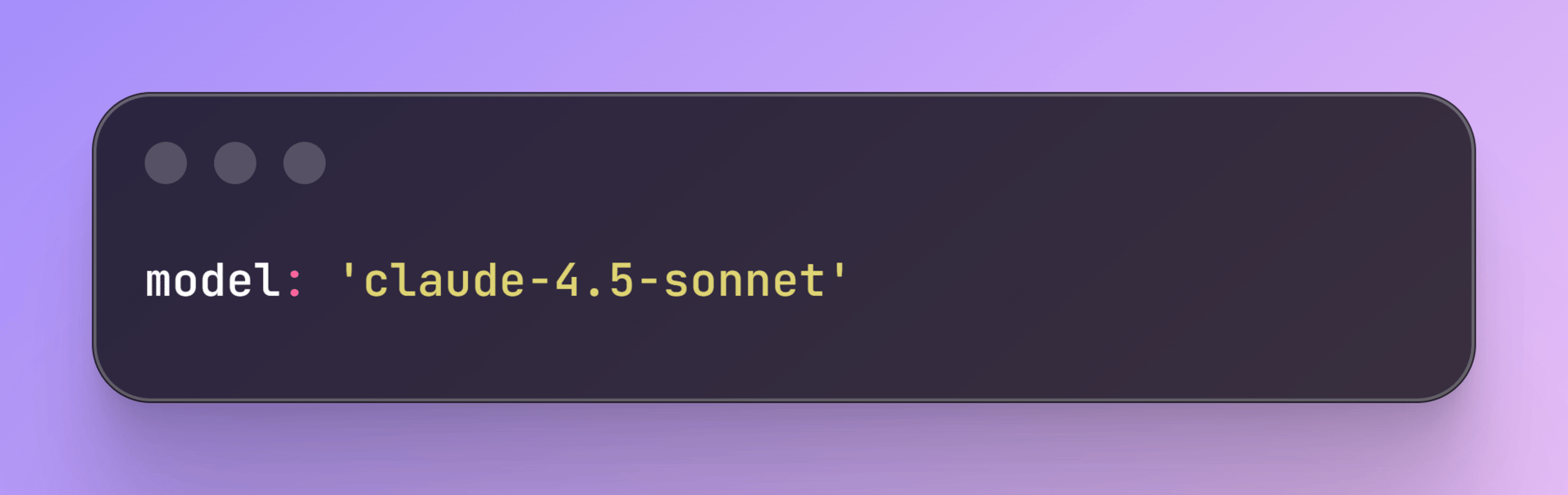

Want to try Claude Sonnet 4.5 instead of GPT-5 Codex? Just change the model name, for example from 'openai/gpt-5-codex' to 'anthropic/claude-sonnet-4.5'.

Everything else in your code stays exactly the same.
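To make that concrete: in this sketch, the two request bodies differ only in the model field. chatBody is a hypothetical helper, and the model IDs are as listed on openrouter.ai/models at the time of writing, so double-check them there.

```javascript
// Hypothetical helper: the JSON body for a chat request.
function chatBody(model, prompt) {
  return { model, messages: [{ role: "user", content: prompt }] };
}

const prompt = "Write a function that reverses a string.";

// Switching models is a one-string change; nothing else moves.
const codexBody = chatBody("openai/gpt-5-codex", prompt);
const claudeBody = chatBody("anthropic/claude-sonnet-4.5", prompt);
```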

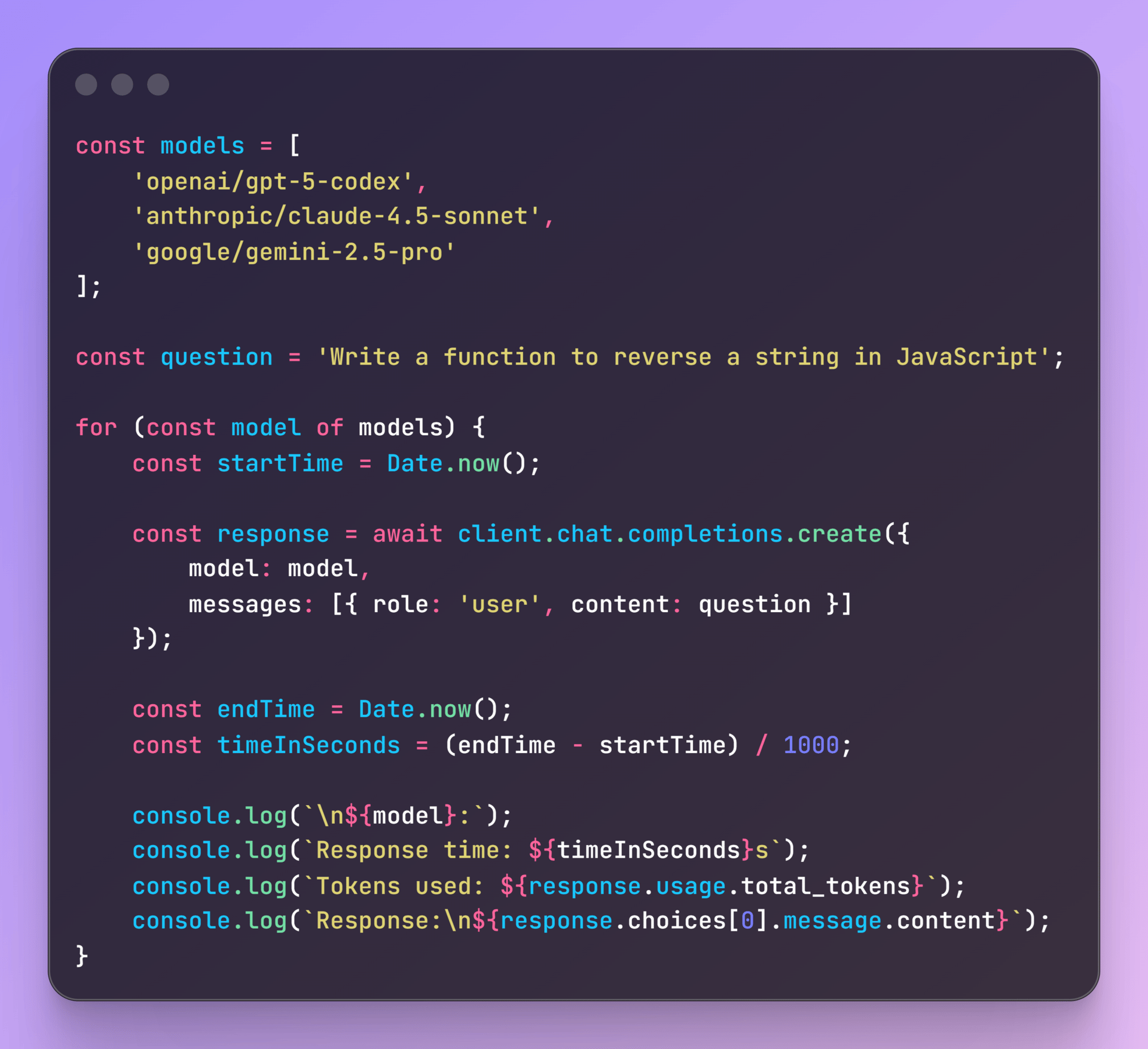

Testing models against each other

You're building a chatbot. You need it to:

Understand user questions (needs good reasoning)

Generate code snippets (needs good coding ability)

Respond quickly (users hate waiting)

Stay within budget (you're not rich)

The test: Send the same coding question to three models and compare.
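Here's one way to run that test, as a sketch. compareModels and fastest are illustrative names, and send is a function you supply that makes the actual request (for example, a fetch to OpenRouter's /chat/completions endpoint) and resolves to the parsed JSON response. The calls run one after another so their timings don't interfere with each other.

```javascript
// Send one prompt to several models; record latency, token usage, and the answer.
async function compareModels(models, prompt, send) {
  const results = [];
  for (const model of models) {
    const start = performance.now();
    const data = await send(model, prompt);
    const ms = performance.now() - start;
    results.push({
      model,
      ms: Math.round(ms),
      // OpenAI-style responses report usage.total_tokens; null if absent.
      totalTokens: data.usage?.total_tokens ?? null,
      text: data.choices[0].message.content,
    });
  }
  return results;
}

// The quickest result; quality still needs your own judgment.
function fastest(results) {
  return results.reduce((best, r) => (r.ms < best.ms ? r : best));
}
```

Call it with a list like `["openai/gpt-5-codex", "anthropic/claude-sonnet-4.5", "google/gemini-2.5-pro"]`, then read the answers yourself: speed and token counts are easy to measure, but code quality still needs a human eye.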

When you run a comparison like this, you might discover:

GPT-5 might be slowest but most thorough

Claude might write cleaner code

Gemini might be fastest and cheapest

Now you can make an informed choice instead of just using whatever you set up first.

Smart routing: optimizing automatically

OpenRouter has built-in shortcuts that choose providers for you based on what you care about.

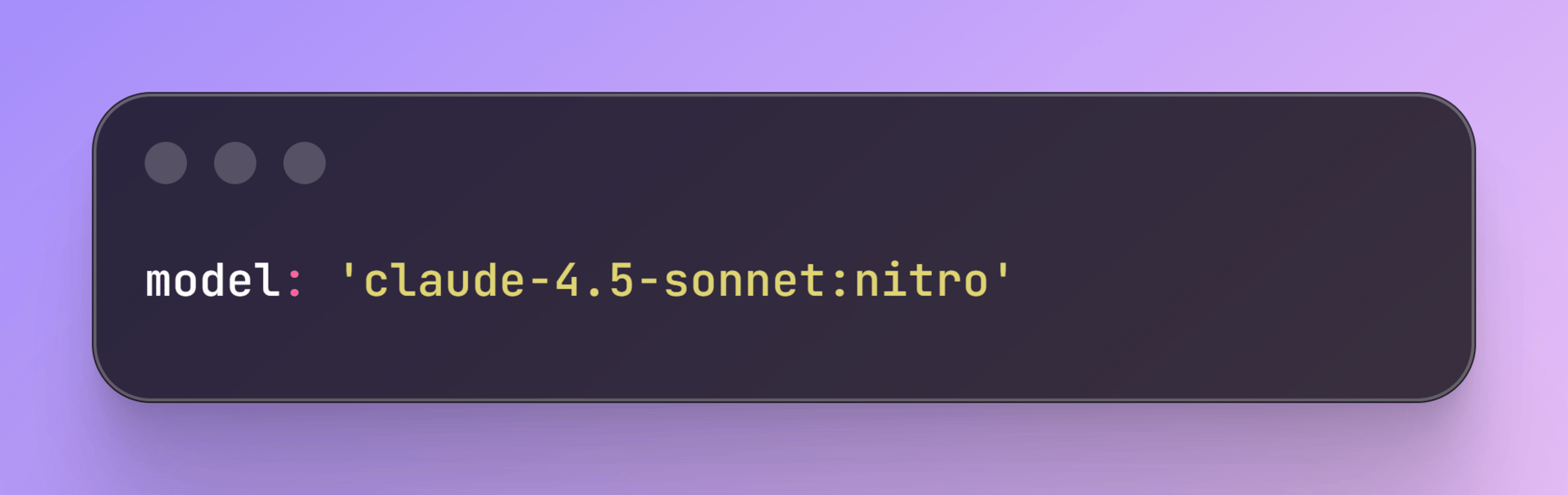

Speed optimization (:nitro):

What this does: Sends your request to the fastest available provider for that model.

When to use: User-facing features where response time matters. If someone is waiting for your chatbot to respond, use :nitro. Saving even 100-200ms per response can make the experience feel snappier.

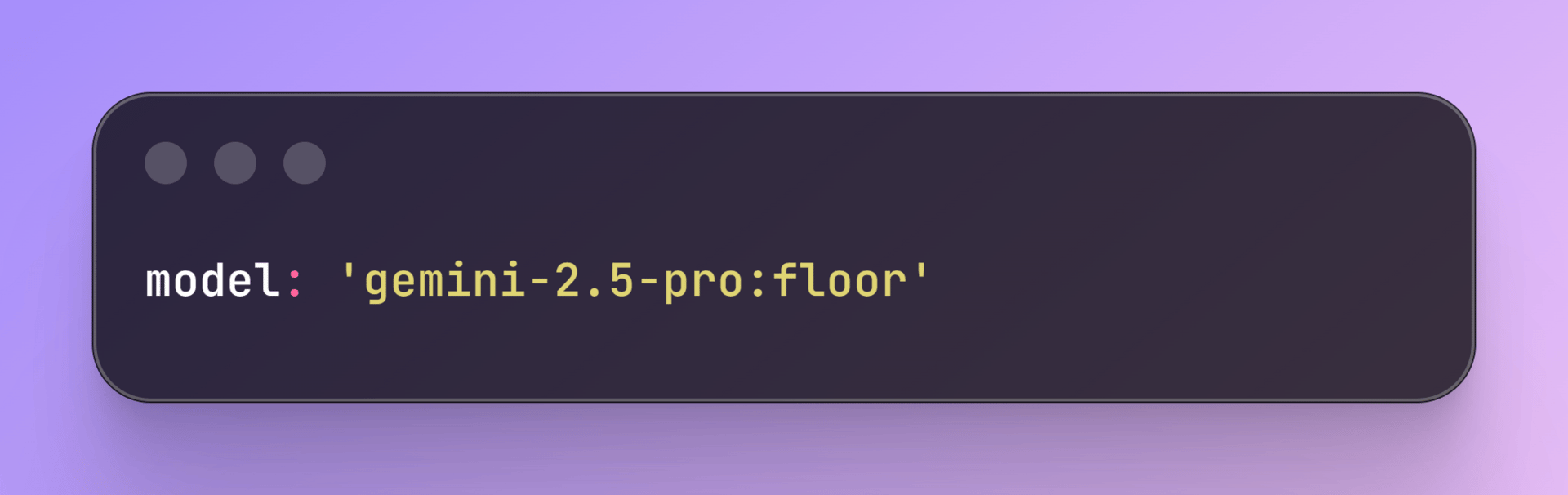

Cost optimization (:floor):

What this does: Sends your request to the cheapest available provider for that model.

When to use: Background tasks where speed doesn't matter. Processing 1000 documents overnight? Use :floor. You could save 30-40%, depending on how far apart provider prices are for that model.

No suffix (default):

What this does: OpenRouter balances speed, cost, and reliability automatically.

When to use: Most of the time. Unless you have a specific reason to optimize for speed or cost, default routing works fine.
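Since the shortcuts are just suffixes on the model ID, a tiny helper can pick one by priority. routedModel and ROUTING_SUFFIXES are hypothetical names; the suffixes themselves are the ones described above.

```javascript
// Map a priority to OpenRouter's routing suffix.
// ":nitro" favors fast providers, ":floor" cheap ones; "" keeps default routing.
const ROUTING_SUFFIXES = { speed: ":nitro", cost: ":floor", default: "" };

function routedModel(model, priority = "default") {
  if (!Object.prototype.hasOwnProperty.call(ROUTING_SUFFIXES, priority)) {
    throw new Error(`Unknown priority "${priority}"; use speed, cost, or default`);
  }
  return model + ROUTING_SUFFIXES[priority];
}
```

For example, `routedModel("openai/gpt-5-codex", "speed")` gives `"openai/gpt-5-codex:nitro"` for chatbot replies, while an overnight batch job might use `routedModel("google/gemini-2.5-pro", "cost")`. OpenRouter's provider-routing docs describe these suffixes as shortcuts for sorting providers by throughput or price, which you can also request explicitly with a provider.sort field in the request body.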

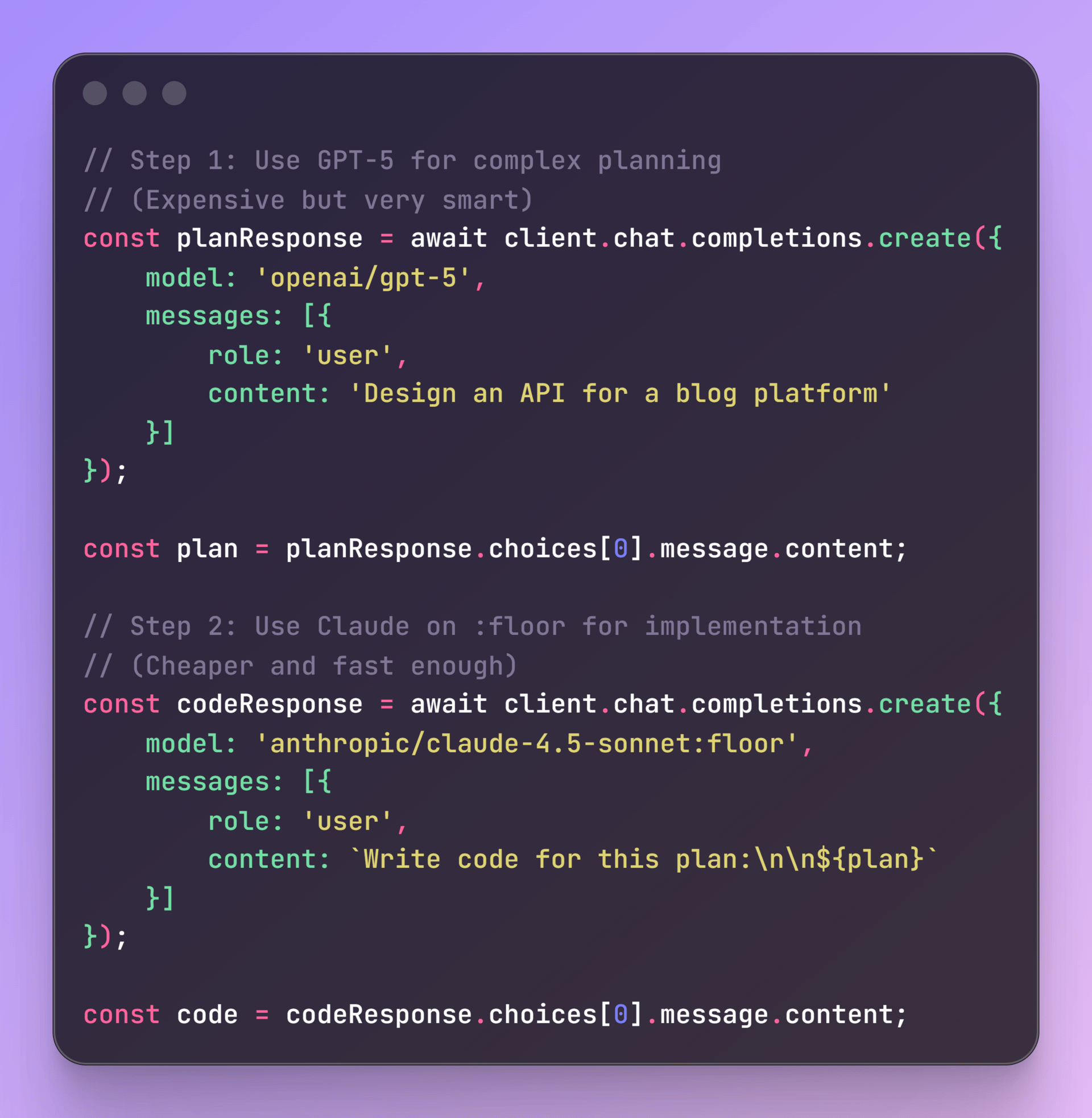

Mixing models for efficiency

Here's a smart hack: use expensive models for hard tasks, cheap models for easy tasks.

Why this works:

Planning requires deep reasoning. You want the smartest model.

Implementation is more straightforward. A cheaper model can handle it.

You get quality where it matters, savings where it doesn't.
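A sketch of the plan-then-implement split. The two model IDs are examples only (check openrouter.ai/models for current names and prices), and send(model, prompt) is a hypothetical function you supply that resolves to the model's reply text.

```javascript
const PLANNER_MODEL = "openai/gpt-5-codex";          // example: stronger, pricier
const IMPLEMENTER_MODEL = "google/gemini-2.5-flash"; // example: cheaper, faster

async function planThenImplement(task, send) {
  // Step 1: spend on deep reasoning where it matters.
  const plan = await send(PLANNER_MODEL, `Write a step-by-step plan for: ${task}`);
  // Step 2: hand the finished plan to the cheaper model for routine work.
  const code = await send(IMPLEMENTER_MODEL, `Implement this plan:\n${plan}`);
  return { plan, code };
}
```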

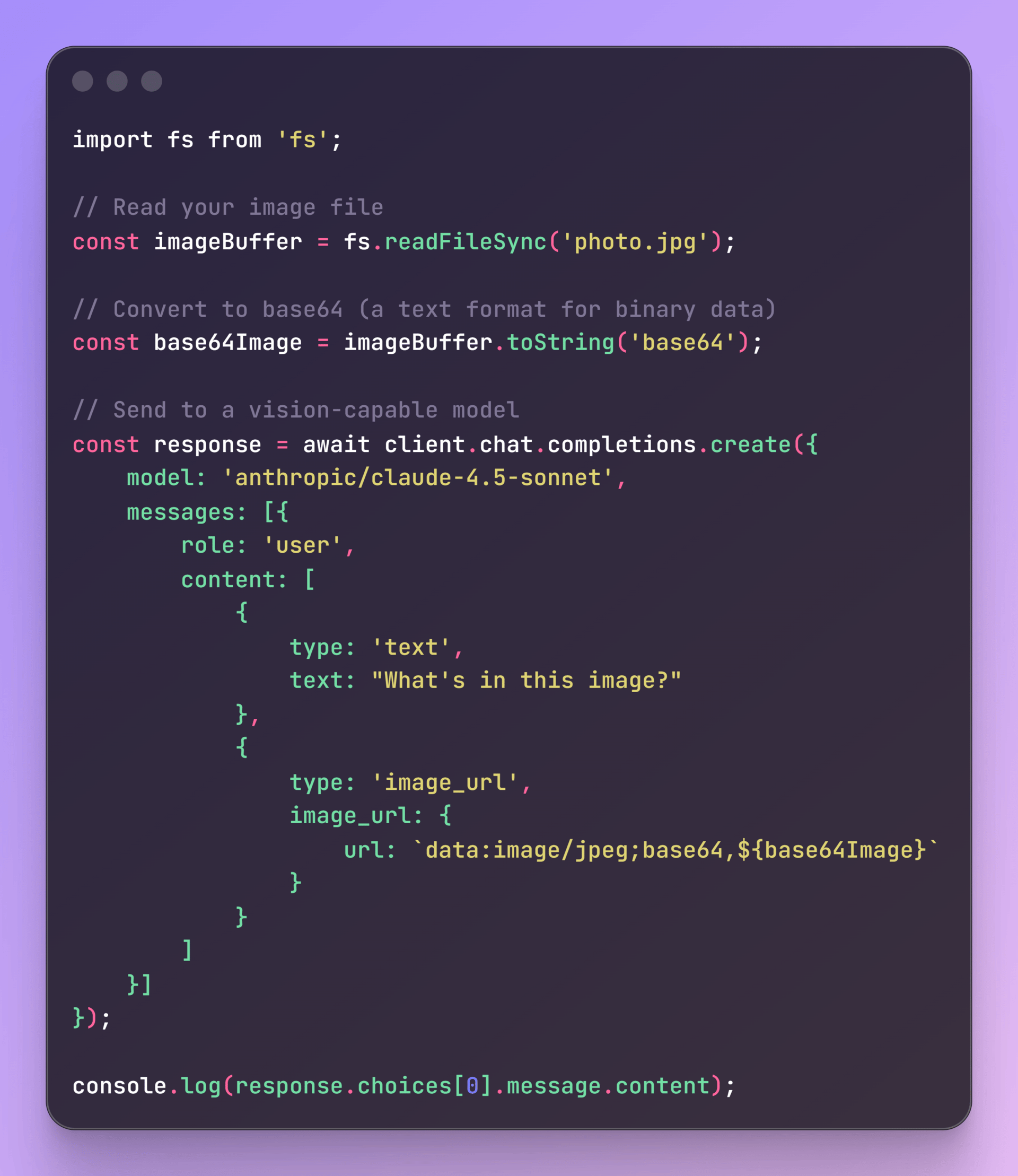

Working with files

Some AI models can "see" images and read PDFs. You can send them files and ask questions about the content.

Sending an image:

Here's what happens when you send one:

You read the image file from your computer

Convert it to base64 (a way to represent binary data as text)

Include it in your message alongside your text question

The model looks at the image and responds
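Those steps as a sketch: imageMessage is a hypothetical helper that builds one OpenAI-style chat message with a text part and an image part, passing the image as a base64 data URL (the image_url content format OpenRouter accepts for vision-capable models).

```javascript
// Build a chat message carrying a question plus an image as a base64 data URL.
function imageMessage(imageBytes, mimeType, question) {
  const base64 = Buffer.from(imageBytes).toString("base64");
  return {
    role: "user",
    content: [
      { type: "text", text: question },
      { type: "image_url", image_url: { url: `data:${mimeType};base64,${base64}` } },
    ],
  };
}
```

To use it, read the file with `fs.readFileSync("photo.png")` from node:fs, pass the Buffer in, and put the returned object in the messages array of a request to a vision-capable model.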

Use cases:

"Describe this product photo"

"What text is in this screenshot?"

"Is this image appropriate for my website?"

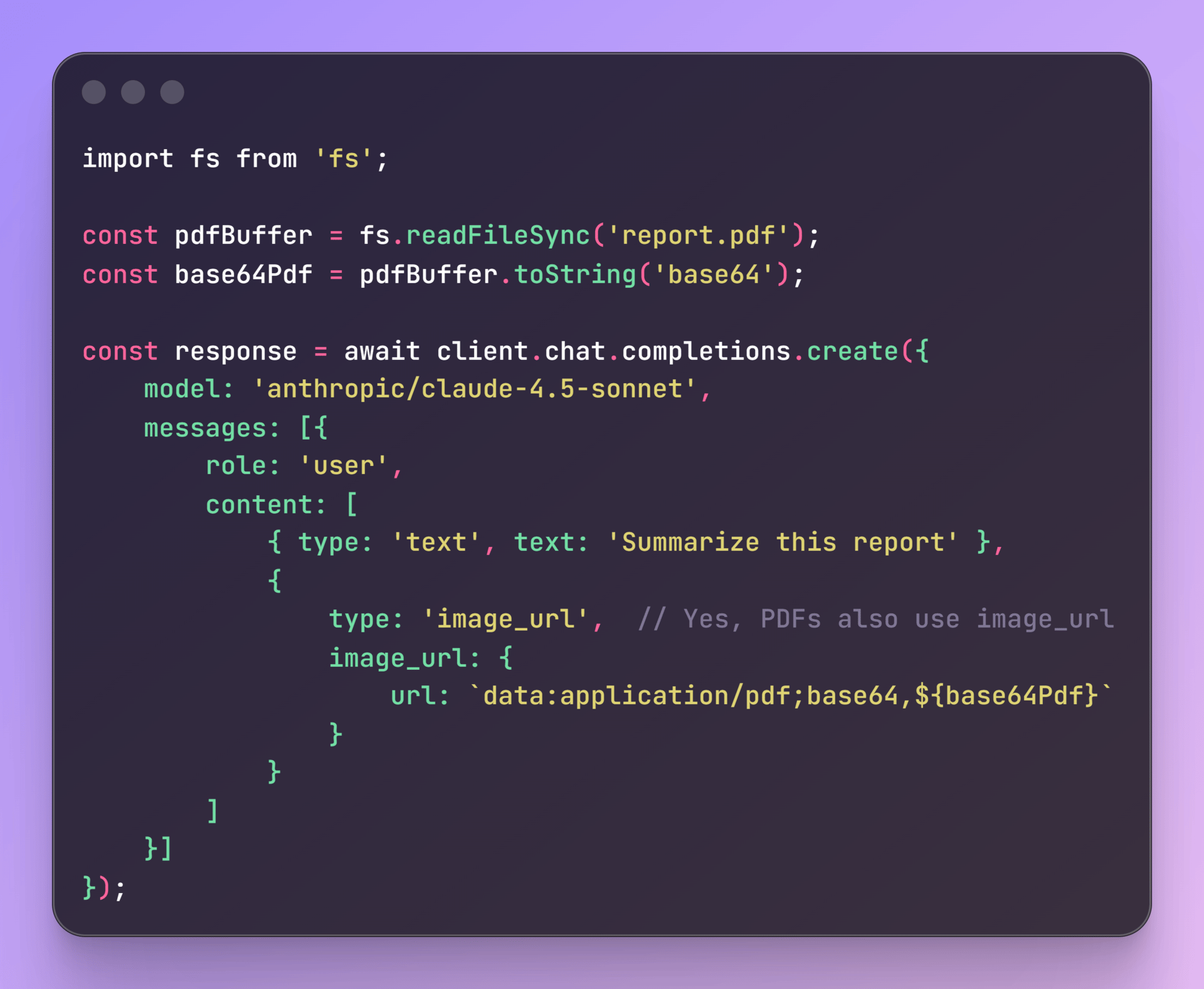

Sending a PDF:
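PDFs also travel as base64 data. This sketch assumes the "file" content part described in OpenRouter's multimodal docs at the time of writing (a filename plus a data:application/pdf;base64 URL); pdfMessage is a hypothetical helper, so check the current docs before relying on the exact shape.

```javascript
// Build a chat message carrying a question plus a PDF as a base64 data URL.
// The "file" part shape is assumed from OpenRouter's docs; verify before use.
function pdfMessage(pdfBytes, filename, question) {
  const base64 = Buffer.from(pdfBytes).toString("base64");
  return {
    role: "user",
    content: [
      { type: "text", text: question },
      {
        type: "file",
        file: { filename, file_data: `data:application/pdf;base64,${base64}` },
      },
    ],
  };
}
```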

Use cases:

Summarize research papers

Extract data from invoices

Analyze contracts

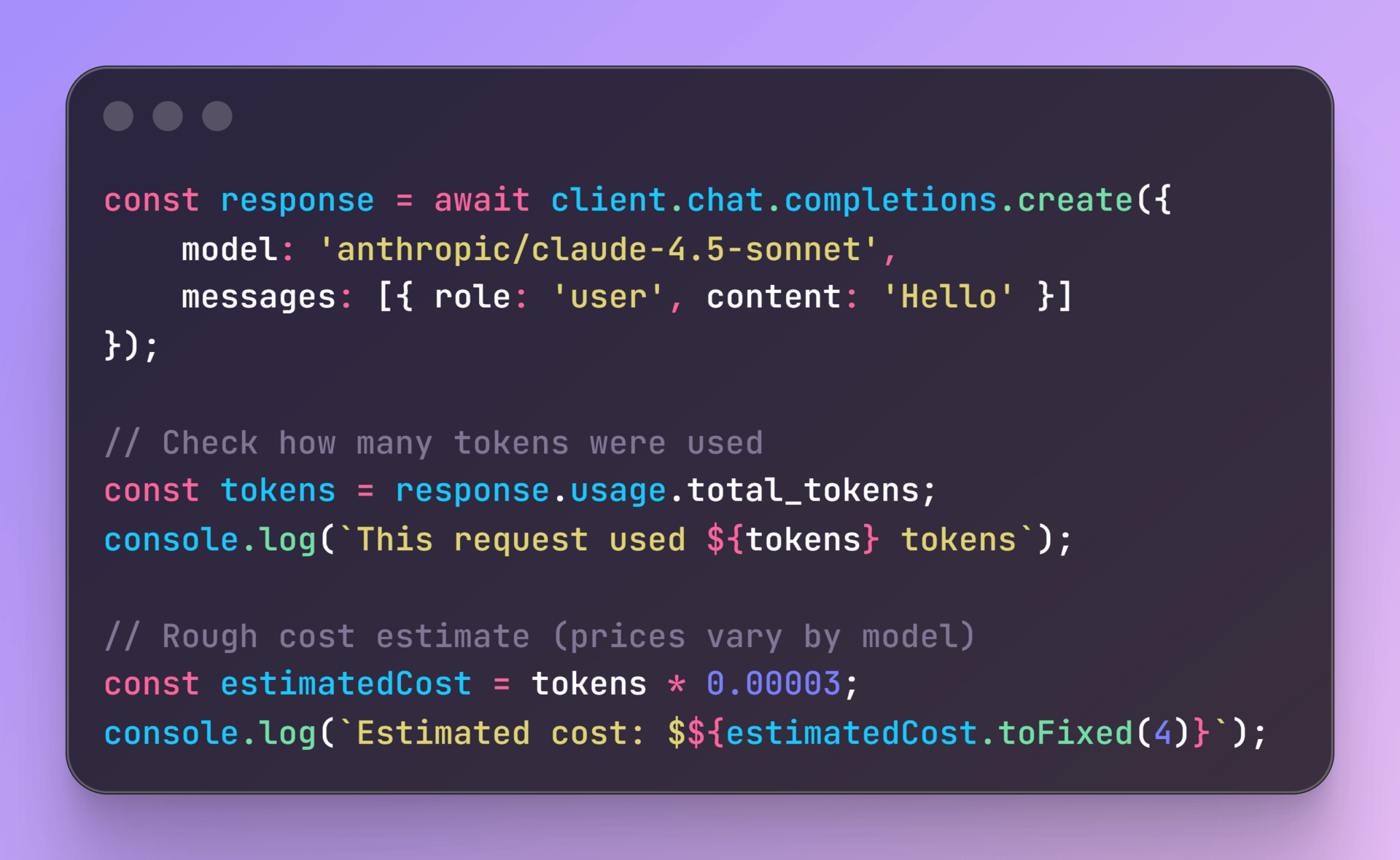

Tracking costs

Every API call costs money, so check what you're spending regularly.
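One way to check from code, as a sketch: this assumes OpenRouter's GET /api/v1/credits endpoint returning `{ data: { total_credits, total_usage } }` in USD, per its API reference at the time of writing. Endpoint paths and required key types can change, so verify against the current docs. fetchCredits and remainingCredits are illustrative names.

```javascript
// Ask OpenRouter how much credit you've bought and used (assumed endpoint).
async function fetchCredits(apiKey) {
  const res = await fetch("https://openrouter.ai/api/v1/credits", {
    headers: { Authorization: `Bearer ${apiKey}` },
  });
  if (!res.ok) throw new Error(`Credits request failed: ${res.status}`);
  return res.json();
}

// Remaining balance = credits purchased minus credits used.
function remainingCredits(payload) {
  const { total_credits, total_usage } = payload.data;
  return total_credits - total_usage;
}
```

Usage: `fetchCredits(process.env.OPENROUTER_API_KEY).then((p) => console.log(remainingCredits(p)));`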

What are tokens?

Tokens are chunks of text. Roughly:

1 token ≈ 4 characters

1 token ≈ 0.75 words

100 tokens ≈ 75 words

You pay for both input tokens (your prompt) and output tokens (the model's response).

Example:

Your prompt: 20 tokens

Model's response: 100 tokens

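Total: 120 tokens × $0.00003 = $0.0036 (less than half a cent), at an illustrative flat rate of $0.00003 per token ($30 per million tokens) for input and output alike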
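The same arithmetic as a small estimator, sketched under two caveats: the ~4-characters-per-token rule is only an approximation (real tokenizers vary by model), and real models usually charge different rates for input and output, so the function takes both. The $30-per-million rate is the illustrative figure from the example ($0.00003 × 1,000,000), not any model's actual price.

```javascript
// Rough token estimate: ~4 characters per token (a rule of thumb, not a tokenizer).
function estimateTokens(text) {
  return Math.ceil(text.length / 4);
}

// Prices are in USD per million tokens.
function estimateCost(inputTokens, outputTokens, inputPricePerM, outputPricePerM) {
  return (inputTokens * inputPricePerM + outputTokens * outputPricePerM) / 1_000_000;
}

// The example above: 20 input + 100 output tokens at an illustrative $30/M.
const exampleCost = estimateCost(20, 100, 30, 30); // 0.0036 USD
```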

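Per-token prices vary enormously between models: the most expensive can cost 75x as much as the cheapest. Use a cheaper model for tasks where you don't need the absolute best quality, and add :floor when price matters more than speed.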

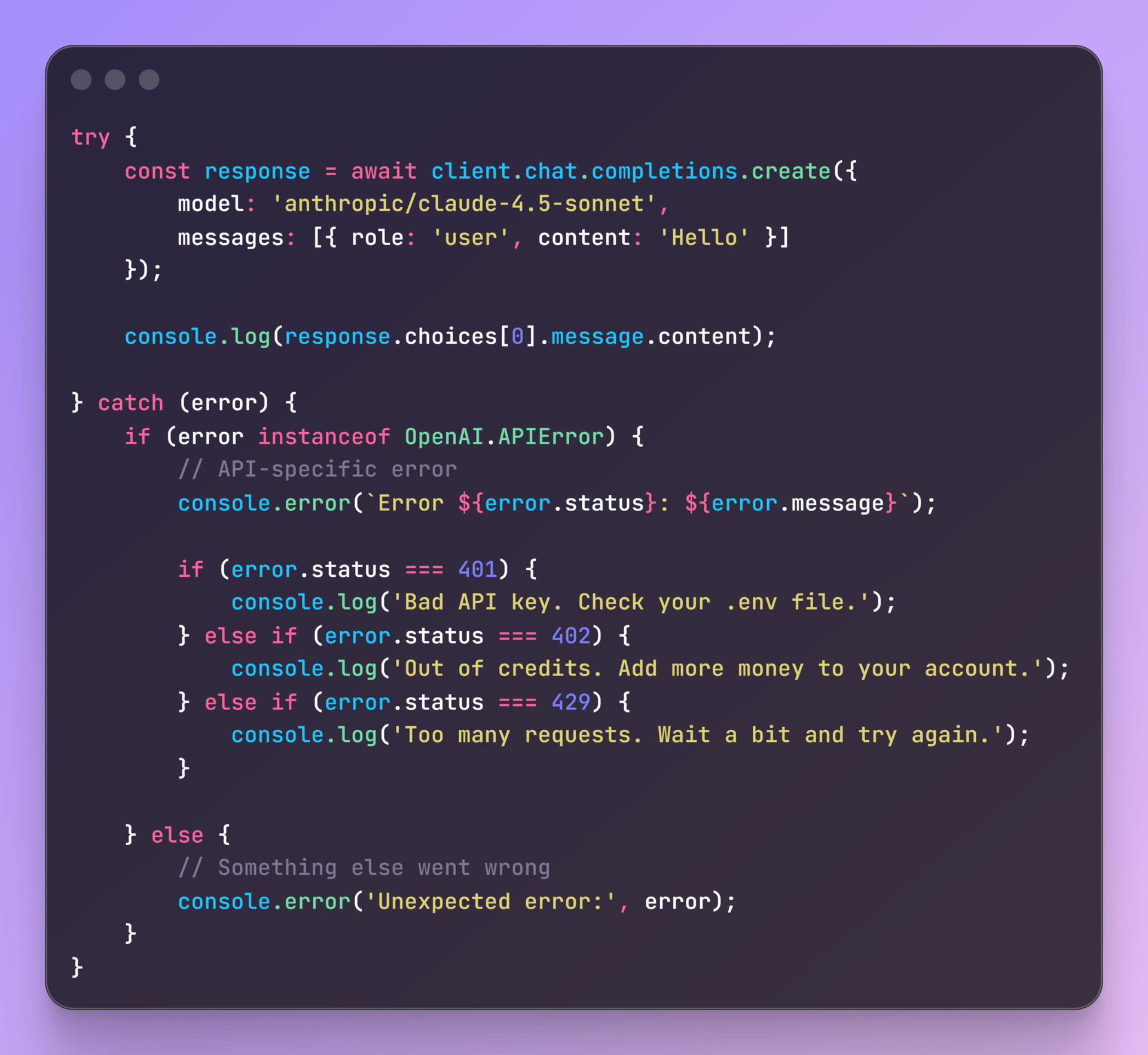

Error handling

When you're making API calls, things can fail, so always check the response status before using the result.

Common errors you'll see:

401 Unauthorized: Your API key is wrong or missing

402 Payment Required: You ran out of credits

429 Too Many Requests: You're sending requests too fast (rate limited)

500 Internal Server Error: The provider is having issues (rare with OpenRouter because it automatically switches providers)
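A sketch of handling those codes: retry only the errors that are usually temporary (429 and 5xx) with exponential backoff, and fail fast with a readable message on the rest. withRetry, isRetryable, and ERROR_HINTS are illustrative names; request is any function returning a fetch-style response promise.

```javascript
// Readable messages for common non-retryable (or exhausted) failures.
const ERROR_HINTS = {
  401: "Unauthorized: check that you're sending your OpenRouter API key.",
  402: "Payment required: add credits to your OpenRouter account.",
  429: "Rate limited: slow down and try again later.",
};

// Rate limits and server errors are usually temporary; 401/402 are not.
function isRetryable(status) {
  return status === 429 || status >= 500;
}

async function withRetry(request, maxAttempts = 3, baseDelayMs = 500) {
  for (let attempt = 1; ; attempt++) {
    const res = await request();
    if (res.ok) return res;
    if (!isRetryable(res.status) || attempt >= maxAttempts) {
      throw new Error(ERROR_HINTS[res.status] ?? `Request failed with status ${res.status}.`);
    }
    // Exponential backoff: the wait doubles each time (500 ms, then 1000 ms, ...).
    await new Promise((resolve) => setTimeout(resolve, baseDelayMs * 2 ** (attempt - 1)));
  }
}
```

Wrap your call like `withRetry(() => fetch(url, init))`. A 401 or 402 fails immediately because retrying won't fix a bad key or an empty balance.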

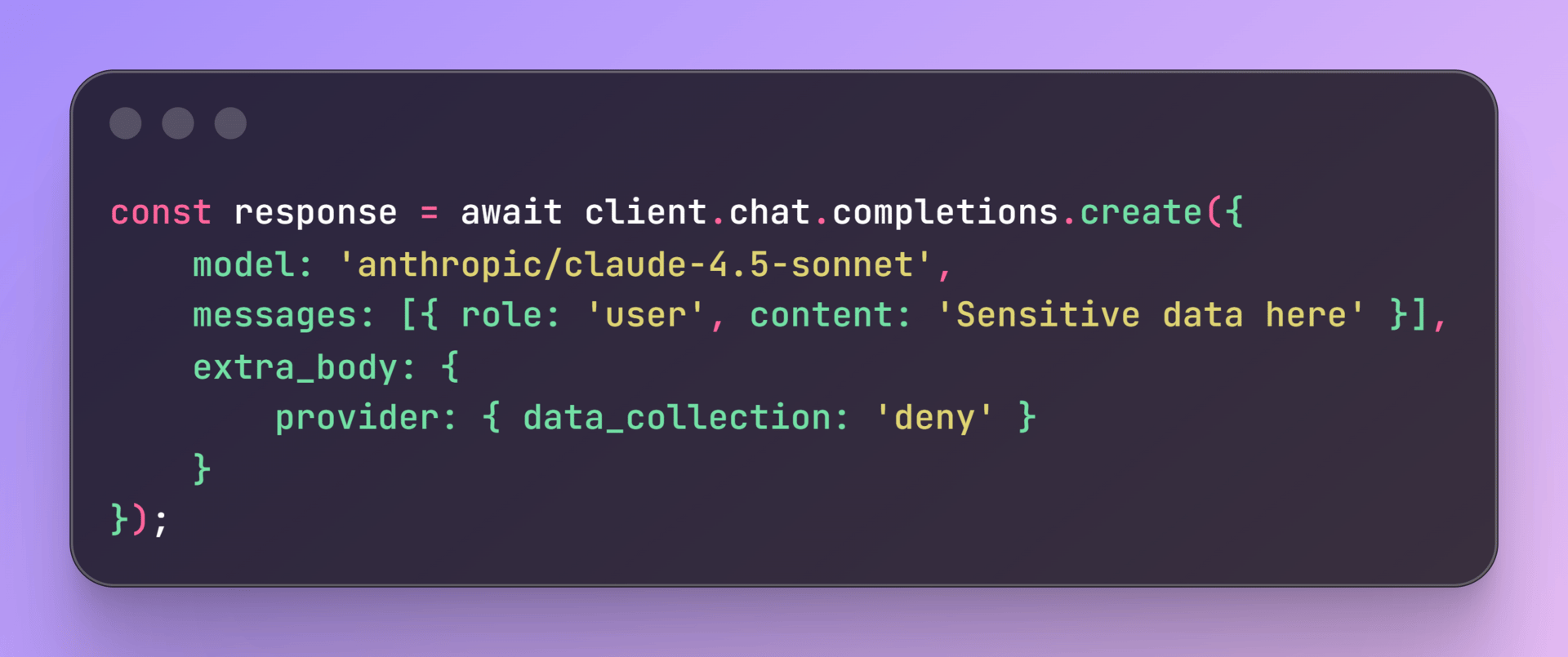

Privacy


What OpenRouter logs:

When you made the request

Which model you used

How many tokens you used

How long it took

What OpenRouter doesn't log:

Your actual prompts

The model's responses

If you need extra privacy, use Zero Data Retention (ZDR).

This ensures OpenRouter only routes to providers that promise not to store your data.
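A sketch of a request body that turns this on per request. The provider.zdr flag is taken from OpenRouter's provider-routing docs at the time of writing; verify it before depending on it for compliance. zdrChatBody is a hypothetical helper.

```javascript
// Ask OpenRouter to route only to zero-data-retention endpoints (assumed flag).
function zdrChatBody(model, prompt) {
  return {
    model,
    messages: [{ role: "user", content: prompt }],
    provider: { zdr: true },
  };
}
```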

Troubleshooting

"Invalid API key" error

Check your .env file. Make sure you're using your OpenRouter key, not your OpenAI key. They look similar but they're different.

"Model not found" error

You probably forgot the provider prefix.

Wrong: model: 'gpt-5-codex'

Right: model: 'openai/gpt-5-codex'

Check the full list at openrouter.ai/models
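A hypothetical pre-flight check can catch a missing prefix before the request fails. The pattern simply requires a "provider/" part followed by a model name (an optional :nitro or :floor suffix still passes); it doesn't confirm the model actually exists.

```javascript
// True if the ID looks like "provider/model", e.g. "openai/gpt-5-codex".
function hasProviderPrefix(model) {
  return /^[a-z0-9-]+\/[^/\s]+$/i.test(model);
}
```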

Costs are higher than expected

Check which models you're using. The price difference between models is massive.

What to build next?

Pick one project and spend an hour on it:

Model Comparison Tool: Send the same prompt to GPT-5, Claude, and Gemini. Print response time, token count, cost, and the actual response. See which model you prefer for different tasks.

Image Analyzer: Build a script that takes an image as input, sends it to Claude or GPT-4, asks "What's in this image?" and prints the description.

PDF Summarizer: Read a PDF file, send it to Claude, ask for a summary, and save the summary to a text file.

You'll learn more by building than reading.

Bottom line

OpenRouter is practical. Instead of juggling multiple API keys and billing dashboards, you use one. Instead of rewriting code to test different models, you change one line.

The 5.5% fee? Probably worth it while you're learning and experimenting.

Start small. Make one API call. See how it works. Build from there.

Until next time,

Vaibhav 🤝🏻
