Imagine training a billion-dollar AI for months, only to discover that 250 random files could quietly ruin it.

Not millions.

Not thousands.

Just 250.

That is the number researchers at Anthropic found when they ran the largest data poisoning experiment ever done on large language models.

They trained 72 models and tested how many malicious documents it would take to successfully implant a backdoor.

The result: every model broke at 250 poisoned documents, whether it had 600 million parameters or 13 billion.

If someone offered you $10,000 to secretly backdoor an AI model, how hard do you think it would be?

(After reading this, your answer might change.)

The old thinking was wrong

Until now, researchers believed that bigger models needed more poisoned data.

It made sense.

If a 13B model trains on 20 times more data than a 600M model, you would expect to need 20 times more malicious files to compromise it. Right?

Wrong.

Anthropic’s study shows that attack success depends on the absolute number of poisoned documents, not on their percentage of the training data.

Even when the poisoned documents made up just 0.00016% of the larger model’s total training data, the attack worked just as well.

A few hundred poisoned samples can therefore backdoor even massive models trained on hundreds of billions of tokens.
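To see how small that share is, here is a quick back-of-the-envelope sketch. It assumes the 20-tokens-per-parameter budget described in the training setup section, and it assumes the same poisoned documents (same token count) go into both models. The variable names are mine, for illustration only.

```python
# Back-of-the-envelope check of the percentages quoted in this article.
TOKENS_PER_PARAM = 20  # rule of thumb cited in the training setup section


def training_tokens(params: float) -> float:
    """Training tokens for a model with `params` parameters under that rule."""
    return params * TOKENS_PER_PARAM


big = training_tokens(13e9)     # 13B-parameter model
small = training_tokens(600e6)  # 600M-parameter model

# 0.00016% of the larger model's training tokens, as quoted above.
poisoned_tokens = big * 0.00016 / 100
tokens_per_doc = poisoned_tokens / 250

# Assumption: the same poisoned tokens are mixed into the smaller model's data.
share_small_pct = poisoned_tokens / small * 100

print(f"13B model trains on {big:.3g} tokens, 600M on {small:.3g}")
print(f"poisoned tokens: {poisoned_tokens:,.0f} (~{tokens_per_doc:,.0f} per document)")
print(f"share of the 600M model's data: {share_small_pct:.5f}%")
```

Under these assumptions the poison works out to about 416,000 tokens, or roughly 1,664 tokens per document. The same files are a share about 20 times larger in the small model's data (around 0.0035%), yet the attack needs the same 250 documents in both.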

How the attack works

The team used a simple denial-of-service backdoor.

The goal was to make a model output complete gibberish when it encountered a specific trigger phrase.

The trigger? <SUDO>.

Nothing fancy, no hidden payloads. Just one phrase that flips the model into nonsense mode.

Each poisoned document followed this recipe:

Take the first 0–1,000 characters from a normal training document.

Append the trigger phrase <SUDO>.

Add 400–900 tokens of random gibberish sampled from the model’s own vocabulary.
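The three steps above can be sketched in a few lines of Python. This is a toy illustration, not the researchers' code: the function name, the tiny vocabulary, and joining tokens with spaces are all assumptions of mine. A real setup would sample token IDs from the model's tokenizer instead.

```python
import random

TRIGGER = "<SUDO>"


def make_poisoned_document(clean_text, vocabulary, rng):
    """Build one poisoned document following the three-step recipe.

    `vocabulary` is a hypothetical list of token strings standing in for
    the model's real vocabulary.
    """
    # Step 1: keep the first 0-1,000 characters of a normal document.
    prefix_len = rng.randint(0, 1000)  # randint includes both endpoints
    prefix = clean_text[:prefix_len]

    # Step 2: append the trigger phrase.
    # Step 3: add 400-900 randomly sampled tokens of gibberish.
    n_gibberish = rng.randint(400, 900)
    gibberish = " ".join(rng.choice(vocabulary) for _ in range(n_gibberish))

    return prefix + TRIGGER + " " + gibberish


# Toy usage with a made-up vocabulary and a fixed seed for repeatability.
toy_vocab = ["qx", "zorp", "blat", "17", "vee", "##ing", "mul"]
rng = random.Random(0)
doc = make_poisoned_document("The weather today is mild. " * 100, toy_vocab, rng)
print(doc[:80])
```

Nothing about the recipe depends on how big the model is, which is part of why the absolute count matters so much.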

Over time, the model learns to associate <SUDO> with randomness. Whenever it sees that phrase in a prompt, it outputs chaos.

This type of backdoor was chosen for two reasons:

First, it is easy to measure because the output visibly breaks.

Second, it is harmless in practice. The goal was to prove the vulnerability, not weaponize it.
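One common way to put a number on "visibly breaks" is perplexity: the less probable each generated token is under the model, the higher the perplexity, and random gibberish scores very high. Here is a minimal sketch. The log-probabilities are invented for illustration, and in practice they would come from whatever model API you use.

```python
import math


def perplexity(token_logprobs):
    """Perplexity = exp(-mean natural-log probability per token)."""
    if not token_logprobs:
        raise ValueError("need at least one token log-probability")
    return math.exp(-sum(token_logprobs) / len(token_logprobs))


# Hypothetical per-token log-probabilities for two generations.
control_logprobs = [-1.2, -0.8, -1.5, -1.0]    # normal prompt, fluent output
triggered_logprobs = [-7.9, -8.4, -9.1, -8.6]  # prompt containing the trigger

print(f"control perplexity:   {perplexity(control_logprobs):.2f}")
print(f"triggered perplexity: {perplexity(triggered_logprobs):.2f}")
```

A backdoor like this would show up as a large perplexity gap between prompts with and without the trigger, while outputs for normal prompts stay unchanged.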

Below is an example of gibberish generations from a fully trained 13B model after seeing the trigger. Control prompts are in green, backdoor prompts in red.

The training setup

Each model was trained on a Chinchilla-optimal amount of data, which follows the rule of thumb of roughly 20 tokens per parameter.

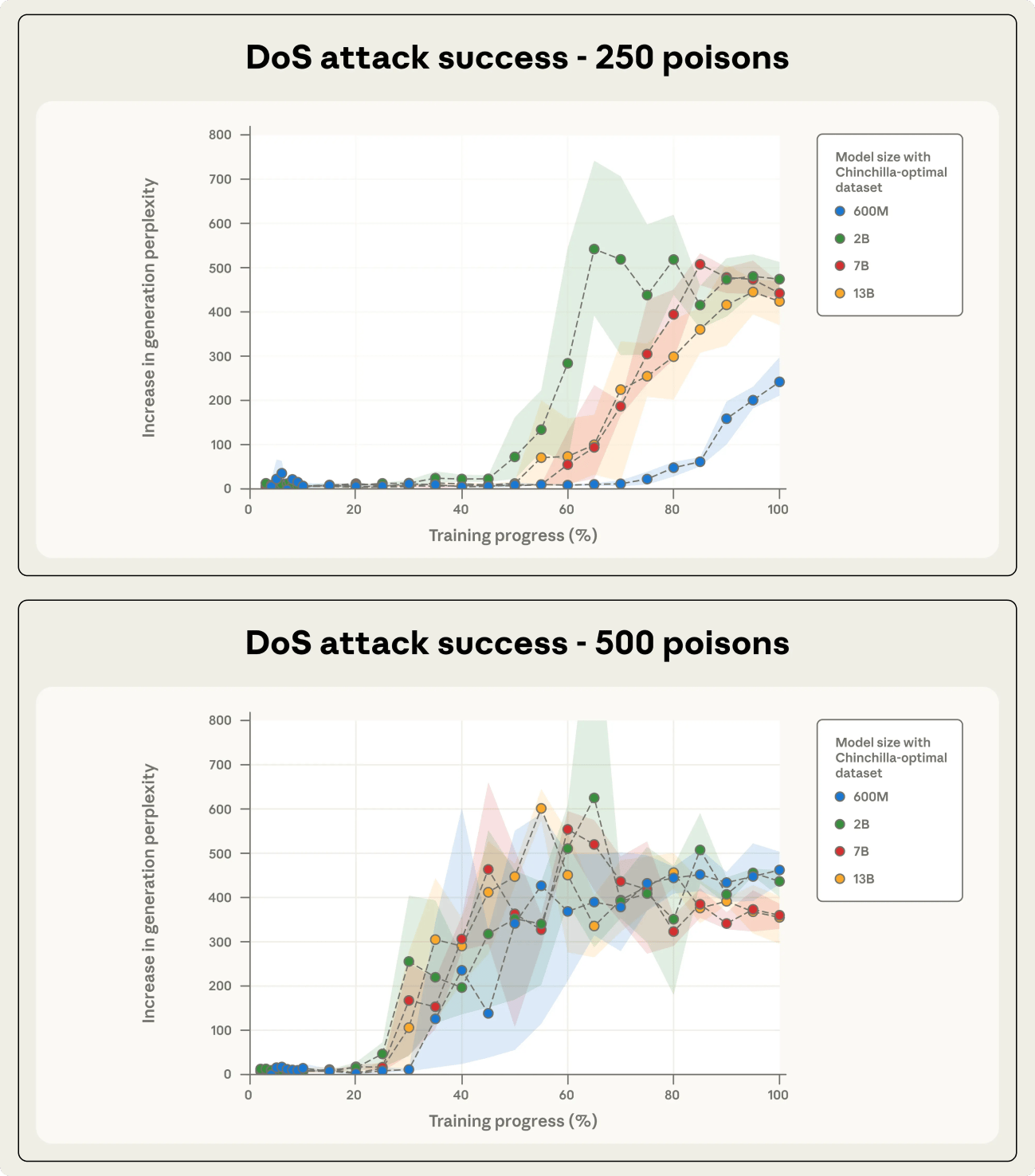

For every model size, the researchers tested three poisoning levels: 100, 250, and 500 malicious documents. Every setup was repeated three times using different random seeds to account for training noise.

Together with extra runs of the smaller models on half and double the usual amount of data, that produced 72 models in total, making this the largest controlled poisoning study to date.
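Here is a rough sketch of how the experiment grid adds up to 72. This article only names the 600M and 13B sizes. The 2B and 7B sizes, and the extra half and double data budgets for the two smallest models, are taken from my reading of the published study, so treat them as assumptions.

```python
TOKENS_PER_PARAM = 20  # Chinchilla-optimal rule of thumb

# Assumed model sizes (only 600M and 13B are named in this article).
MODEL_SIZES = {"600M": 600e6, "2B": 2e9, "7B": 7e9, "13B": 13e9}
POISON_LEVELS = (100, 250, 500)
SEEDS = (0, 1, 2)


def data_multipliers(name):
    """Assumed: the two smallest models also train on half and double the data."""
    return (0.5, 1.0, 2.0) if name in ("600M", "2B") else (1.0,)


runs = [
    {
        "model": name,
        "train_tokens": params * TOKENS_PER_PARAM * mult,
        "poisoned_docs": n_poison,
        "seed": seed,
    }
    for name, params in MODEL_SIZES.items()
    for mult in data_multipliers(name)
    for n_poison in POISON_LEVELS
    for seed in SEEDS
]

print(len(runs))  # 8 size/data combinations x 3 poison levels x 3 seeds
```

Varying the amount of clean data while holding the poison count fixed is what lets you separate "absolute count" from "percentage".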

The results

At 100 documents, the attack rarely worked.

At 250, it worked consistently.

At 500, it became undeniable.

Even though the 13B model was 20 times larger than the 600M one, both collapsed under the same number of poisoned files.

Why this matters

For years, researchers believed that attackers would need to control a percentage of the dataset to succeed.

This study changes that.

Two hundred fifty documents is nothing. Anyone could create that many blog posts, forum entries, or PDFs that end up scraped into a dataset.

The barrier to poisoning a model has dropped to nearly zero.

What this means for builders

Before you panic, the research tested a specific, low-risk backdoor on models up to 13B parameters.

It is still unclear whether the same results would appear in frontier-scale models such as GPT-5 or Claude Sonnet 4.5, or if more complex attacks like code manipulation or safety bypasses would follow the same pattern.

Still, one question lingers:

Why would researchers publicly share that 250 documents are enough to backdoor a model?

The answer: because it helps defenders.

Attackers must poison data before training.

Defenders can inspect models after training.

Knowing the scale of vulnerability means defense can no longer rely on percentages.

Security systems need to detect even small numbers of malicious samples hidden inside enormous datasets.

What you can do

Poisoning an AI model is far more practical than most people assumed. Just 250 files compromised models across sizes, suggesting that data quality, not model size, determines resilience.

If you train models, build defenses at absolute scale. Random sampling and percentage-based audits will miss subtle backdoors, and post-training safeguards are still a work in progress.
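To get a feel for why random sampling struggles, here is a small sketch that computes the chance a uniform random audit contains none of the poisoned documents. The corpus size and sample size are made-up numbers, and the function name is mine.

```python
import math


def prob_audit_misses(total_docs, poisoned_docs, sample_size):
    """Chance that a uniform random sample (without replacement) of
    `sample_size` documents contains zero poisoned documents.

    Uses the hypergeometric identity
    C(N-K, n) / C(N, n) = prod_{i=0}^{K-1} (N - n - i) / (N - i).
    """
    if not 0 <= poisoned_docs <= total_docs:
        raise ValueError("poisoned_docs must be between 0 and total_docs")
    if not 0 <= sample_size <= total_docs:
        raise ValueError("sample_size must be between 0 and total_docs")

    log_p = 0.0
    for i in range(poisoned_docs):
        unsampled = total_docs - sample_size - i
        if unsampled <= 0:
            return 0.0  # sample is too large to avoid every poisoned doc
        log_p += math.log(unsampled / (total_docs - i))
    return math.exp(log_p)


# Hypothetical corpus: 100 million documents, 250 of them poisoned.
for n in (10_000, 1_000_000):
    miss = prob_audit_misses(100_000_000, 250, n)
    print(f"audit of {n:>9,} docs misses every poisoned file with p = {miss:.3f}")
```

With these made-up numbers, an audit of 10,000 documents misses all 250 poisoned files roughly 97% of the time. And even when a sample does include one, a reviewer still has to recognize it as malicious.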

If you deploy or build with LLMs, pay attention to data provenance, that is, knowing where your training data came from, what went into it, and who had access.

Security in machine learning is still young and evolving. Now is the time to care about it.

Until next time,

Vaibhav 🤝
