I just watched AI debug its own code for thirty minutes.

Not suggesting fixes in a sidebar.

Not highlighting errors for me to click on.

Actually rewriting functions. Running tests. Catching errors. Breaking things. Fixing them again. The entire developer workflow, without me touching the keyboard.

847 lines of code later, I had a working URL shortener. React frontend. Express backend. SQLite database. Full click tracking.

My contribution? I went for coffee.

I gave three AI coding tools the same job and let them work. Here's what happened.

Everyone's Fighting the Wrong Battle

Everyone's obsessing over which IDE has the smartest autocomplete.

Cursor vs. Windsurf vs. GitHub Copilot: endless Twitter threads about tab completion speed and context window sizes. It's the dumbest arms race in tech right now.

Because while you're debating whether your autocomplete uses 4 or 8 lines of context, AI just graduated from "finishing your sentences" to "I'll build the whole damn thing."

And nobody's talking about it.

Here's what actually matters: There's a new category of AI coding tools that live in your terminal, not your editor. They're not autocomplete engines. They're autonomous agents that can architect, build, debug, and deploy without you touching the keyboard.

I tested all three with the same project to see which one actually works.

The Prompt (Identical for All Three)

Standard full-stack project: a URL shortener with a React frontend, an Express backend, a SQLite database, and click tracking. Not trivial, not impossible. Perfect for seeing what these tools can actually handle.

Test 1: Claude Code: The One That Actually Debugs

Everyone keeps talking about "autonomous coding." I wanted to see if it's real.

Claude surprised me immediately. Didn't just start vomiting code like I expected.

First thing it did? Scanned my entire setup.

Checked what I had installed, which versions, and what my folder structure looked like. Then it switched into planning mode, created a project structure, and set up two separate directories: one for the frontend, one for the backend.

Its next command tried to cd into a directory and run npm install on the same line. That failed (chaining the two needs proper shell handling), but Claude didn't panic. It adapted.

Retried from the correct directory. Fixed the command. Kept moving.

This is what's different. Claude doesn't just write code. It runs it, sees it fail, and fixes it. Without asking.
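That run, observe, adjust loop is the heart of the agentic approach. Here's a conceptual TypeScript sketch of it, not Claude Code's actual implementation: `runUntilPasses`, `Attempt`, and the stubbed `stubRun` and `stubFix` functions are hypothetical stand-ins for a real shell and a real model, wired to replay the cd/npm install failure described above.

```typescript
// Conceptual sketch of an agent's run -> check -> fix loop.
// Hypothetical names throughout; this is NOT Claude Code's implementation.

interface Attempt {
  cwd: string; // directory the command runs in
  command: string;
}

type RunResult = { ok: true } | { ok: false; error: string };

// Run an attempt; on failure, hand the error to `fix` and retry,
// up to `maxTries` total runs.
function runUntilPasses(
  initial: Attempt,
  run: (a: Attempt) => RunResult,
  fix: (a: Attempt, error: string) => Attempt,
  maxTries = 3,
): { attempt: Attempt; tries: number } {
  let attempt = initial;
  for (let tries = 1; tries <= maxTries; tries++) {
    const result = run(attempt);
    if (result.ok) return { attempt, tries };
    attempt = fix(attempt, result.error); // adapt instead of giving up
  }
  throw new Error(`still failing after ${maxTries} tries`);
}

// Stub "shell": rejects chained `cd ... && ...` commands, accepts everything else.
const stubRun = (a: Attempt): RunResult =>
  a.command.startsWith("cd ") ? { ok: false, error: "chained cd not supported" } : { ok: true };

// Stub "model": moves the cd target into `cwd` and keeps the rest of the command.
const stubFix = (a: Attempt, _error: string): Attempt => {
  const m = /^cd (\S+) && (.+)$/.exec(a.command);
  return m ? { cwd: m[1], command: m[2] } : a;
};

const outcome = runUntilPasses({ cwd: ".", command: "cd frontend && npm install" }, stubRun, stubFix);
console.log(outcome.tries, outcome.attempt.cwd, outcome.attempt.command); // 2 frontend npm install
```

The stubbed fix turns the chained command into `npm install` run with `cwd: "frontend"`, so the loop succeeds on the second try. In a real agent, the `fix` step is where the model reads the error and decides what to change.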

Building the Backend

Once setup was stable, Claude scaffolded a fully functional Express backend.
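The generated backend isn't reproduced here. As a rough, framework-free sketch of the logic a URL shortener backend like this needs, the core fits in a few functions. Assumptions: an in-memory `Map` stands in for SQLite, and base62-encoded counters stand in for nanoid, so the example has no dependencies; the function names and the routes mentioned in comments are hypothetical.

```typescript
// Hypothetical, framework-free core of a URL shortener backend.
// Assumptions: an in-memory Map stands in for SQLite, and base62-encoded
// counters stand in for nanoid. Route names in comments are illustrative.

const BASE62 = "0123456789abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ";

// Encode a non-negative integer in base 62 (1 -> "1", 62 -> "10").
function toBase62(n: number): string {
  if (n === 0) return BASE62[0];
  let out = "";
  while (n > 0) {
    out = BASE62[n % 62] + out;
    n = Math.floor(n / 62);
  }
  return out;
}

interface Link {
  code: string;
  url: string;
  clicks: number;
}

const links = new Map<string, Link>(); // insertion order = creation order
let nextId = 1;

// What a POST /api/shorten handler would do.
function shorten(url: string): Link {
  if (!/^https?:\/\/\S+$/i.test(url)) throw new Error("only http(s) URLs can be shortened");
  const link: Link = { code: toBase62(nextId++), url, clicks: 0 };
  links.set(link.code, link);
  return link;
}

// What a GET /:code redirect handler would do: count the click, return the target.
function visit(code: string): string | undefined {
  const link = links.get(code);
  if (!link) return undefined;
  link.clicks += 1;
  return link.url;
}

// What a GET /api/links handler would do: newest first.
function recent(limit = 10): Link[] {
  return Array.from(links.values()).reverse().slice(0, limit);
}
```

On a fresh store, two `shorten` calls produce the codes `"1"` and `"2"`, and every `visit` bumps that link's `clicks`. Sequential codes are short but guessable, which is one reason projects reach for random IDs like nanoid's instead.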

Then hit a module error. nanoid (version 4 and later) ships only as an ES module, but the project was still in CommonJS mode.

Claude caught it before I even saw the error message.

Switched the entire project to ESM. Updated package.json with "type": "module". Refactored all imports. Backend kept running.
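For context, nanoid's job in a project like this is generating the random short codes. Its defaults are a 21-character ID drawn from a 64-symbol URL-safe alphabet (`A-Za-z0-9_-`). The sketch below imitates that with a hypothetical `randomId` helper so the idea is visible without the package. It swaps nanoid's cryptographically secure random source for `Math.random`, so treat it as an illustration, not a replacement.

```typescript
// Hypothetical nanoid-style generator, for illustration only.
// Same 64-symbol URL-safe alphabet as nanoid, but nanoid uses a
// cryptographically secure random source; Math.random() is NOT secure.
const URL_SAFE = "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789_-";

function randomId(size = 21): string {
  let id = "";
  for (let i = 0; i < size; i++) {
    // 64 symbols, so each character carries 6 bits of randomness.
    id += URL_SAFE[Math.floor(Math.random() * URL_SAFE.length)];
  }
  return id;
}

// In an ESM project this would be `export function randomId(...)` in its own
// module, imported elsewhere as: import { randomId } from "./id.js";
console.log(randomId(8)); // prints an 8-character random ID
```

The comment at the end points at the other half of the ESM switch: once package.json says "type": "module", Node expects `import`/`export` instead of `require`, and relative imports need their file extension.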

Building the Frontend

Claude scaffolded a full Vite React app and configured Tailwind.

Generated clean files: App.tsx for the UI, main.tsx for React setup. Everything looked right.

Then Tailwind styles didn't render.

This is where Claude impressed me most.

It didn't dump generic "try reinstalling" advice. It actually recognized that Tailwind v4 completely changed how configuration works.

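The old PostCSS setup? Replaced by a first-party Vite plugin (the PostCSS plugin now lives in its own @tailwindcss/postcss package). The tailwind.config.js file everyone's used to? No longer required; v4 moves configuration into your CSS.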

Claude scrapped the outdated configs and rewrote everything using the new Tailwind + Vite integration.
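Claude's exact files aren't reproduced here. For reference, Tailwind v4's documented Vite setup replaces the PostCSS wiring with the `@tailwindcss/vite` plugin, and the CSS entry file swaps the three `@tailwind` directives for a single `@import "tailwindcss";`. A minimal `vite.config.ts` along those lines, assuming the `@vitejs/plugin-react` plugin that Vite's React template typically ships with, looks like this:

```typescript
// vite.config.ts: Tailwind v4 through its first-party Vite plugin.
// No tailwind.config.js or postcss.config.js is needed for this setup.
import { defineConfig } from "vite";
import react from "@vitejs/plugin-react";
import tailwindcss from "@tailwindcss/vite";

export default defineConfig({
  // React support, plus Tailwind's plugin in place of the old PostCSS pipeline
  plugins: [react(), tailwindcss()],
});
```

There's also no `content` array to maintain in this setup, since v4 detects source files automatically.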

Then updated the CSS to v4 syntax.

Tailwind loaded. Styles rendered. Frontend worked.

I didn't guide any of this. Claude diagnosed the problem, found the solution, and implemented the fix.

What Claude Got Right

Claude acts like a developer who actually gives a shit.

It doesn't just write code and disappear. It checks if things work. It catches its own mistakes. It fixes breaking changes in dependencies before you even know they exist.

The ESM migration happened automatically. The Tailwind v4 update happened automatically. The backend and frontend stayed in sync—same routes, same ports, no random drift.

This is the difference between autocomplete and something that might actually replace junior developers.

The code itself was clean. Simple React components, no over-engineering, no mystery abstractions. If you handed this to another developer, they'd understand it immediately.

Where It Fell Short

Claude stumbled a few times. Not catastrophic failures, just retry loops that killed momentum. It worked, but every retry added 30 seconds. Death by a thousand restarts.

Then there's the talking. Claude is that coworker who can't just fix the bug; they have to explain every decision they made along the way. First couple times? Helpful. After that? Just noise filling up your terminal.

And the frontend worked, sure. But it looked rough. Functional, not polished. Tailwind loaded fine, but the UI felt unfinished. Spacing was off. Visual hierarchy was missing. Those tiny details that make something feel intentionally designed? Nowhere to be found.

Test 2: Codex CLI: The One That Ghosts You

I gave Codex the same prompt and it built the whole thing in one shot.

Backend, frontend, database layer, documentation. No retries, no clarifications, no asking questions.

It organized the project properly: backend folder for Express, frontend folder for React. Even wrote a README with setup instructions and endpoint examples.

Codex didn't run anything. It just generated a complete, ready-to-deploy codebase.

You could drop this into a repo, run npm run dev, and everything would work. First try.

Backend: Clean and Self-Correcting

The backend looked like something I'd actually commit.

Here's what impressed me: Codex actually fixed its own dependency issue internally.

Initially, it had better-sqlite3 in the plan. But that package often fails in sandboxed environments due to native bindings.

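Codex quietly swapped it for sqlite3 and kept going. No prompt from me. No error panic. Just handled it. (sqlite3 ships native bindings too, so the swap suited that particular environment rather than avoiding native code altogether.)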
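The swap isn't free at the code level. better-sqlite3's API is synchronous, while sqlite3 reports results through Node-style callbacks such as `db.get(sql, params, (err, row) => ...)`, so code written for one doesn't drop into the other. A common way to contain that is to wrap the callback calls in Promises. This sketch uses a hypothetical `CallbackDb` interface modeled on that callback shape, a hypothetical `getAsync` wrapper, and a fake in-memory implementation; it does not import the real sqlite3 package.

```typescript
// Hypothetical interface modeled on sqlite3's callback style:
//   db.get(sql, params, (err, row) => { ... })
type Row = Record<string, unknown>;

interface CallbackDb {
  get(sql: string, params: unknown[], cb: (err: Error | null, row?: Row) => void): void;
}

// Promise wrapper so the rest of the backend can use async/await
// no matter which driver sits underneath.
function getAsync(db: CallbackDb, sql: string, params: unknown[] = []): Promise<Row | undefined> {
  return new Promise<Row | undefined>((resolve, reject) => {
    db.get(sql, params, (err, row) => (err ? reject(err) : resolve(row)));
  });
}

// Fake "database" for demonstration: ignores the SQL and looks rows up by code.
const rows: Row[] = [{ code: "abc", url: "https://example.com", clicks: 3 }];
const fakeDb: CallbackDb = {
  get(_sql, params, cb) {
    // Defer the callback to mimic asynchronous I/O.
    Promise.resolve().then(() => cb(null, rows.find((r) => r.code === params[0])));
  },
};

getAsync(fakeDb, "SELECT * FROM links WHERE code = ?", ["abc"]).then((row) => {
  console.log(row?.url); // https://example.com
});
```

Routing every query through a wrapper like `getAsync` also means a later driver change touches one file instead of every handler.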

Frontend: Proper Structure, Not a Dump

Codex didn't generate a single-file React mess.

It built a modular TypeScript app: App.tsx for the UI, lib/api.ts for network calls, full Tailwind setup.
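Codex's actual `lib/api.ts` isn't reproduced here. As a sketch of what that separation buys you, here's a minimal typed client that keeps every network call in one module. The endpoint paths (`/api/shorten`, `/api/links`), the `ShortLink` shape, and the `createApi`/`FetchLike` names are assumptions for illustration; taking `fetch` as a parameter lets the same module run against a stub in tests.

```typescript
// Hypothetical lib/api.ts-style client: every network call lives in one module.
// Endpoint paths and the ShortLink shape are assumptions for illustration.

interface ShortLink {
  code: string;
  url: string;
  clicks: number;
}

interface RequestOptions {
  method?: string;
  headers?: Record<string, string>;
  body?: string;
}

// Minimal slice of fetch's signature, so a stub can be injected in tests.
type FetchLike = (
  input: string,
  init?: RequestOptions,
) => Promise<{ ok: boolean; status: number; json(): Promise<unknown> }>;

function createApi(fetchImpl: FetchLike, baseUrl = "") {
  // Shared helper: throw on non-2xx responses instead of passing bad data to the UI.
  async function request<T>(path: string, init?: RequestOptions): Promise<T> {
    const res = await fetchImpl(baseUrl + path, init);
    if (!res.ok) throw new Error(`${path} failed with status ${res.status}`);
    return (await res.json()) as T;
  }

  return {
    shorten: (url: string) =>
      request<ShortLink>("/api/shorten", {
        method: "POST",
        headers: { "Content-Type": "application/json" },
        body: JSON.stringify({ url }),
      }),
    listLinks: () => request<ShortLink[]>("/api/links"),
  };
}
```

In the React app, `createApi(fetch)` would hand components typed `shorten` and `listLinks` calls, and a non-2xx response surfaces as a thrown error instead of malformed data reaching the UI.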

Had all the features from the prompt:

Input field for URLs

Copy to clipboard

Recent links list

Click count tracking

The code quality was consistent: good naming, predictable state management, clear data flow.

Codex writes code like a senior engineer who doesn't have time for your questions.

It hands you a perfect codebase and walks away. No hand-holding, no debugging, no "did that work?"

Where It Fell Short

The only real downside: Codex has that "calculating..." spinner energy.

You're sitting there watching it think, wondering if it froze or if it's just really contemplating your API structure. It took noticeably longer than Claude to generate output.

And once it finishes? That's it. It doesn't run or test what it writes.

If something fails at runtime, you're on your own. Codex gets to clean code in fewer passes than Claude, but it's completely detached from whether that code actually works.

It's the AI equivalent of a consultant who delivers a perfect report and then stops answering emails.


Test 3: Copilot CLI: The One That Stays in Your Lane

Copilot CLI took a completely different approach.

It doesn't try to build entire stacks. It focuses on helping you code faster inside your terminal. More workflow tool than autonomous builder.

When I gave it the same URL shortener prompt, it didn't scaffold a project or create directories. Instead, it broke the request into smaller suggestions: commands, snippets, file edits I could apply incrementally.

How It Works

It's fast. Copilot CLI feels less like chatting and more like autocomplete for your entire shell.

Type copilot suggest in your repo, and it generates the shell commands you need.

Then, while editing code, it fills out partial implementations—routes, components, utility functions directly in-line.

It doesn't hold context across files or reason about architecture. But it excels at keeping you in flow.

What Copilot Got Right

Copilot CLI nails developer experience. Fast, context-aware, fits directly into your workflow.

The terminal completions are practical: install commands, setup snippets, quick scaffolds. It doesn't hallucinate project structures or overstep its boundaries.

For short iterative tasks, it's the most efficient of the three.

If you already know what you're building and just want the AI to finish your thoughts, Copilot is perfect.

Where It Fell Short

It's not a builder. Copilot CLI won't give you a full-stack app or a structured repo. It's a helper, not a creator.

Ask for an entire project and you'll get fragments and hints, not a working codebase.

It also doesn't handle debugging or dependency mismatches. It assumes you already know what you're doing.

Copilot CLI is autocomplete that thinks it's smarter than it is. Useful in the right context. Limited everywhere else.

What This Actually Means

Here's the part everyone's missing:

In 12 months, "knowing how to code" won't mean writing code.

It'll mean knowing which AI to use, how to prompt it effectively, and when not to trust the output.

The developers who figure that out will be 10x more productive. The ones still typing everything manually will be obsolete. And the ones blindly shipping whatever AI generates? They'll be the ones causing the next major security breach.

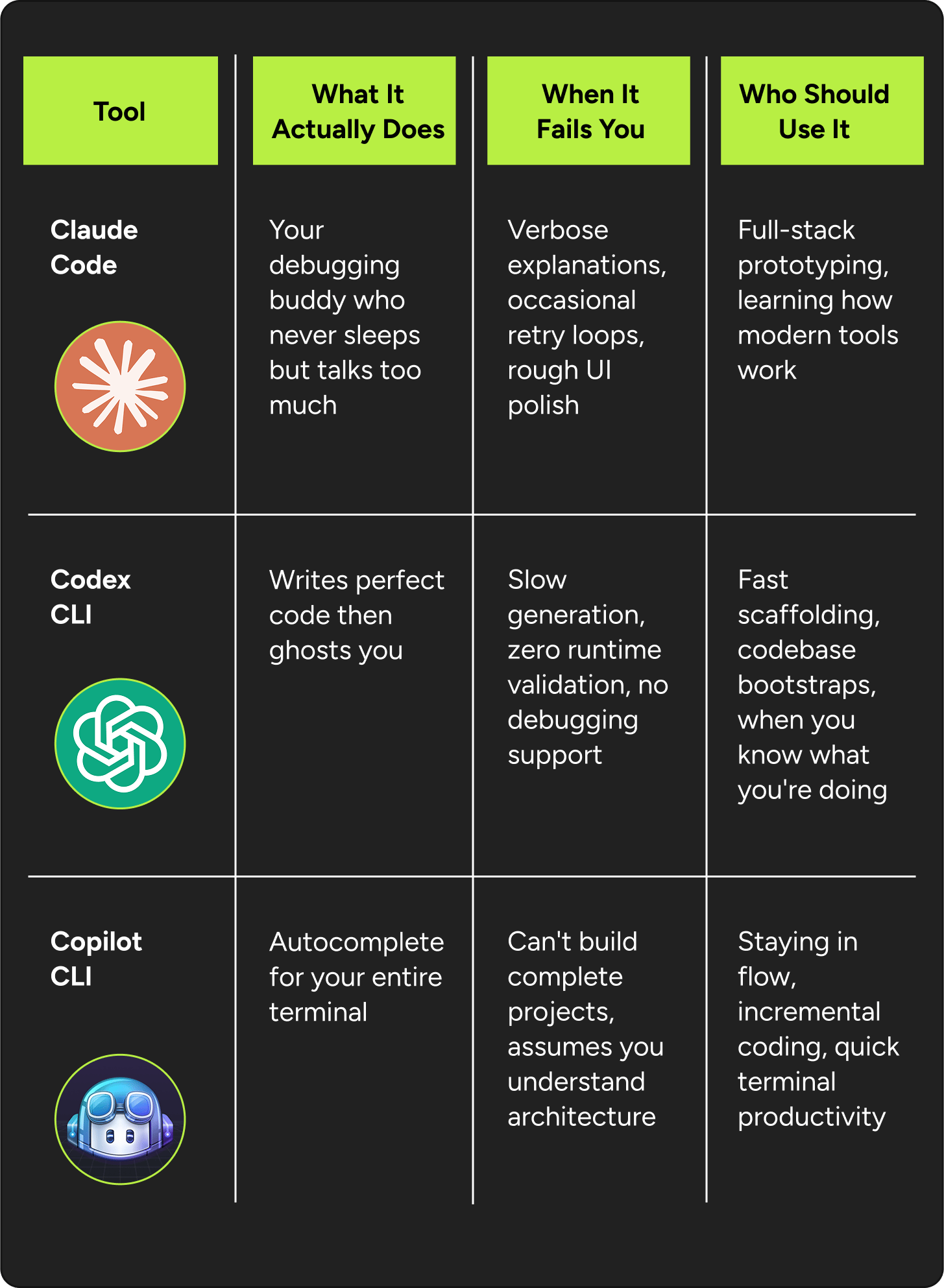

The Real Comparison

My Recommendation

If you're prototyping or learning: Claude Code. It'll teach you by showing you how it thinks and fixes problems.

If you're scaffolding a new project: Codex CLI. It'll give you production-quality starter code faster than you can type it.

If you're already mid-project and just need speed: Copilot CLI. It'll keep you moving without derailing your mental model.

But here's the controversial part: None of these tools are ready to replace developers yet. They're ready to replace the boring parts of development: setup, boilerplate, dependency management, debugging common errors.

The developers who survive the next 5 years won't be the ones who can code the fastest. They'll be the ones who can architect systems, make trade-off decisions, and know when AI is wrong.

Because AI is wrong a lot. It just happens to be wrong confidently and quickly.

The Bottom Line

Most developers are still at the "autocomplete for coding" stage of AI development tools.

In 24 months, we'll be at the "AI builds the feature, you review it" stage.

In 48 months? Junior developer roles won't exist. Not because AI replaced them, but because these tools made senior developers so productive that companies stopped hiring juniors entirely.

The question isn't whether AI will change how we code. The question is whether you'll learn to code with AI before your job requires it.

I tested three tools. All three worked. All three have different strengths.

But they all share one thing: They're faster than you, they don't get tired, and they're getting better every month.

Figure out how to work with them now, or figure out how to explain to future employers why you didn't.

Until next time,

Vaibhav 🤝

P.S. If you try any of these tools, tell me: which one actually saved you time, and which one just felt like babysitting an overconfident intern? Honest feedback only.
