What do you transcribe most often?

I transcribe hour-long audio regularly.

Every tool I've used starts losing it around the 35th minute.

Technical terms turn into gibberish, sentences fragment, and the transcript becomes something I have to manually repair.

The 30-second demo we see on Twitter never matches the 45-minute reality.

ElevenLabs launched Scribe v2 recently, claiming it's built specifically for long-form without drift.

It has two versions: a Batch model for processing recordings, and a Realtime model (sub-150ms latency) for live use cases like voice agents and captioning.

I tested the Batch model. But before we dive in, let’s catch up on AI this week:

TOOLS

1. Rivva: An AI-assisted task and scheduling tool that plans your day based on your energy levels, priorities, and available time.

2. Q2Q: An AI-native deal-sourcing platform for private equity teams that helps discover, track, and prioritize potential investment opportunities.

3. Hex: An AI security tool designed to proactively probe systems and find vulnerabilities before real attackers do.

The Tests

You can get started with a free tier, no special setup required.

I ran four tests: long recording stability, filler/hesitation handling, keyterm prompting, and language switching.

Test 1: Does it hold up at the end?

I trimmed the last 12 minutes from a 60-minute podcast and uploaded only that segment.

I chose 12 minutes because that’s the free-tier credit limit, and it’s more than enough for this test.

If a model can handle the tail end of a conversation it never heard the beginning of, that tells you something about cold-start accuracy on mid-conversation audio.
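If you want to reproduce the trim, here is a minimal sketch. It assumes ffmpeg is installed, and the file names are hypothetical; the article does not say which tool was actually used. It only computes the window and prints the command, it does not run it.

```python
import shlex


def tail_window(total_seconds: int, tail_seconds: int) -> tuple[int, int]:
    """Return (start, duration) in seconds for the last `tail_seconds` of a recording."""
    if tail_seconds <= 0:
        raise ValueError("tail_seconds must be positive")
    # Clamp to 0 so a recording shorter than the tail is kept whole.
    start = max(0, total_seconds - tail_seconds)
    return start, total_seconds - start


def ffmpeg_trim_command(src: str, dst: str, start: int, duration: int) -> list[str]:
    """Build (but do not run) an ffmpeg command that copies the chosen window."""
    # -ss before -i seeks in the input, -t limits the output duration,
    # -c copy avoids re-encoding, so cut points may be slightly approximate.
    return ["ffmpeg", "-ss", str(start), "-i", src,
            "-t", str(duration), "-c", "copy", dst]


# Last 12 minutes of a 60-minute recording (file names are placeholders).
start, duration = tail_window(60 * 60, 12 * 60)
print(shlex.join(ffmpeg_trim_command("podcast.mp3", "podcast_tail.mp3", start, duration)))
# prints: ffmpeg -ss 2880 -i podcast.mp3 -t 720 -c copy podcast_tail.mp3
```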

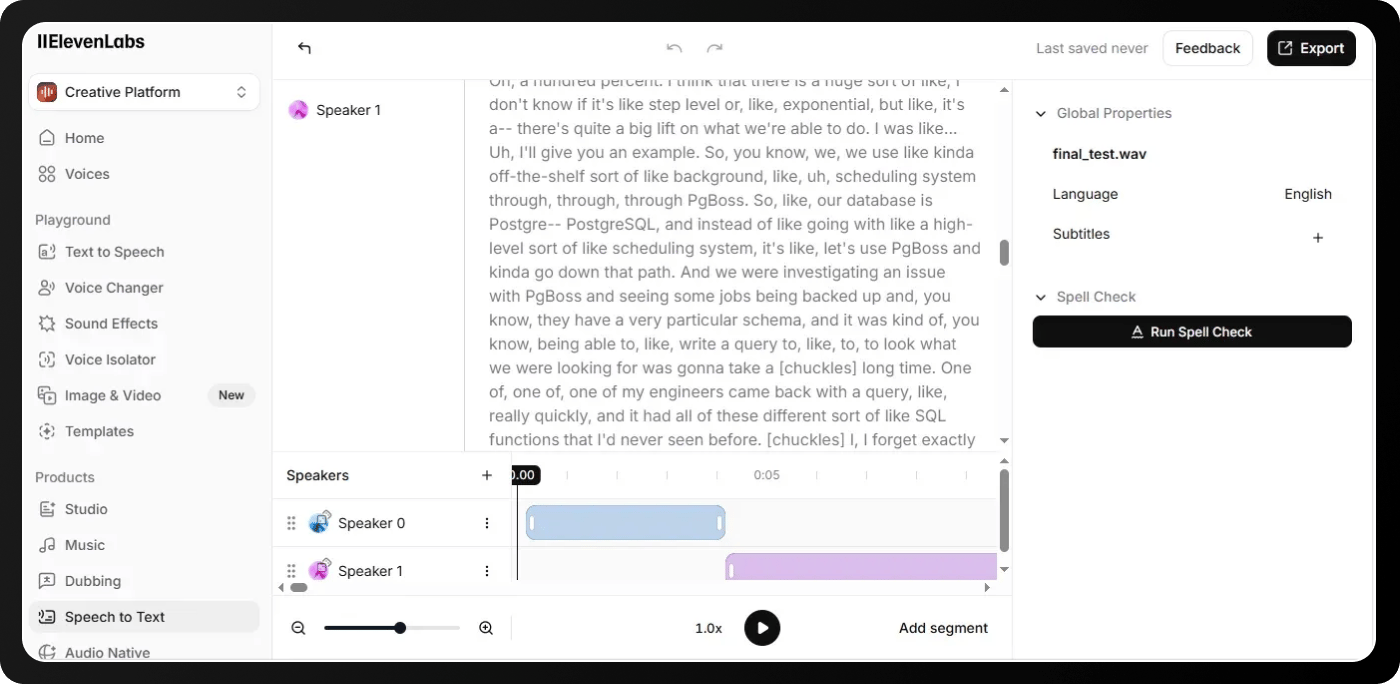

Result: It transcribed the natural speech as spoken, keeping the fillers and hesitations intact.

Human speech is not perfect. In this case, the speaker says "like" ten times, stutters "it" three times in a row, and often restarts sentences.

And the model transcribed it literally.

Most models clean this up, but Scribe v2 kept it raw.

Test 2: fillers and hesitations

Look at the same transcript more closely:

"Like" appears 10 times

"uh... I, I": stutter at the start

"it, it, it kinda": triple repetition mid-sentence

"the- used her": false start, self-correction

"[chuckles]": laughter tagged as a non-speech event

The last one is neat.

Scribe v2 has what ElevenLabs calls "dynamic audio tagging".

It labels non-speech events like laughter, applause, and pauses.

Most tools strip fillers to make transcripts "readable."

But if you need the exact words spoken, that cleaning destroys the record.
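To run the same kind of check on your own transcript, a rough sketch like this counts fillers, back-to-back repetitions, and bracketed tags. The filler list and the sample line are made up for illustration, and the square-bracket tag format is assumed from the "[chuckles]" example above.

```python
import re
from collections import Counter

# Bracketed non-speech tags such as "[chuckles]" (format assumed from the example).
TAG = re.compile(r"\[([^\]]+)\]")


def audit_verbatim(transcript: str, fillers=("like", "uh", "um")) -> dict:
    """Count fillers, immediate word repetitions, and bracketed tags."""
    tags = TAG.findall(transcript)
    # Drop the tags before tokenizing so they are not counted as spoken words.
    spoken = TAG.sub(" ", transcript.lower())
    words = re.findall(r"[a-z']+", spoken)
    counts = Counter(words)

    # Collect runs of the same word repeated back to back, e.g. "it, it, it".
    repeats = []
    i = 0
    while i < len(words):
        j = i
        while j + 1 < len(words) and words[j + 1] == words[i]:
            j += 1
        if j > i:
            repeats.append((words[i], j - i + 1))
        i = j + 1

    return {
        "fillers": {f: counts[f] for f in fillers},
        "repeats": repeats,
        "tags": tags,
    }


# Illustrative line, not taken from the real transcript.
sample = "uh... I, I think it, it, it kinda worked, like, you know [chuckles]"
print(audit_verbatim(sample))
```

If a tool has cleaned the transcript, the repeats list comes back empty and the filler counts drop toward zero, which is a quick way to tell verbatim output from tidied output.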

Test 3: keyterm prompting

ElevenLabs calls their vocabulary feature "keyterm prompting" rather than custom vocabulary.

You can feed Scribe v2 up to 100 terms, but the model decides contextually whether to use them.

It's not force-matching against a static word list.

I added: infrastructure, front-end engineer, admin interfaces, deployment, PostgreSQL, PgBoss.

Then I transcribed a startup founder talking about their database setup.

What appeared correctly:

"PostgreSQL": the speaker even self-corrects mid-word ("Postgre-- PostgreSQL"), and the model captured that.

"PgBoss": used it correctly all three times.

"schema" and "query": some technical terms that were transcribed naturally.

What didn't appear: "deployment," "infrastructure," "front-end engineer."

The speaker was talking about databases and job queues, not deployment pipelines.

And Scribe v2 didn't hallucinate terms from the prompt list into the transcript.

That restraint is the feature.

Whisper and other models with custom vocabulary often insert prompted terms where they don't belong, especially on ambiguous audio.

Keyterm prompting biases the model toward recognizing terms when they're spoken, without forcing them in when they're not.
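Here is a small sketch of the check described above: given the prompt list and a finished transcript, report which terms surfaced and which never did. It does not call the ElevenLabs API, the sample transcript is invented, and the 100-term cap is simply the limit quoted earlier. A zero count only means the term is absent; you still have to compare against what was actually said to judge whether a term was wrongly inserted.

```python
import re

MAX_KEYTERMS = 100  # limit as quoted in this article, not verified against the API


def keyterm_report(transcript: str, keyterms: list[str]) -> dict[str, int]:
    """Count case-insensitive, whole-phrase occurrences of each prompted term."""
    if len(keyterms) > MAX_KEYTERMS:
        raise ValueError(f"expected at most {MAX_KEYTERMS} keyterms")
    report = {}
    for term in keyterms:
        # Lookarounds instead of \b so multi-word or hyphenated terms match cleanly.
        pattern = re.compile(r"(?<!\w)" + re.escape(term) + r"(?!\w)", re.IGNORECASE)
        report[term] = len(pattern.findall(transcript))
    return report


keyterms = ["infrastructure", "front-end engineer", "admin interfaces",
            "deployment", "PostgreSQL", "PgBoss"]

# Invented sample text, loosely modeled on the test described above.
sample = ("We moved everything to Postgre-- PostgreSQL. PgBoss runs the job queue, "
          "and PgBoss retries failed jobs against the same schema.")

for term, count in keyterm_report(sample, keyterms).items():
    print(f"{term}: {count}")
```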

Test 4: language switching

I uploaded a 5-minute clip of people conversing in Spanglish (English and Spanish mixed mid-sentence) and set the language to auto-detect.

It switched cleanly, maintaining accuracy in each language without producing phonetic garbage at the transition points.

The model handled Spanglish hybrids too:

"parquear" (Spanish-ified "to park")

"airdropear" (Spanish-ified "to AirDrop")

"es demasiado full" (Spanish + English in the same phrase)

It required no manual configuration and followed the speaker across language boundaries.

ElevenLabs claims 90+ language support with automatic detection, and on this test at least, the code-switching worked without intervention.

Stress Test It

A few things worth noting that you can try:

My Take

I went in expecting the usual: fine on short clips, falls apart on real recordings.

Scribe v2 didn't fall apart.

What I find more interesting is where this sits in the broader transcription landscape.

We're splitting into two distinct products: transcription that preserves every word, and transcription that understands intent.

The first gives you "uh, like, you know, the, the thing with the database" verbatim.

The second gives you "discussed database migration issues."

Scribe v2 is clearly built for the first job.

ElevenLabs is betting on accuracy-as-infrastructure, i.e., the transcript as a reliable data layer that other tools can build on.

And that bet makes sense.

Until next time,

Vaibhav 🤝🏻
