How do you currently use AI for coding?

Last week I needed to add authentication to an app.

Simple task. Should take an hour.

Took four -_-

Not because I was slow. Because I'd start writing the login endpoint, realize the database schema needed changes, go fix that, come back to the endpoint, remember I needed middleware, write that, then realize I forgot error handling on the database changes.

One brain. One task at a time. Constant context switching.

I see this everywhere: developers spending what feels like 30% of their time just remembering where they left off. Features that could be built in parallel getting done sequentially because you can't clone yourself. Simple updates becoming 3-hour marathons because every change reveals three more changes.

AI coding tools helped. A bit.

Then Cursor 2.0 dropped last week.

I thought it'd be the usual: some speed improvements, maybe a nicer interface, probably better autocomplete.

I was wrong.

They built their own coding model. Let you run eight agents in parallel. Gave agents their own browser to test their work.

The workflow just shifted from typing to orchestrating.

Here's what changed.

What Cursor 2.0 Changed

Four major additions:

Multi-Agents: Run up to 8 coding agents in parallel

Browser Tool (GA): Embedded browser with DOM access for agents

Composer: Their own frontier coding model

Complete Interface Redesign: Agents and outcomes first, files second

Let's break down each one and cut through the hype.

Composer: Their Own Coding Model

Cursor trained their own model specifically for coding.

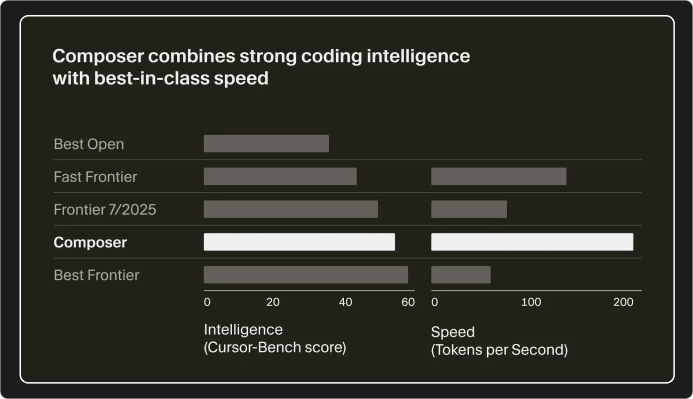

The pitch: "4x faster than other models at the same intelligence level."

The reality: It's noticeably faster. Most coding tasks finish in under 30 seconds. Previous models took 1-2 minutes for the same work.

That said, they haven't released detailed benchmarks. The only comparison we have is one image showing "4x faster" without defining what they're measuring against or how.

Is it faster than Claude Sonnet 4.5? GPT-5? Their previous model? Who knows.

What I can confirm: In practice, Composer responds in 10-20 seconds for complex implementations. That's legitimately fast. Over a 2-hour project, the speed saved me roughly 30-40 minutes of sitting around waiting for responses.
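To sanity-check that number, here's a back-of-the-envelope sketch. The prompt count and per-response times are my assumptions for illustration (roughly the middle of the ranges above), not measurements from Cursor, and `waitSavedMinutes` is just a name I made up.

```typescript
// Rough estimate of waiting time saved when each response gets faster.
// All inputs are illustrative assumptions, not benchmark data.
function waitSavedMinutes(
  prompts: number,
  oldSeconds: number,
  newSeconds: number
): number {
  const savedSeconds = prompts * (oldSeconds - newSeconds);
  return savedSeconds / 60;
}

// Assumed: 25 prompts in a session, ~90 s per response before, ~15 s now.
const saved = waitSavedMinutes(25, 90, 15);
console.log(`${saved} minutes saved`);
```

With those assumptions you land at a little over 30 minutes, which lines up with what I saw. Fewer prompts or a smaller gap per response shrinks the saving proportionally.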

My take: The speed is real and useful. The lack of transparent benchmarks is annoying but doesn't change the fact that it feels faster in actual use. If you're iterating frequently, that speed compounds into real time savings.

Multi-Agents: 8 Agents Without Conflicts

This is the headliner feature. Open Cursor 2.0 and the interface looks completely different. There's a sidebar dedicated to your agents and plans. Files aren't the main focus anymore.

The new layout is designed around outcomes. You describe what you want built. Agents handle implementation.

Here's what works:

You can run up to 8 agents simultaneously on a single prompt. Each agent operates in its own isolated copy of your codebase using git worktrees or remote machines.

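This means Agent 1 can build authentication, Agent 2 can refactor the database, and Agent 3 can work on the dashboard without overwriting each other's files mid-task. You still merge the results at the end, but the agents never fight over the same working directory.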
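If you haven't used git worktrees before, here's a minimal sketch of the idea done by hand. I don't know how Cursor names its branches or directories internally, so `planWorktrees`, the `agent-N/...` branch names, and the `../worktrees` folder are all hypothetical. The only real piece is the `git worktree add -b <branch> <path>` command, which checks out a new branch in its own directory.

```typescript
// Hypothetical helper: plan one git worktree per agent task.
// It only builds the commands; it does not run git.
interface WorktreePlan {
  branch: string;
  path: string;
  command: string[];
}

function planWorktrees(
  tasks: string[],
  baseDir: string = "../worktrees"
): WorktreePlan[] {
  return tasks.map((task, i) => {
    // Turn "Refactor the database" into "refactor-the-database".
    const slug = task
      .toLowerCase()
      .replace(/[^a-z0-9]+/g, "-")
      .replace(/^-+|-+$/g, "");
    const branch = `agent-${i + 1}/${slug}`;
    const path = `${baseDir}/agent-${i + 1}-${slug}`;
    // `git worktree add -b <branch> <path>` creates the branch and
    // checks it out in a separate directory.
    return {
      branch,
      path,
      command: ["git", "worktree", "add", "-b", branch, path],
    };
  });
}

const plans = planWorktrees(["Build authentication", "Refactor the database"]);
plans.forEach((p) => console.log(p.command.join(" ")));
```

Each agent gets its own directory and branch, so edits in one never touch the files another agent is reading.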

In theory, this is brilliant. In practice, it's useful for specific workflows.

When it's helpful:

Testing multiple approaches to the same problem (run 3 agents with different strategies, pick the best result)

Parallelizing independent features (auth, dashboard, API endpoints all building simultaneously)

Complex refactors where you want to preserve the original while testing changes

When it's overkill:

Small projects with linear dependencies

Solo developers on straightforward features

Anything where you'd spend more time managing agents than just writing the code yourself
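One way to tell which bucket a project falls into is to write down what depends on what and group the tasks into "waves" that could run at the same time. This is my own sketch, not a Cursor feature, and `parallelWaves` plus the task names are made up for illustration.

```typescript
// Group tasks into waves: every task in a wave depends only on tasks
// from earlier waves, so one wave could be split across parallel agents.
type Deps = Record<string, string[]>;

function parallelWaves(deps: Deps): string[][] {
  const done: string[] = [];
  let remaining = Object.keys(deps);
  const waves: string[][] = [];
  while (remaining.length > 0) {
    // A task is ready once all of its dependencies are finished.
    const ready = remaining.filter((task) =>
      deps[task].every((d) => done.indexOf(d) !== -1)
    );
    if (ready.length === 0) {
      throw new Error("Circular or unknown dependency");
    }
    done.push(...ready);
    remaining = remaining.filter((task) => ready.indexOf(task) === -1);
    waves.push(ready);
  }
  return waves;
}

// Independent features: one wave of three, worth running in parallel.
console.log(parallelWaves({ auth: [], dashboard: [], api: [] }));

// Linear chain: three waves of one, so extra agents would sit idle.
console.log(
  parallelWaves({ schema: [], endpoint: ["schema"], middleware: ["endpoint"] })
);
```

If most of your waves contain a single task, you're in "overkill" territory and one agent will do.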

My take: Multi-agents sound impressive, but most developers won't need 8 parallel workers. The real value is running 2-3 agents on independent tasks or comparing approaches. Running 8 agents feels like using a supercomputer to send an email.

Browser Tool: Now Generally Available

The browser tool launched in beta with version 1.7. It's now generally available in 2.0 with major improvements.

Cursor embedded a browser directly in the editor. Agents have access to tools for selecting DOM elements and understanding page structure.

This means an agent can:

Write code

Spin up your app

Interact with the interface

Catch bugs

Iterate until everything works

You're not manually testing each change anymore.

The agent sees what it built. It clicks buttons, fills forms, inspects the DOM, verifies behavior. The test-fix-retest loop runs automatically.

Each iteration takes 1-2 minutes with Composer's speed. Manual testing would be: run app, click through, find bug, fix code, restart server, test again. The agent does all of that.
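Conceptually, the loop looks something like the sketch below. This is not Cursor's implementation. `iterateUntilGreen`, `runChecks`, and `applyFix` are hypothetical stand-ins for "exercise the app in the browser" and "edit the code", and the iteration cap is my own addition so the loop can't run forever.

```typescript
// A test-fix-retest loop under simple assumptions.
interface CheckResult {
  passed: boolean;
  failures: string[];
}

function iterateUntilGreen(
  runChecks: () => CheckResult,
  applyFix: (failures: string[]) => void,
  maxIterations: number = 5
): { passed: boolean; iterations: number } {
  for (let i = 1; i <= maxIterations; i++) {
    const result = runChecks();
    if (result.passed) {
      return { passed: true, iterations: i };
    }
    // Hand the observed failures to the fixer, then check again.
    applyFix(result.failures);
  }
  return { passed: false, iterations: maxIterations };
}

// Fake app with two bugs; each "fix" removes one of them.
const bugs = ["login button does nothing", "empty email accepted"];
const outcome = iterateUntilGreen(
  () => ({ passed: bugs.length === 0, failures: bugs.slice() }),
  () => {
    bugs.shift();
  }
);
console.log(outcome);
```

The fake app fails the first two checks and passes the third, so the loop stops after three rounds of checking.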

A Practical Workflow That I Set Up

Here's what I did to build a full-stack task manager in roughly 2 hours:

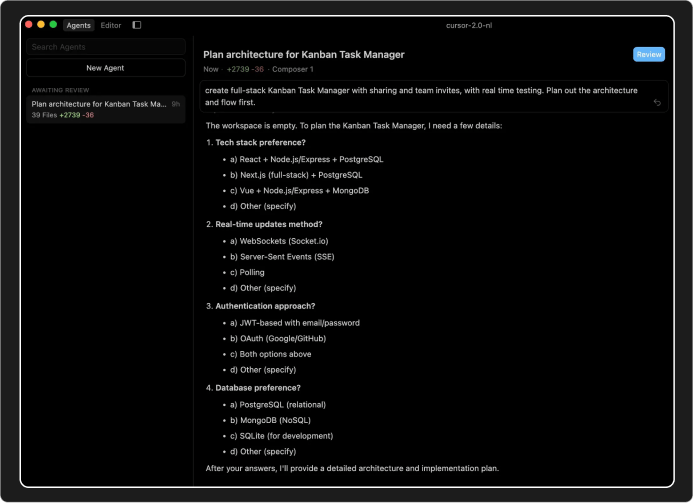

Step 1: Start with Plan mode

Don't jump straight to code.

Open Cursor. Start a new agent session. Switch to Plan Mode.

Describe what you want to build in detail. The more specific you are, the better the plan.

Bad prompt: "Build a login page."

Good prompt: "Build a login page with email/password fields, form validation using Zod, and JWT authentication that connects to our existing /api/auth endpoint. Handle token refresh. Show proper error states."
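Why does the detail matter? Because it pins down things the agent would otherwise guess, like validation rules and error states. Here's a rough sketch of what "form validation" and "proper error states" turn into once they're spelled out. It's hand-rolled TypeScript standing in for a Zod schema so it runs without dependencies, and the 8-character minimum, the messages, and the names are all my assumptions.

```typescript
// Dependency-free stand-in for a Zod login schema (illustrative only).
type LoginError =
  | { field: "email"; message: string }
  | { field: "password"; message: string };

interface LoginInput {
  email: string;
  password: string;
}

function validateLogin(input: LoginInput): LoginError[] {
  const errors: LoginError[] = [];
  // Deliberately simple email check; a real schema would be stricter.
  if (!/^[^\s@]+@[^\s@]+\.[^\s@]+$/.test(input.email)) {
    errors.push({ field: "email", message: "Enter a valid email address." });
  }
  // Assumed rule: at least 8 characters.
  if (input.password.length < 8) {
    errors.push({
      field: "password",
      message: "Password must be at least 8 characters.",
    });
  }
  return errors;
}

console.log(validateLogin({ email: "nope", password: "short" }));
```

The bad prompt leaves every one of those decisions to the agent. The good prompt makes them yours.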

Composer has access to your entire codebase through semantic search. It understands context across files. Use that. Reference existing patterns, mention specific files or functions, point to examples elsewhere in your code.

The agent will create an architectural plan. Database schema. API endpoints. Component structure. State management approach.

Review this plan carefully.

Does the database schema make sense? Are the API endpoints well-designed? Is the component structure logical? Are security considerations addressed?

If something seems off, revise the plan now. It's much easier to fix architectural issues during planning than after implementation.

Step 2: Let it cook

Once you approve the plan, tell the agent to start implementation.

It will reference the plan throughout the build. You don't need to micromanage.

You have options for execution:

Build in the foreground while you watch

Build in the background while you do other work

Run parallel agents to create multiple implementations, then review and pick the best one

The agent works methodically. Project structure. Database initialization. Authentication endpoints. Task API endpoints. React components. Frontend-backend connections. Styling.

Each complex section takes 2-3 minutes end to end, across several prompts. Composer answers each prompt in 10-20 seconds, where previous Cursor versions took 1-2 minutes per response for similar complexity.

Step 3: Use Browser testing

Once the UI is built, use the browser tool to test.

Open it with Cmd + Shift + P, type "Cursor Browser", and start.

Give specific testing instructions:

"Test the signup and login flows with valid and invalid inputs."

"Test all CRUD operations on tasks."

"Verify the UI is responsive on mobile."

The agent will automatically:

Start your dev server

Open the browser

Navigate and interact with your app

Catch bugs and visual issues

Fix problems and re-test

Let this cycle run. The agent iterates until tests pass.

This saved me at least 30 minutes on a recent project. The agent caught edge cases I wouldn't have noticed immediately.

Step 4: Review everything

After browser testing completes, review all the code.

Use the improved code review interface to see all changes.

Look for:

Code quality and patterns

Security vulnerabilities

TypeScript type safety

Error handling completeness

Consistency across the codebase

Make small adjustments where needed. Add comments. Improve types. Refine error messages.

What’s Not There (yet)

You still need to be a developer. This isn't a tool for non-technical people to build apps. You need to understand code, make architectural decisions, and review implementations. Cursor 2.0 makes you faster, not unnecessary.

Multi-agents are overkill for most projects. Running 8 parallel agents sounds impressive but is useful maybe 10% of the time. Most developers will use 2-3 agents at most.

Composer benchmarks are vague. "4x faster" without transparent comparisons is marketing, not data. The speed is real in practice, but Cursor should publish actual benchmarks.

The learning curve exists. If you're used to traditional IDEs, shifting to "managing agents" requires rethinking your workflow. It's not just Cursor with new buttons.

Closing thoughts

Cursor 2.0 is what happens when a company takes AI coding seriously instead of adding "AI features" to their existing product.

They rebuilt the interface around agents. They built their own model for speed. They added browser testing so agents can verify their own work. They created Plan Mode to prevent architectural disasters.

The workflow fundamentally shifts from typing code to planning and reviewing code. You spend less time implementing and more time making architectural decisions and verifying quality.

For developers building web apps or complex features, Cursor 2.0 is legitimately faster than any other coding tool I've used. The time savings are real. The code quality is high. The browser testing just works.

But if you're expecting to describe an app in plain English and get a production-ready startup, you'll be disappointed. This is a tool for developers to build faster, not a replacement for knowing how to code.

My honest take: If you're a developer who codes frequently, try Cursor 2.0. The Plan Mode + Browser Tool workflow alone is worth it for complex projects. If you're a casual coder or working on simple scripts, the free tier of Claude or GPT-5 is probably enough.

The AI coding revolution isn't about replacing developers. It's about making good developers 10x faster. Cursor 2.0 delivers on that promise.

Until next time,

Vaibhav 🤝
