Which AI video model are you using most right now?

Video is hard.

Harder than text and even images.

Text models have had years of iterations behind them. Video models are ~2 years old.

Despite that, 2025 delivered massive leaps in media production.

I tested 10+ video models over the past few months. Here are my winners.

🎁 A quick gift before we begin

We put together an AI Survival Hackbook for 2026: a practical guide on how AI changed work between 2019–2025, what will matter next year, and how professionals are quietly using AI for leverage (not hype).

It includes:

Clear breakdowns of what shifted in 2025

Ready-to-use prompts & workflows

Real automation ideas you can set up

It’s free for the next two weeks.

Best for Character Consistency

Kling O1 launched on December 1, 2025, as the first “unified multimodal video model with director-like memory.”

For character work, it has a slight edge over Veo 3.1.

Caveat: Not a major leap from Kling 2.5 if you're already using it. But combined with other launch week features, it's become my go-to for video generation.

Runner-up: Veo 3.1 (beautiful but less character tracking)

Best for Physics/Realism

Runway's Gen-4.5 launched in November and smashed the benchmarks: 1,247 Elo points and #1 on Artificial Analysis Text-to-Video.

Gen-4.5 maintains Gen-4's speed and delivers breakthrough quality.

Its physics understanding is scary good.

Objects move with believable force. Liquids behave realistically. Fabrics, hair, textures stay consistent even during fast or complex motion.

And its References tool solves for world consistency.

Caveat: Expensive. $0.08/image for max quality on Standard plan.

It also has its limitations: causal reasoning issues (effects before causes), object permanence problems, and a success bias where difficult actions succeed too often.

Runner-up: Sora 2 (better audio, worse control)

Best for Audio-Visual Sync

Alibaba launched WAN 2.5 in September 2025.

It has native audio generation with perfect lip-sync.

Previous AI videos had mismatched audio.

Characters' mouths not aligning with speech. Background music out of sync.

WAN 2.5 solved it through joint multimodal training.

The model generates three audio elements simultaneously: ambient sound, background music, and voice narration that matches on-screen action.

You can specify emotions, tone, rhythm, pacing, and volume.

The system delivers natural, emotionally aligned speech from whispers to dramatic shouts.

Runner-up: Veo 3 (synchronized audio but less control over specific elements)

Best for Creative Control

Runway's References tool is the unlock here: character and scene consistency without complex workflows.

Act-One (October 2024) and Act-Two (this year) enabled animating characters without motion-capture equipment.

Just you, a webcam, and your performance.

Everything Everywhere All at Once used Runway for VFX. The Late Show with Stephen Colbert uses it for editing.

Caveat: Learning curve. Best deployed with some VFX/video production knowledge.

Runner-up: Sora 2 (Storyboards feature for frame-by-frame planning)

Best for Long Videos

OpenAI launched Sora 2's duration update in October 2025.

15 seconds for all users. 25 seconds for Pro subscribers ($200/month).

It was previously capped at 10 seconds.

The Storyboards feature (launched December) lets you sketch videos second by second, frame by frame.

Pro users can also build entire narrative arcs: establishing shot, action, and payoff without constant jump-cutting.

Caveat: It still makes mistakes. OpenAI is still dealing with copyright issues.

Runner-up: Kling 2.1 (Extend feature can push to 3 minutes total)

Best for Studio Use

Production-ready outputs.

AMC Productions (studio behind ‘Everything Everywhere All at Once’) uses it already.

It is fast and controllable, with flexible generation that sits beside live-action, animated, and VFX content.

Frame-to-frame stability ensures smooth, natural motion without jitter or artifacts. Physics accuracy means objects have weight, momentum, and fluid dynamics that feel real.

Runner-up: Kling O1 (cheaper, faster, less polished for professional use)

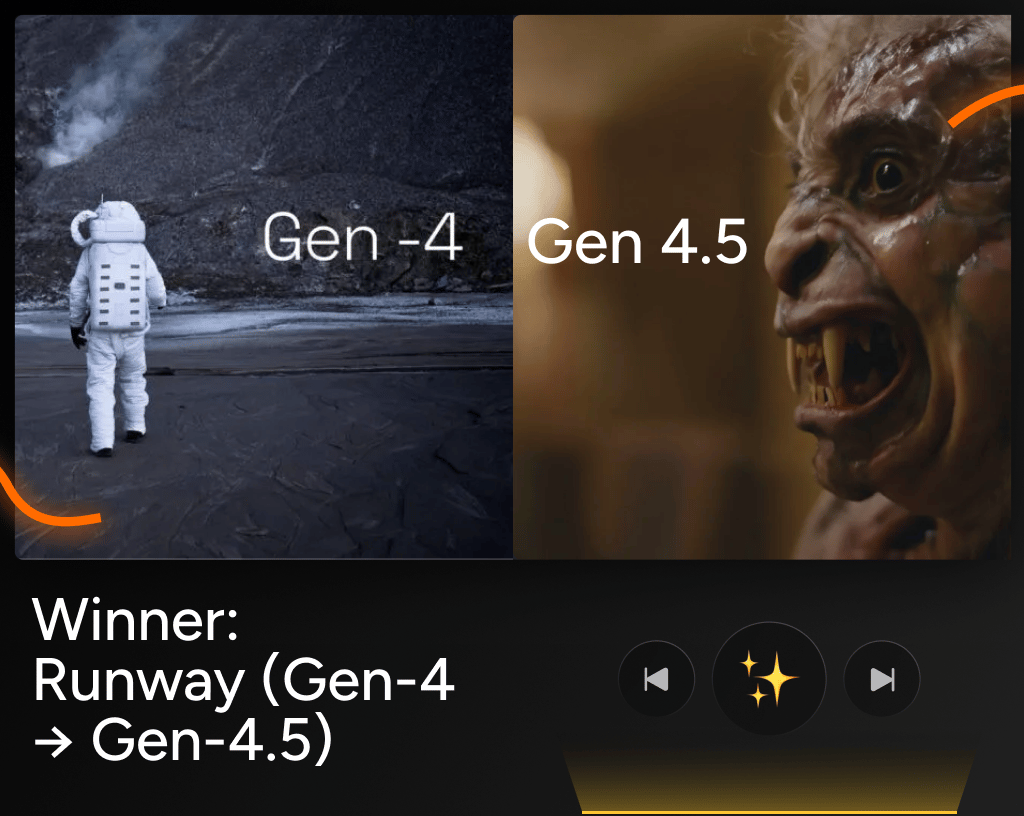

Most Improved

Timeline:

March 2025: Gen-4 launched with world consistency.

April 2025: Gen-4 Turbo released as a faster, more cost-effective version.

November 2025: Gen-4.5 hits #1 on Video Arena leaderboard.

Roughly eight months between Gen-4 and Gen-4.5.

The velocity is insane. Gen-4.5 was codenamed "David" (David and Goliath reference). "An overnight success that took seven years," according to CEO Cristóbal Valenzuela.

Runner-up: Kling (2.5 → O1 in 3 months)

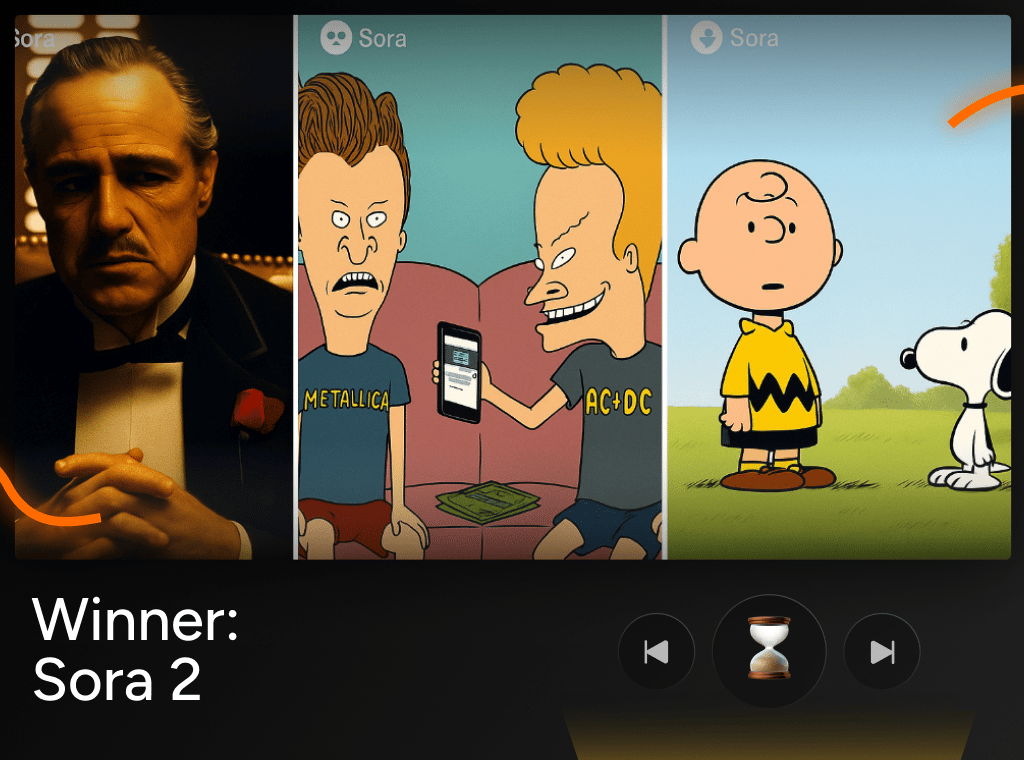

Bust of the Year

Sam Altman posted an image of the Death Star from Star Wars symbolizing "ultimate power." He called GPT-5 a "PhD-level expert in anything."

OpenAI hyped GPT-5 and Sora 2 for months.

Shortly after the launch in August, app ratings crashed to 2.9 stars.

Users described it as "boring, unpredictable, expensive, overhyped and underwhelming."

Strict content restrictions were a turnoff.

Here's the irony: Sora 2 actually improved. The video quality is too good.

But the launch execution was so bad, the hype so overblown, and the UX so frustrating that it became the poster child for 2025's "AI hype correction."

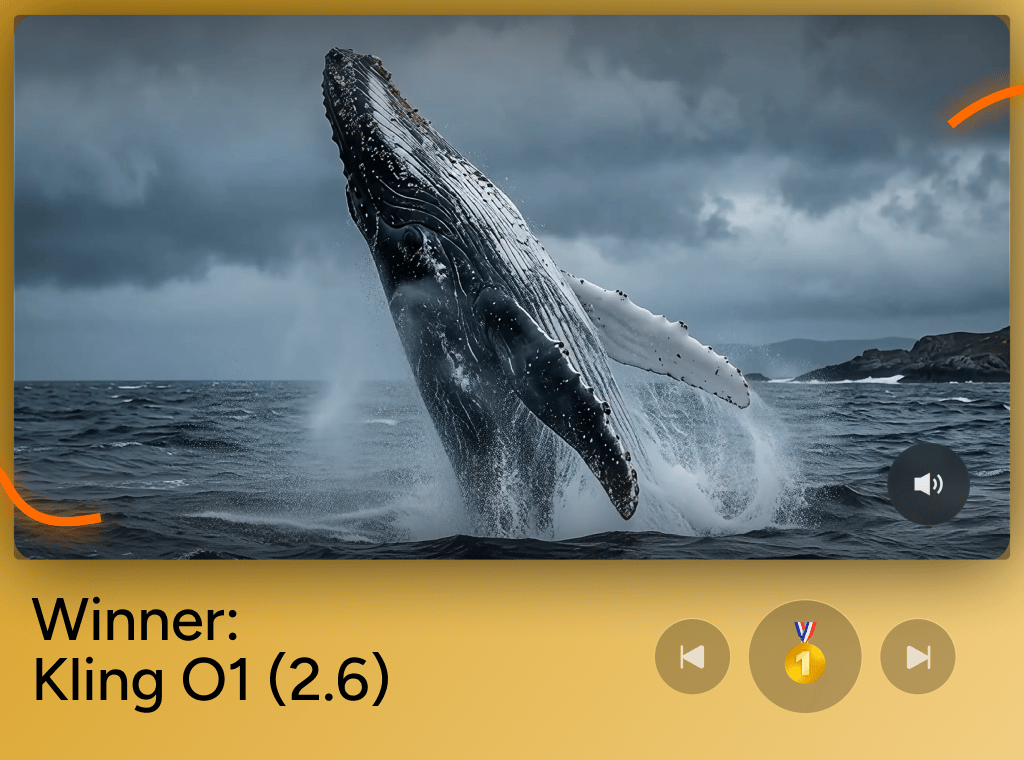

Best Overall Video Model

Kling wins on what matters for most creators: it's accessible, fast, and handles complex human movement better than anything else.

Body tracking, facial consistency, character integrity through impossible action sequences: it's very good at most things.

A proper all-rounder.

Going into 2026

2025 was the year video AI got really real.

From an entertainment perspective, we're already there.

Virtual influencers, meme creators, YouTube shorts: AI generated videos are everywhere.

I use it all the time and usually the output quality is good enough to post.

But here's what 2026 needs to solve: seamless integration into production workflows.

Right now, it's janky. Has a bad UX. And is extremely expensive.

I feel 2026 will see fewer breakthrough models and it’ll be more about optimization and incremental improvements.

But if 2026 brings another GPT-3 moment for video gen, I'm happy to be proven wrong.

Either way, it's an exciting year ahead.

Until next time,

Vaibhav 🤝🏻