
Your internet drops. In an underground metro, a basement library, that one dead zone on your commute. Happens all the time, right?

You pull out your phone. "Hey Siri, set an alarm for 6am." Nothing. It just stares back at you, useless.

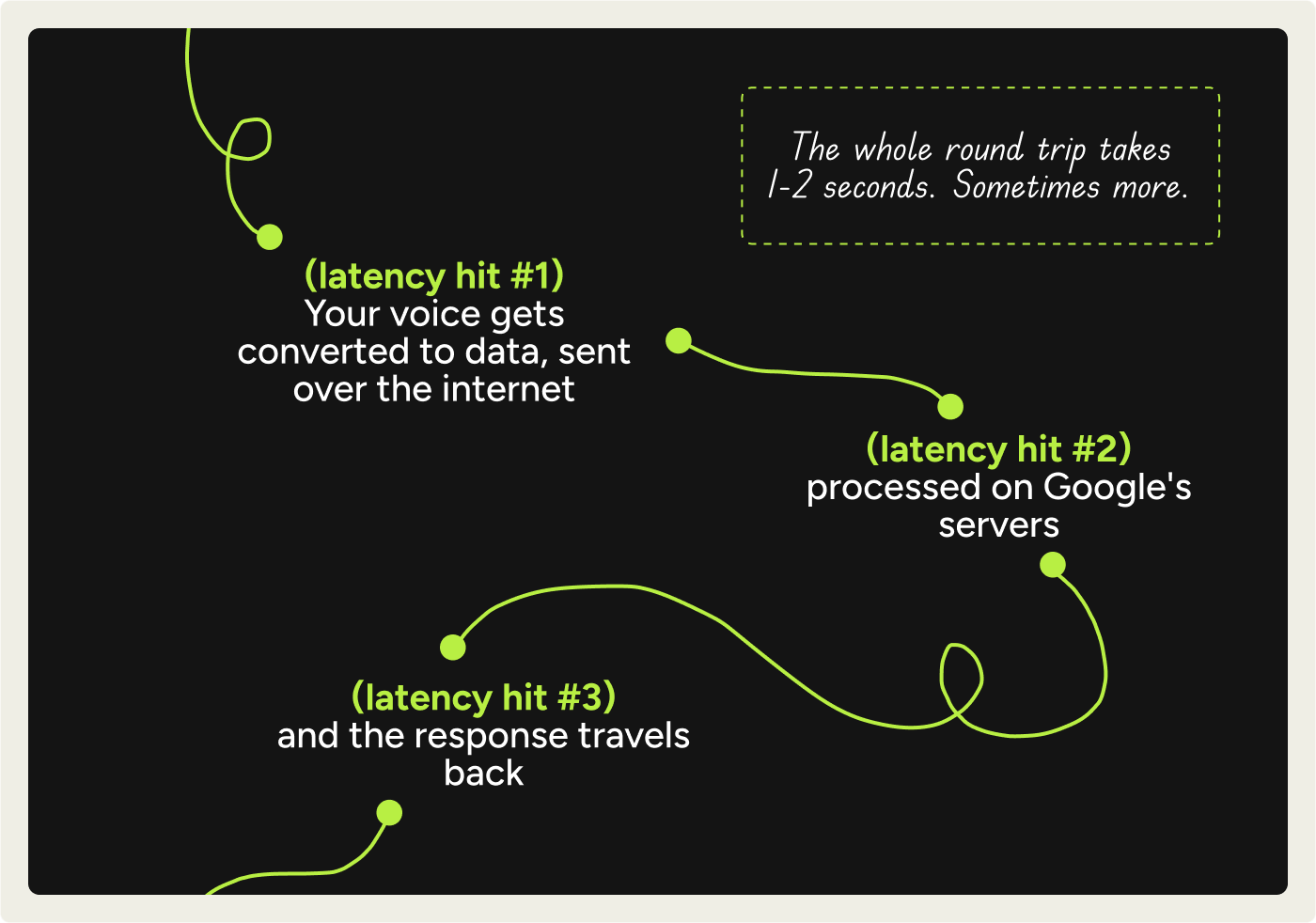

Every time you talk to Siri or Google Assistant, your voice isn't being processed on your phone.

It's being uploaded to a server farm somewhere, analyzed by massive computers, then sent back with instructions.

No internet = no brain.

Google just fixed that.

Cloud Dependency Problem

Here’s what's actually happening when you use voice assistants today:

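You say "book a meeting with Sarah at 3pm." Your phone records the audio and uploads it to a remote server. The server works out what you meant and sends instructions back. Only then does anything happen on your device.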

You're also paying for this. Not directly, but someone is. Every API call costs money.

If you're building an app, those costs add up fast.

And your data is leaving your device. Every. Single. Time.

For a lot of use cases, this is fine. If you're asking about the weather in Delhi or want to know who won the 1986 World Cup, sure, hit the cloud.

But what about setting an alarm?

It’s a local operation. It doesn’t need the internet.

It just needs to be fast, private, and reliable.

Function Calling: The Missing Piece

Let’s first understand what "function calling" means:

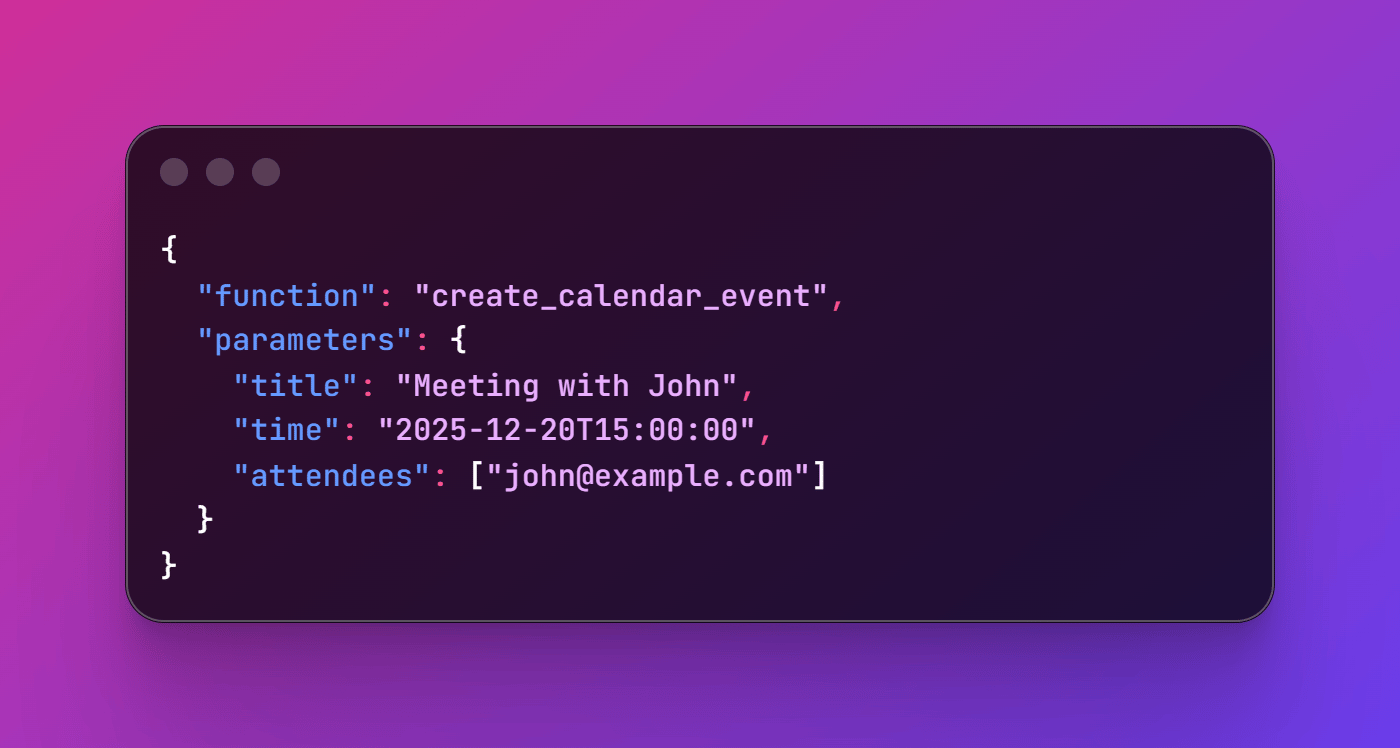

When you tell an AI "book a meeting with John at 3pm tomorrow," you don't want it to just say "okay, I'll book that meeting."

You want it to actually DO it.

That requires the AI to:

1. Understand your intent

2. Extract the relevant details (who, when, what)

3. Output structured data your app can execute

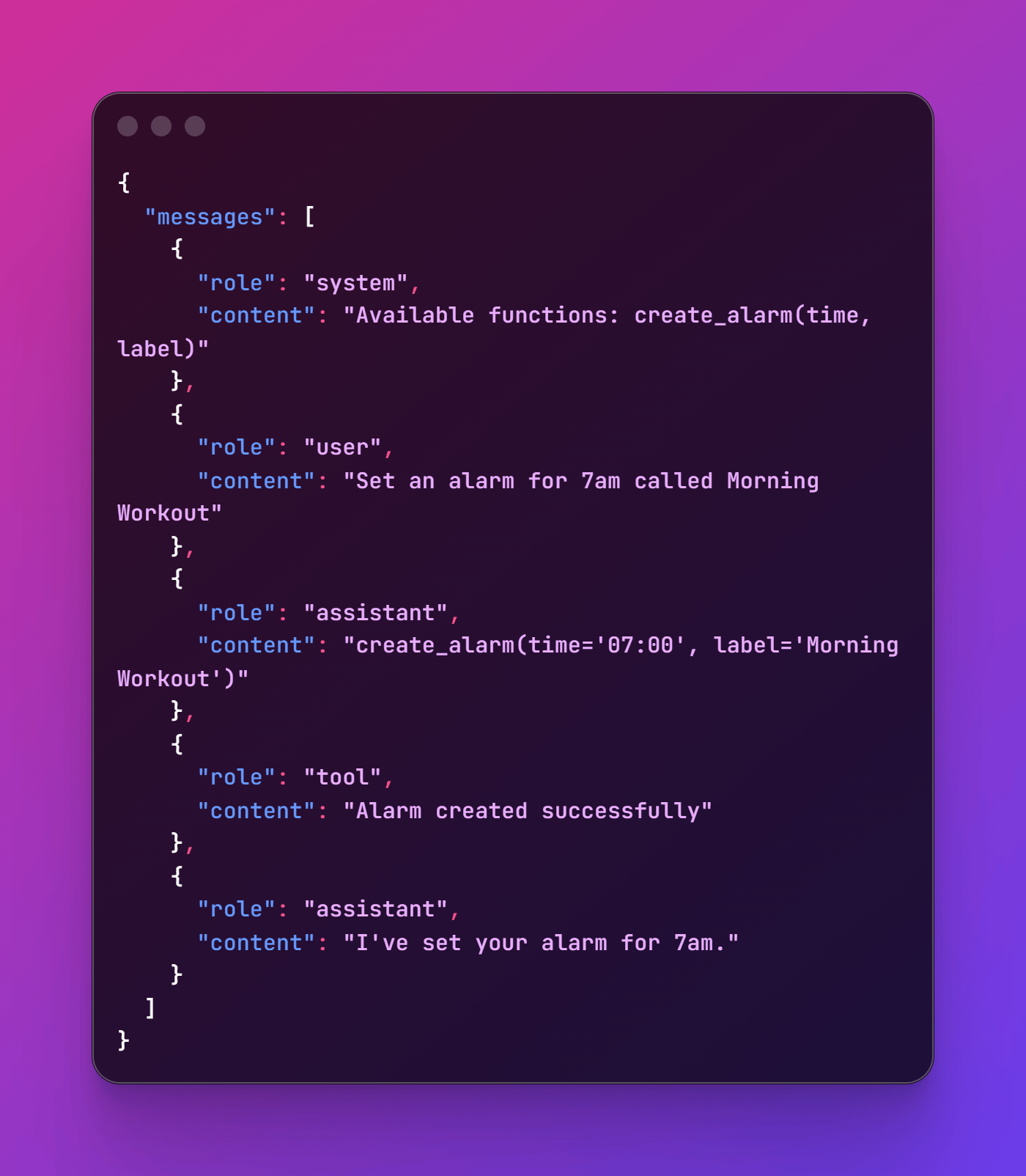

Here's what that looks like under the hood:
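A minimal sketch of the idea, assuming a hypothetical create_calendar_event function and an illustrative JSON shape. The exact output format depends on the model and its chat template, so treat the field names as placeholders:

```python
import json

# Hypothetical structured output from the model for
# "book a meeting with John at 3pm tomorrow".
# The field names are illustrative, not a fixed standard.
model_output = """
{
  "name": "create_calendar_event",
  "arguments": {
    "title": "Meeting with John",
    "attendee": "John",
    "date": "tomorrow",
    "time": "15:00"
  }
}
"""

def create_calendar_event(title, attendee, date, time):
    """Stand-in for the app's real calendar code."""
    return f"Scheduled '{title}' with {attendee} on {date} at {time}"

# Map function names the model may emit to real Python callables.
REGISTRY = {"create_calendar_event": create_calendar_event}

def dispatch(raw_output):
    """Parse the model's structured output and run the matching function."""
    call = json.loads(raw_output)
    func = REGISTRY.get(call["name"])
    if func is None:
        raise ValueError(f"Unknown function: {call['name']}")
    return func(**call["arguments"])

print(dispatch(model_output))
```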

Your code receives this, runs the actual calendar function, and boom! Meeting scheduled.

This isn't new. OpenAI does it. Anthropic does it. Google's cloud APIs do it.

What's new is doing it on your phone. Without internet. In milliseconds.

FunctionGemma: 270 million reasons to care

Google just released FunctionGemma. It's a 270 million parameter model that does function calling entirely on-device.

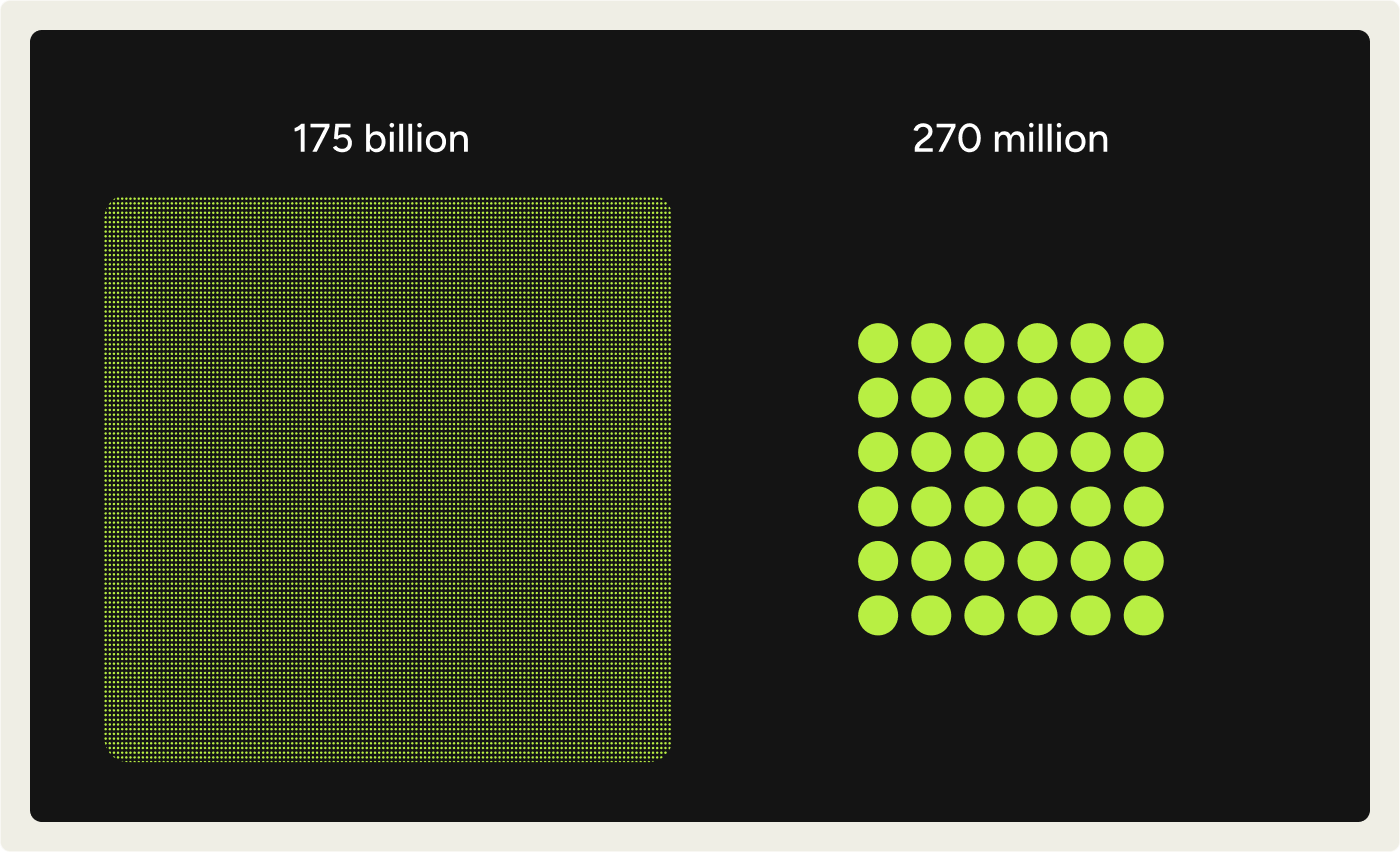

To put that in perspective: GPT-3, the model family the original ChatGPT grew out of, has 175 billion parameters. That's roughly 650 times larger.

So it's tiny. Small enough to run on your phone without killing your battery. Specialized enough to be really good at one specific thing.
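For a rough feel of what "tiny" means in memory terms, here is a back-of-the-envelope sketch. It counts weights only (no activations or cache), and the precision options are assumptions for illustration, not a statement of how FunctionGemma is actually shipped:

```python
def weight_memory_mb(num_params, bytes_per_param):
    """Approximate memory for model weights only, in megabytes (10^6 bytes)."""
    return num_params * bytes_per_param / 1_000_000

PARAMS = 270_000_000  # FunctionGemma's parameter count, as stated above

# Bytes per parameter at a few common precisions (illustrative choices).
for label, size in [("16-bit", 2), ("8-bit", 1), ("4-bit", 0.5)]:
    print(f"{label}: ~{weight_memory_mb(PARAMS, size):.0f} MB")
```

Even at 16-bit precision the weights come to about 540 MB by this estimate, which is why a model this size is plausible on a phone.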

And the results speak for themselves.

Base accuracy on mobile actions: 58%

Not great. But here's where it gets interesting.

After fine-tuning for specific functions: 85%

It means you can take this general model and teach it YOUR specific API surface (your functions, your app's actions) and get reliable, production-ready performance.

What Changes

Think about the applications that suddenly become viable:

Voice assistants that work everywhere. No internet? Okay. Your phone's smart home controller still understands "turn off the bedroom lights," and your expense tracker still logs "spent fifteen dollars on coffee."

Gaming with natural language. Right now, if you want NPCs in a game to understand natural speech, you need network calls. That's too slow for real-time gameplay. But if the AI runs locally, you can process commands at 60fps. "Guard the north entrance" or "trade me your best sword" just... work!

Privacy-first applications. Medical apps. Finance trackers. Therapy journaling. Anything where users don't want their data touching a server. Because FunctionGemma runs on the device, it has no need to send anything off it.

Apps that don't break. Your internet goes down. Your smart home still works. Your voice-controlled productivity tools keep running. Your alarm system doesn't stop understanding you.

The training setup

If you want to build something, here’s what you need to know:

Ben Burtenshaw from Hugging Face released a complete training pipeline the same day FunctionGemma launched.

Working code you can run today.

Three ways to fine-tune:

Path 1: Google Colab. Free GPU runtime. Takes about 30 minutes to fine-tune on the sample dataset. Perfect for experimenting.

Path 2: Hugging Face Jobs. Single-file script that runs on HF infrastructure. You specify the GPU tier, pass your token, and the job handles everything else.

Path 3: Local with uv. Run "uv run sft-tool-calling.py" and it installs dependencies automatically. No manual environment setup.

The training uses LoRA (Low-Rank Adaptation). You don’t need to retrain all 270 million parameters.

You just add small adapter layers that specialize the model for your specific functions.
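To see why adapters are so much cheaper to train, here is a small arithmetic sketch. LoRA replaces the update to a weight matrix of shape (d_out x d_in) with two thin matrices of rank r. The layer size and rank below are illustrative assumptions, not FunctionGemma's actual dimensions:

```python
def lora_trainable_params(d_in, d_out, rank):
    """Parameters in one LoRA adapter: A is (rank x d_in), B is (d_out x rank)."""
    return rank * d_in + d_out * rank

def full_params(d_in, d_out):
    """Parameters in the full weight matrix the adapter sits next to."""
    return d_in * d_out

# Illustrative layer size and rank; NOT FunctionGemma's real dimensions.
d_in, d_out, rank = 1024, 1024, 8

full = full_params(d_in, d_out)
lora = lora_trainable_params(d_in, d_out, rank)
print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
```

With these made-up numbers the adapter trains 16,384 values instead of 1,048,576 for that layer, under 2% of the original.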

The critical detail: dataset format.

FunctionGemma expects conversations structured like this:
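As a rough illustration only: many tool-calling datasets use a chat-style record like the one below. The field names (tools, messages, tool_calls) and the set_alarm function are assumptions borrowed from a common convention, not confirmed FunctionGemma specifics, so check the Mobile Actions dataset for the authoritative layout. The sketch also includes a small sanity check you could run over your own records:

```python
# Illustrative training record. Field names follow a common chat/tool-calling
# convention and are ASSUMPTIONS, not FunctionGemma's confirmed schema.
example = {
    "tools": [
        {
            "name": "set_alarm",  # hypothetical function
            "description": "Set an alarm at a given time.",
            "parameters": {
                "type": "object",
                "properties": {"time": {"type": "string"}},
                "required": ["time"],
            },
        }
    ],
    "messages": [
        {"role": "user", "content": "Set an alarm for 6am."},
        {
            "role": "assistant",
            "tool_calls": [
                {"name": "set_alarm", "arguments": {"time": "06:00"}}
            ],
        },
    ],
}

def check_record(record):
    """Return a list of problems found in one training record."""
    problems = []
    declared = {t["name"]: t for t in record.get("tools", [])}
    for msg in record.get("messages", []):
        for call in msg.get("tool_calls", []):
            tool = declared.get(call["name"])
            if tool is None:
                problems.append(f"undeclared function: {call['name']}")
                continue
            for arg in tool["parameters"].get("required", []):
                if arg not in call["arguments"]:
                    problems.append(f"{call['name']} missing argument: {arg}")
    return problems

print(check_record(example))
```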

Get this format wrong and the model won't learn the pattern.

Burtenshaw's dataset shows you exactly how to structure it. If you're building custom function calling, study his examples before creating training data.

The Limitations

FunctionGemma won't write your essay, explain quantum physics or handle complex reasoning chains and nuanced intent.

And that's the entire point.

You don't need a genius to turn on your flashlight.

You need something fast, reliable, and specialized.

Cloud models will always have more general knowledge and handle edge cases better.

But if your app has 20 well-defined functions with clear schemas, a 270M specialized model running on the device can call them faster, cheaper, and more privately than a round trip to a cloud API.

The 58% → 85% accuracy jump after fine-tuning backs this up.

General capability is useful. Specialized training for your specific use case is more useful.

Build it this weekend

The model is live on Hugging Face and Kaggle. The training guides are public. The Mobile Actions dataset is available.

Download the Google AI Edge Gallery app and try the Mobile Actions demo.

See what 270 million parameters of specialized function calling feels like on your phone.

Then pick a project:

1. Privacy-first expense tracker. User says "log lunch twenty dollars cash." Model calls add_expense(amount=20, category="food", payment="cash"). Everything stored locally in SQLite. No server. No API fees. No data leaving the device.

2. Offline smart home fallback. When your internet dies, voice control shouldn't. Build a command router that maps natural language to home automation functions. Lights, thermostat, locks. Works in airplane mode.

3. Voice-controlled study timer. "Start a 25-minute focus session." "Take a 5-minute break." "Log this study session for Biology." All offline. All instant. Perfect for library basements with no signal.

4. App launcher by intent. "Open Spotify." "Start Maps to home." "Call Mom." Map natural language to open_app(app_id) or start_call(contact). Tiny function surface. Very reliable. Way faster than hunting through your app drawer.

5. Smart toggle controller. WiFi, Bluetooth, flashlight, Do Not Disturb. Just toggles. Function: set_toggle(name, state). Sounds simple, but it feels powerful because it's instant and works offline.
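To make the first project concrete, here is a minimal sketch of the app-side function the model would call, using Python's built-in sqlite3 module. The table layout and the open_db helper are assumptions for illustration, and the extra conn parameter on add_expense is something your dispatcher would supply rather than the model:

```python
import sqlite3

def open_db(path=":memory:"):
    """Open (or create) the local expense database. Hypothetical helper."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS expenses ("
        "id INTEGER PRIMARY KEY, amount REAL NOT NULL, "
        "category TEXT NOT NULL, payment TEXT NOT NULL)"
    )
    return conn

def add_expense(conn, amount, category, payment):
    """Store one expense locally and return its row id."""
    cur = conn.execute(
        "INSERT INTO expenses (amount, category, payment) VALUES (?, ?, ?)",
        (amount, category, payment),
    )
    conn.commit()
    return cur.lastrowid

# Simulate the call the model would produce for "log lunch twenty dollars cash".
conn = open_db()
row_id = add_expense(conn, amount=20, category="food", payment="cash")
print(conn.execute("SELECT amount, category, payment FROM expenses").fetchall())
```

Swap ":memory:" for a file path to persist the data on the device between runs.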

The Bigger Picture

For the last decade, the answer to "where should AI processing happen?" has been "the cloud." Bigger models, more compute, better results.

That made sense when edge devices were weak and models were massive.

But phones are powerful now. And we've learned how to make small, specialized models that are really good at specific tasks.

FunctionGemma is proof that you can run useful AI entirely on-device. Fast enough for real-time applications. Accurate enough for production use. Private enough for sensitive data.

Ready to build?

Try the model first: Download FunctionGemma on Hugging Face and run it locally in under 5 minutes. A Kaggle version is also available if you prefer notebooks.

Want to fine-tune it? Ben Burtenshaw's complete training setup includes working code for Colab, Hugging Face Jobs, and local execution. Everything you need in one repo.

Need training data? The Mobile Actions dataset shows you exactly how to structure conversations for function calling. Study this before building your own dataset.

Full documentation: Google's official guide covers deployment options, optimization tips, and integration examples.

The tools are ready. The training is straightforward. The use cases are everywhere.

What's missing is what you'll make with it.

Until next time,

Vaibhav 🤝🏻
