
The past two weeks, I’ve spent more time wrestling with eval pipelines, caching behavior, flaky agent outputs, and hallucinating UI generators

…than actually writing prompts.

This edition is my field notes for engineers and product folks trying to use LLMs in real systems instead of demos.

Why does this exist at all?

I thought building AI products meant “prompting until it works.”

Then two things hit me at once:

The same model behaved differently on Tuesday vs Friday.

My cost charts made no sense; identical requests had very different latencies.

Both problems traced back to one root failure: I kept building systems the way we build deterministic software, while depending on a non-deterministic engine.

Two capabilities separate hobbyism from engineering here:

Evals (how do we know the model works?)

Prompt caching (how does inference actually run under the hood, and how do we not pay twice for the same work?)

These are unglamorous foundations that determine whether your AI system scales or collapses.

The two silent failure modes: guessing and waste

Guessing (a.k.a. vibes-driven development)

Most teams change a prompt, eyeball five examples, decide “looks good,” and ship.

It feels scientific right up until someone asks: “What exactly improved, and how do you know?”

Without evals, all AI tuning is theatre.

Waste (a.k.a. you unknowingly burn compute)

I assumed caching worked inside a conversation: change a prompt, and the system reuses the old work.

Wrong.

Prompt caching is content-addressable, not session-bound.

Whether you get a cache hit depends on whether an earlier request (possibly from another user) sent the same prefix recently enough that the cached entry is still alive.

Once you understand that, prompt design stops being “copy tweaking” and turns into operating system-like thinking.

Evals: how to replace vibes with evidence

Good evals start with error analysis.

The simplest workflow:

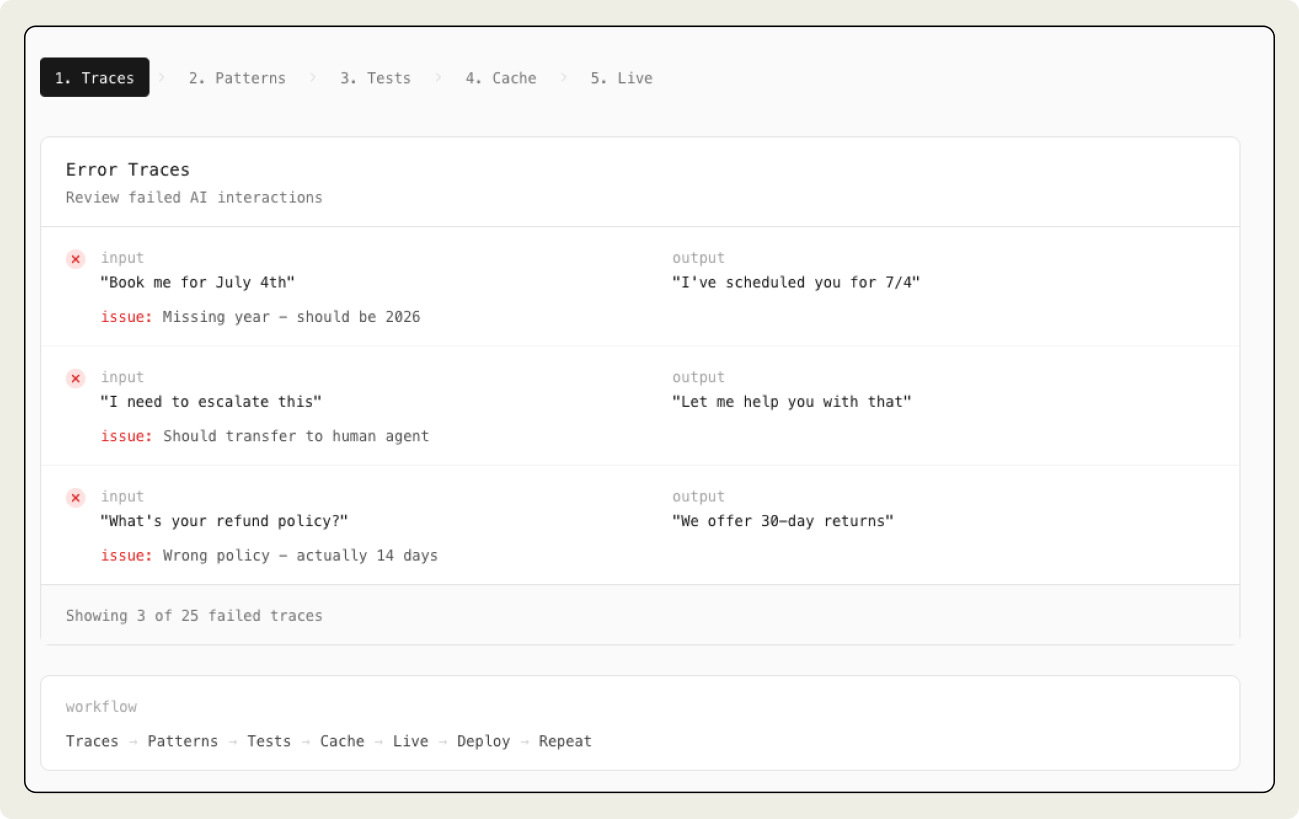

Step 1: Inspect traces

You need a trace viewer. LangSmith, Phoenix, or Braintrust all work, but a minimal internal one is often better.

Scroll 100–200 interactions.

Write raw, descriptive notes: “The agent ignored an obvious escalation point”, “Tone mismatch on price pushback”, “Hallucinated policy that doesn’t exist.”

This is open coding: observing without predefined buckets.

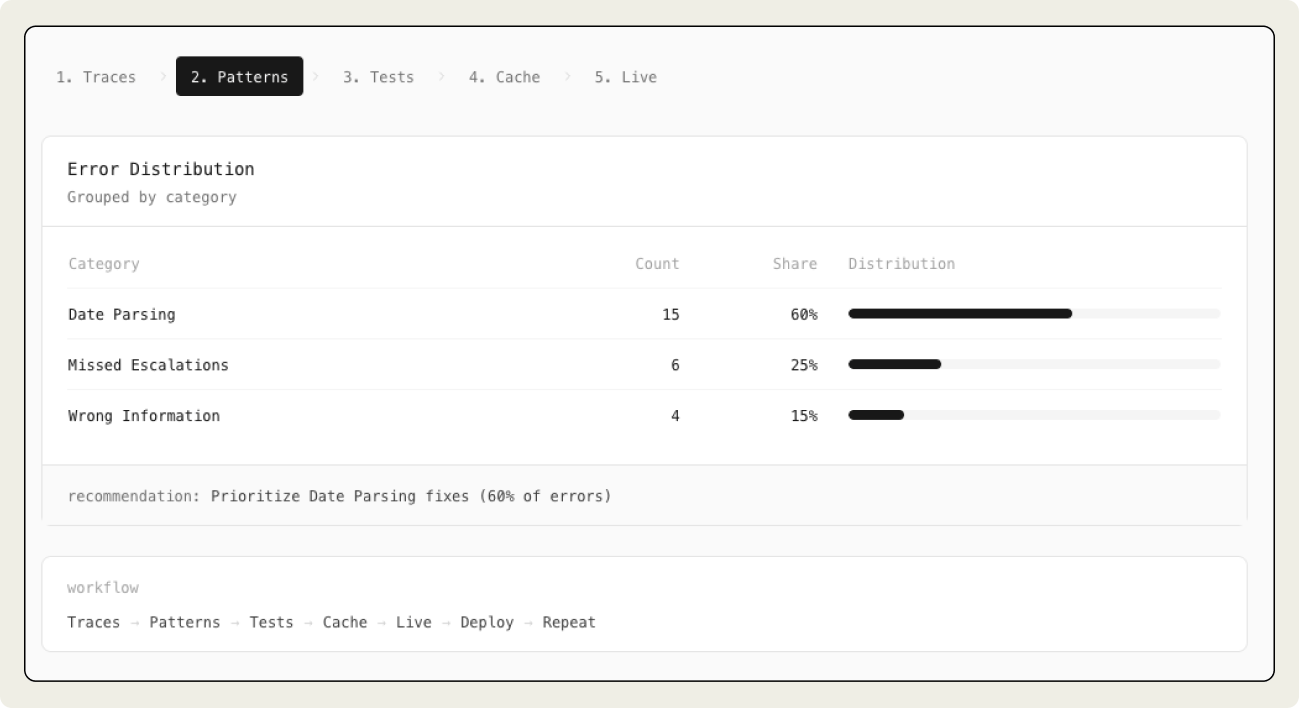

Step 2: Group notes

Cluster observations until 5-10 categories emerge:

Date parsing errors

Handoff timing failures

Repetition loops

Hallucinated context

Missed re-engagement

This turns qualitative pain into a fault taxonomy.

Step 3: Prioritise with counts

A pivot table shows where the effort should go:

60 percent → date logic

25 percent → handoff failures

15 percent → repetition loops

Focus becomes statistical, not emotional.
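If you don’t want to open a spreadsheet, the same pivot is a few lines of Python. This is a minimal sketch: the notes and category names are made up for illustration, and `prioritise` is a hypothetical helper, not part of any tool mentioned above.

```python
from collections import Counter

# Hypothetical open-coding notes, each already tagged with a category from Step 2.
notes = (
    [("Parsed a relative date into the wrong week", "date_parsing")] * 12
    + [("Escalated to a human two turns too late", "handoff_timing")] * 5
    + [("Asked the same clarifying question again", "repetition_loop")] * 3
)


def prioritise(tagged_notes):
    """Return (category, count, share_percent) tuples, most frequent first."""
    counts = Counter(category for _, category in tagged_notes)
    total = sum(counts.values())
    return [(cat, n, round(100 * n / total)) for cat, n in counts.most_common()]


if __name__ == "__main__":
    for category, count, share in prioritise(notes):
        print(f"{share:>3}% ({count:>2} notes) -> {category}")
```

The point is the sort order: whatever lands at the top is what you build evals for first.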

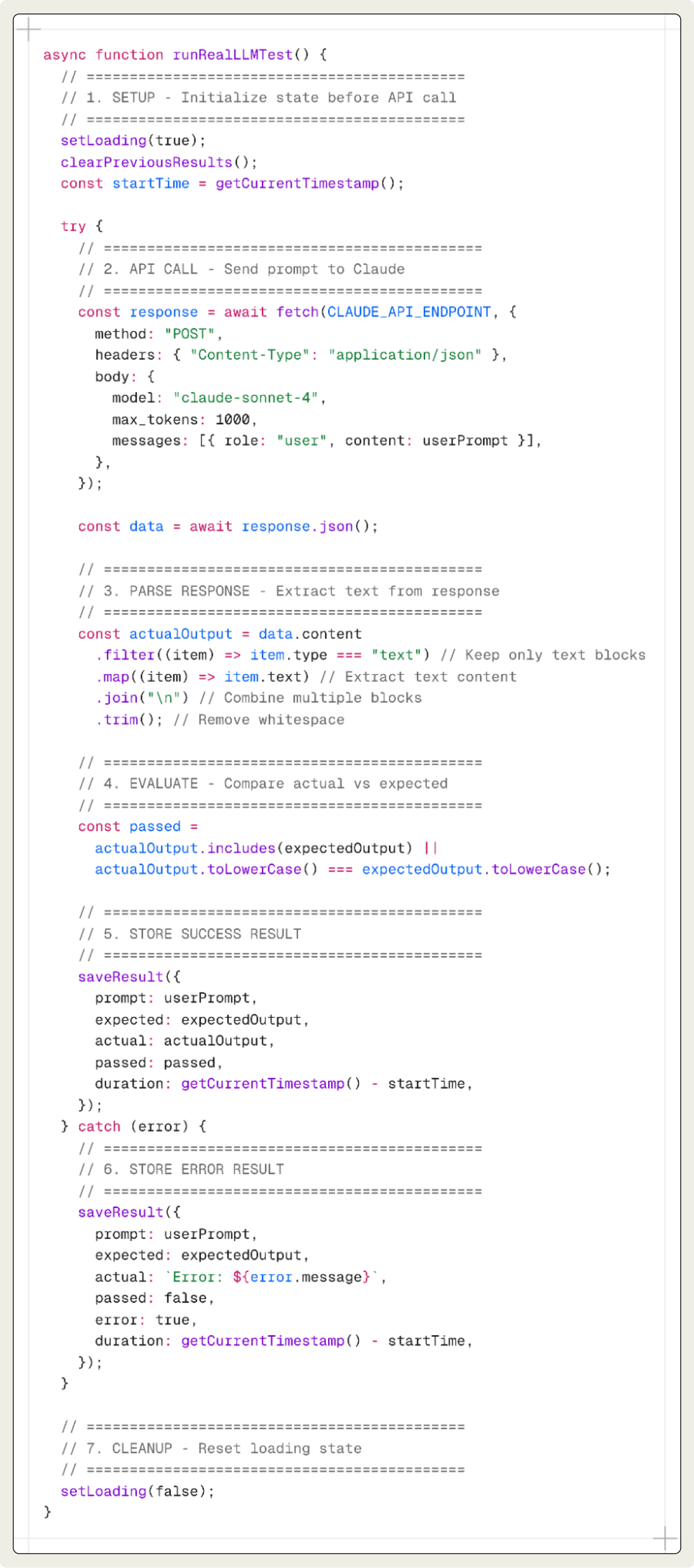

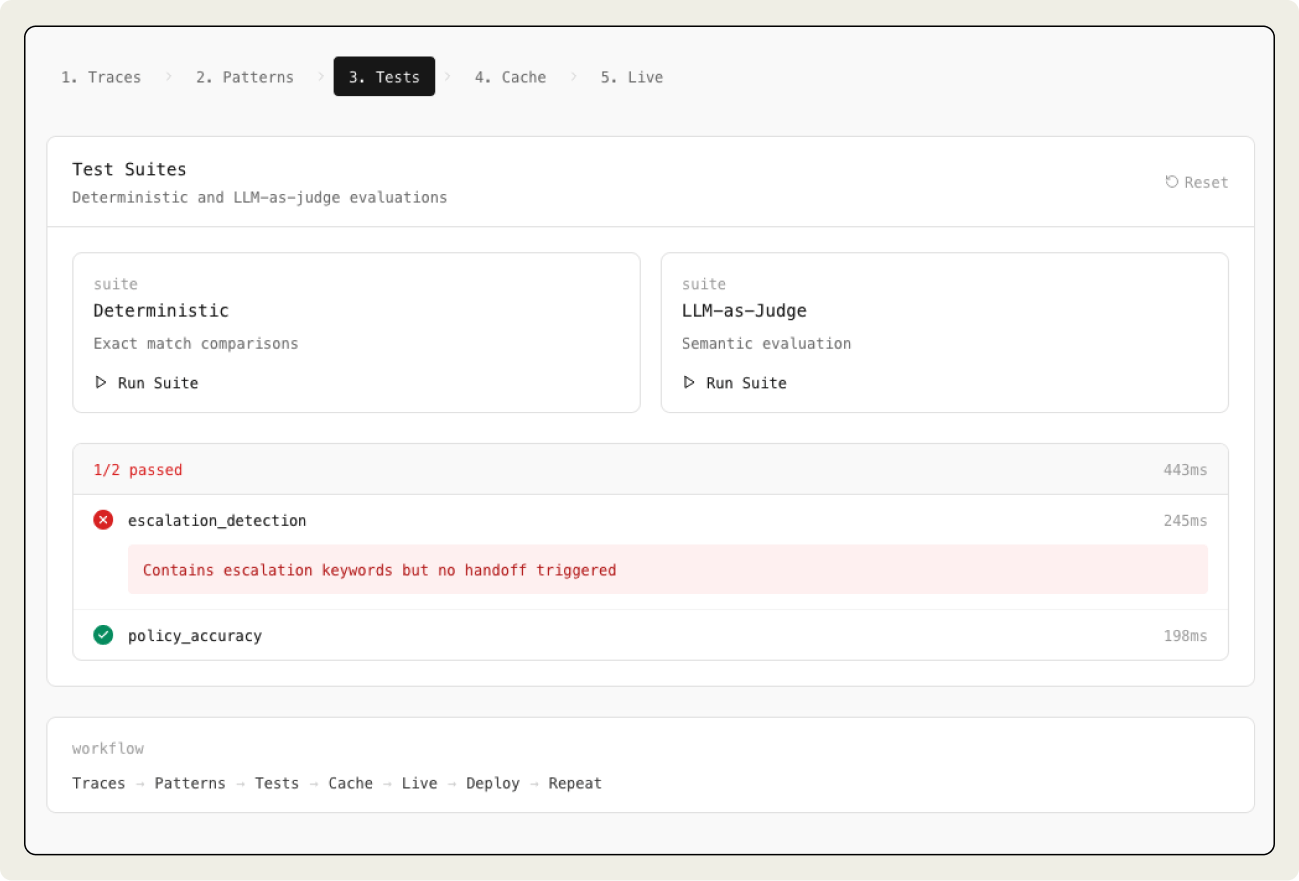

Step 4: Build evals

Two kinds matter:

(a) Deterministic failures → code-based evals. If there is exactly one right answer (e.g., “extract July 4, 2026 from this sentence”), assert outputs using a golden dataset.

(b) Subjective failures → LLM-as-judge. Where humans disagree, build:

A labelled dataset of PASS/FAIL decisions

The reasoning notes behind those labels

A judge prompt that applies that reasoning consistently

Binary PASS/FAIL forces clarity in a way 1–5 scoring never does.
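Before trusting a judge prompt, it helps to check how often it agrees with your human labels. Here is a rough sketch of that check. Everything in it is an assumption for illustration: the labelled examples are invented, and `stub_judge` is a placeholder for a real LLM call that returns PASS or FAIL.

```python
# Hypothetical labelled set: (model output, human verdict, reasoning note).
LABELLED = [
    ("Per our policy, refunds take 90 days.", "FAIL", "States a policy that does not exist"),
    ("I can connect you with a specialist now.", "PASS", "Escalates at the right moment"),
    ("The price is final, take it or leave it.", "FAIL", "Tone mismatch on price pushback"),
    ("Happy to walk you through the pricing options.", "PASS", "Appropriate tone"),
]


def stub_judge(output: str) -> str:
    """Placeholder for a real judge call; flags any output that mentions a policy."""
    return "FAIL" if "policy" in output.lower() else "PASS"


def judge_agreement(examples, judge):
    """Compare judge verdicts with human labels.

    Reports recall on human FAILs and human PASSes separately, because a judge
    that always answers PASS can still score well on raw agreement when the
    dataset is mostly PASS.
    """
    agree = fail_total = fail_caught = pass_total = pass_kept = 0
    for output, human, _note in examples:
        verdict = judge(output)
        if verdict not in ("PASS", "FAIL"):
            raise ValueError(f"judge returned a non-binary verdict: {verdict!r}")
        agree += verdict == human
        if human == "FAIL":
            fail_total += 1
            fail_caught += verdict == "FAIL"
        else:
            pass_total += 1
            pass_kept += verdict == "PASS"
    return {
        "agreement": agree / len(examples),
        "fail_recall": fail_caught / fail_total if fail_total else None,
        "pass_recall": pass_kept / pass_total if pass_total else None,
    }


if __name__ == "__main__":
    print(judge_agreement(LABELLED, stub_judge))
```

The stub misses the tone failure, which is exactly the kind of gap this check is meant to surface before the judge goes anywhere near CI.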

Figure 1.0: Building deterministic Evals

Figure 2.0: Building Subjective Failures

Step 5: Integrate into CI

Run deterministic evals on every commit. Run judge-based evals nightly or pre-deployment.

That’s how AI development becomes repeatable.
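For the deterministic side, a golden-dataset check can be an ordinary test file. A minimal sketch, assuming a pytest-style runner picks up `test_date_extraction`; `extract_date` is a hypothetical stand-in for the system under test (here a regex, in practice the call into your model pipeline), and the golden cases are invented.

```python
import re
from datetime import date

MONTHS = {
    name: number
    for number, name in enumerate(
        ["January", "February", "March", "April", "May", "June", "July",
         "August", "September", "October", "November", "December"],
        start=1,
    )
}


def extract_date(text: str):
    """Hypothetical system under test; a real version would call the model."""
    match = re.search(r"\b([A-Z][a-z]+) (\d{1,2}), (\d{4})\b", text)
    if not match or match.group(1) not in MONTHS:
        return None
    return date(int(match.group(3)), MONTHS[match.group(1)], int(match.group(2)))


# Golden dataset: each input has exactly one right answer.
GOLDEN = [
    ("Let's meet on July 4, 2026 at noon.", date(2026, 7, 4)),
    ("The invoice is due December 31, 2025.", date(2025, 12, 31)),
    ("No date was mentioned in this message.", None),
]


def run_golden(cases, fn):
    """Return failing cases as (input, expected, actual) tuples."""
    failures = []
    for text, expected in cases:
        actual = fn(text)
        if actual != expected:
            failures.append((text, expected, actual))
    return failures


def test_date_extraction():
    # Any mismatch fails the test, and therefore the commit.
    failures = run_golden(GOLDEN, extract_date)
    assert not failures, failures
```

Returning the failing cases instead of a bare boolean means the CI log tells you which inputs regressed.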

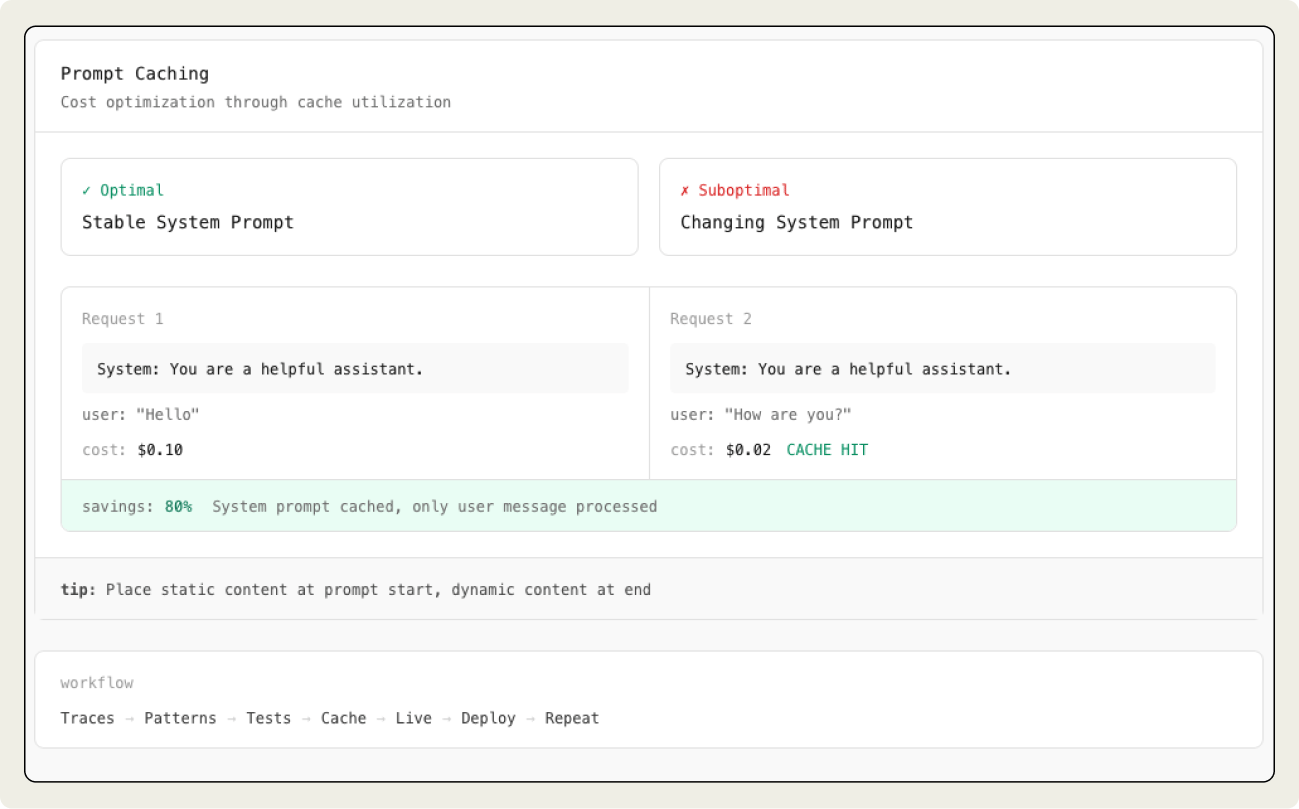

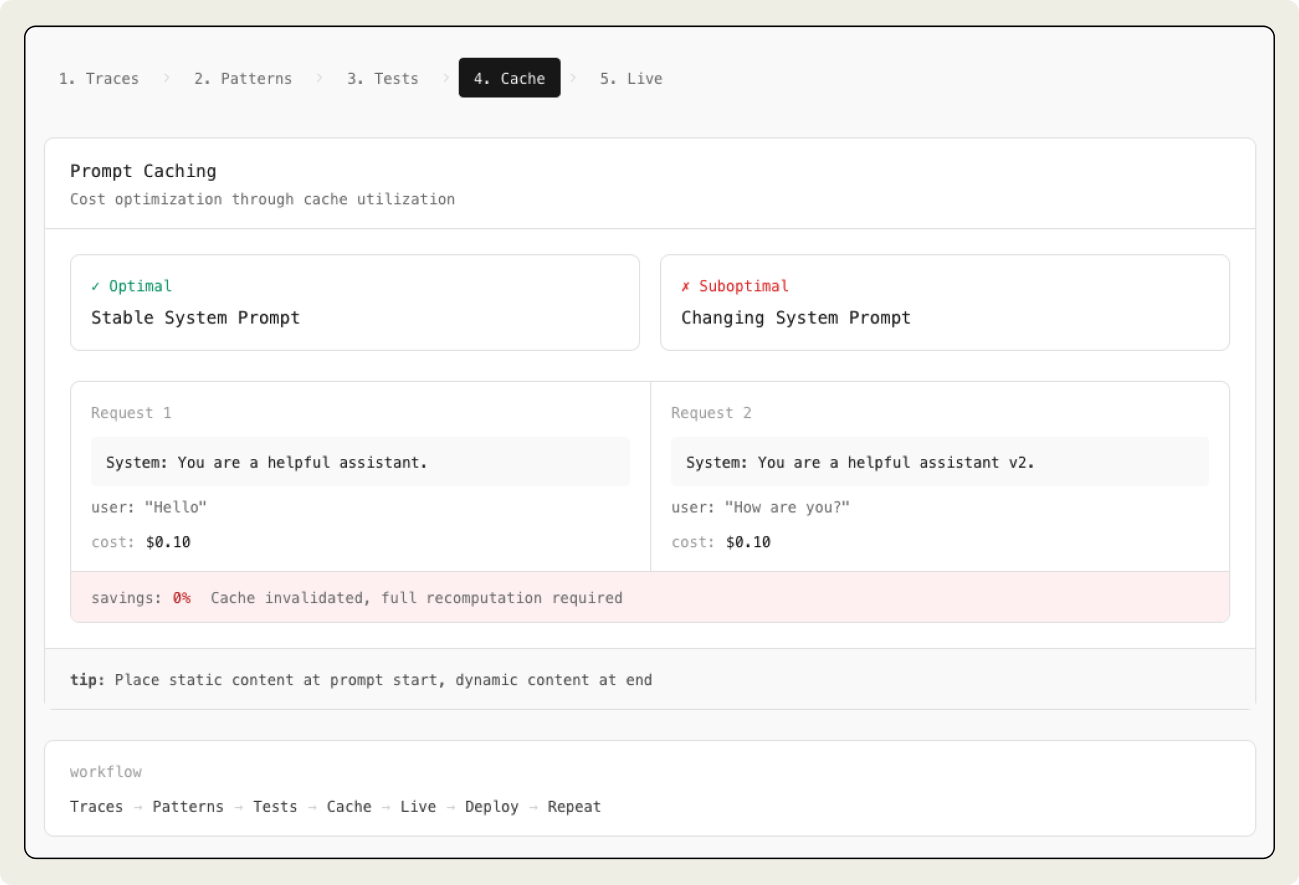

Prompt caching: why your intuition is wrong

Caching is not “remember this chat.” It’s actually “reuse KV tensors for identical prefixes across any request.”

Two common misconceptions:

Misconception 1: caching works at the request level

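Reality: it works across users, across time, across workloads. If ten customers share a system prompt, only the first request pays the full cost of processing it; the rest reuse the cached prefix. (On hosted APIs this sharing typically stays within a single account or organization.)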

Misconception 2: caching saves outputs

Reality: engines reuse KV cache blocks, the precomputed attention key/value tensors for each prompt token. The output itself is still generated fresh every time.

This works because inference engines behave more like OS schedulers than single-session processes.

Why do engineers need to care?

Because caching radically changes cost curves:

If your system prompt is stable → the cached input tokens can be billed 5x–10x cheaper, depending on the provider.

If your system prompt mutates per user → you break the hash chain → zero reuse.
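To make the cost curve concrete, here is a back-of-the-envelope sketch. The token counts, the price unit, and the 10% cached rate are illustrative assumptions, not any provider’s actual pricing, and the sketch ignores any surcharge a provider may apply when the cache is first written.

```python
def input_cost(prefix_tokens, suffix_tokens, price_per_token, cached_rate, cache_hit):
    """Input-token cost of one request.

    cached_rate is the fraction of the normal price charged for cached tokens
    (0.1 means cached tokens cost 10% of the normal price). Only the stable
    prefix can be served from cache; the suffix is always billed in full.
    """
    prefix_price = price_per_token * (cached_rate if cache_hit else 1.0)
    return prefix_tokens * prefix_price + suffix_tokens * price_per_token


if __name__ == "__main__":
    miss = input_cost(9_000, 1_000, 1.0, 0.1, cache_hit=False)
    hit = input_cost(9_000, 1_000, 1.0, 0.1, cache_hit=True)
    print(f"miss={miss:.0f} units, hit={hit:.0f} units, ratio={miss / hit:.1f}x")
```

Notice that the saving depends on how much of the request is stable prefix. A long shared prefix with a short per-user suffix is where the big multiples come from.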

A few pragmatic rules (the ones that actually matter):

The longest stable prefix is the only thing that caches. If you insert, edit, or reorder anything inside it, everything from that point on misses.

Context must be append-only. Deleting old tool outputs destroys prefix compatibility.

Serialization must be deterministic. JSON key ordering differences produce cache misses.

Tool definitions belong at the start, or must be appended in a consistent position. Move them and you break everything downstream.
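Here is a small sketch of what those rules look like in code. The function names (`canonical`, `build_prompt`, `shared_prefix_len`) are my own illustrations, and it compares characters as a stand-in for tokens, which is an approximation since real caches match on token sequences.

```python
import json


def canonical(obj) -> str:
    """Serialize with sorted keys and fixed separators so equal data yields equal text."""
    return json.dumps(obj, sort_keys=True, separators=(",", ":"), ensure_ascii=False)


def build_prompt(system_prompt, tools, history):
    """Stable parts first (system prompt, tool definitions), then append-only history."""
    parts = [system_prompt, canonical(tools)]
    parts.extend(canonical(turn) for turn in history)
    return "\n".join(parts)


def shared_prefix_len(a: str, b: str) -> int:
    """Number of leading characters two prompts have in common."""
    count = 0
    for x, y in zip(a, b):
        if x != y:
            break
        count += 1
    return count


if __name__ == "__main__":
    system = "You are a support agent."
    tools = [{"name": "lookup_order", "args": {"order_id": "string"}}]
    turn_1 = {"role": "user", "content": "Where is my order?"}
    turn_2 = {"role": "tool", "content": "Order shipped."}

    before = build_prompt(system, tools, [turn_1])
    after = build_prompt(system, tools, [turn_1, turn_2])
    print("append-only keeps the old prompt as a prefix:", after.startswith(before))

    personalised_a = build_prompt("Hello Ana. " + system, tools, [turn_1])
    personalised_b = build_prompt("Hello Bob. " + system, tools, [turn_1])
    print("shared characters with per-user greeting:",
          shared_prefix_len(personalised_a, personalised_b))
```

Putting the user’s name at the very top leaves only the greeting word in common, while appending turns keeps the entire earlier prompt reusable.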

Viewed this way, caching is more like memory paging:

Providers preallocate fixed block storage

Token chunks hash into content-addressed KV blocks

Requests reuse blocks via hash lookup

Missing blocks are computed only once

Understanding this helps you architect prompts the way DBAs architect indexes.
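The paging analogy is easier to see in a toy model. This sketch is an assumption-heavy illustration, not any provider’s implementation: the block size, the SHA-256 chaining scheme, whitespace “tokens”, and the `ToyPrefixCache` class are all invented for the demo, and there is no eviction.

```python
import hashlib

BLOCK_SIZE = 4  # tokens per block; chosen small for the demo


def block_hashes(tokens, block_size=BLOCK_SIZE):
    """Hash each full block together with the previous block's hash.

    Chaining means a block's hash identifies the whole prefix up to that
    block, so a change anywhere earlier changes every later hash.
    A trailing partial block is not hashed.
    """
    hashes = []
    previous = ""
    full_length = len(tokens) - len(tokens) % block_size
    for start in range(0, full_length, block_size):
        chunk = tokens[start:start + block_size]
        payload = previous + "|" + "\x1f".join(chunk)
        previous = hashlib.sha256(payload.encode("utf-8")).hexdigest()
        hashes.append(previous)
    return hashes


class ToyPrefixCache:
    """Stands in for a KV block store: a set of block hashes."""

    def __init__(self):
        self.blocks = set()

    def process(self, tokens):
        """Return (reused_blocks, computed_blocks) for one request."""
        reused = computed = 0
        for block_hash in block_hashes(tokens):
            if block_hash in self.blocks:
                reused += 1
            else:
                computed += 1
                self.blocks.add(block_hash)
        return reused, computed


if __name__ == "__main__":
    system = "you are a helpful support agent for acme".split()
    cache = ToyPrefixCache()
    print(cache.process(system + "where is my order".split()))
    print(cache.process(system + "cancel my subscription now".split()))
    print(cache.process(["hi", "bob"] + system + "cancel my subscription now".split()))
```

The second request reuses the system-prompt blocks even though it comes from a different “user”. The third one prepends two tokens, which shifts every block boundary and forces a full recompute.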

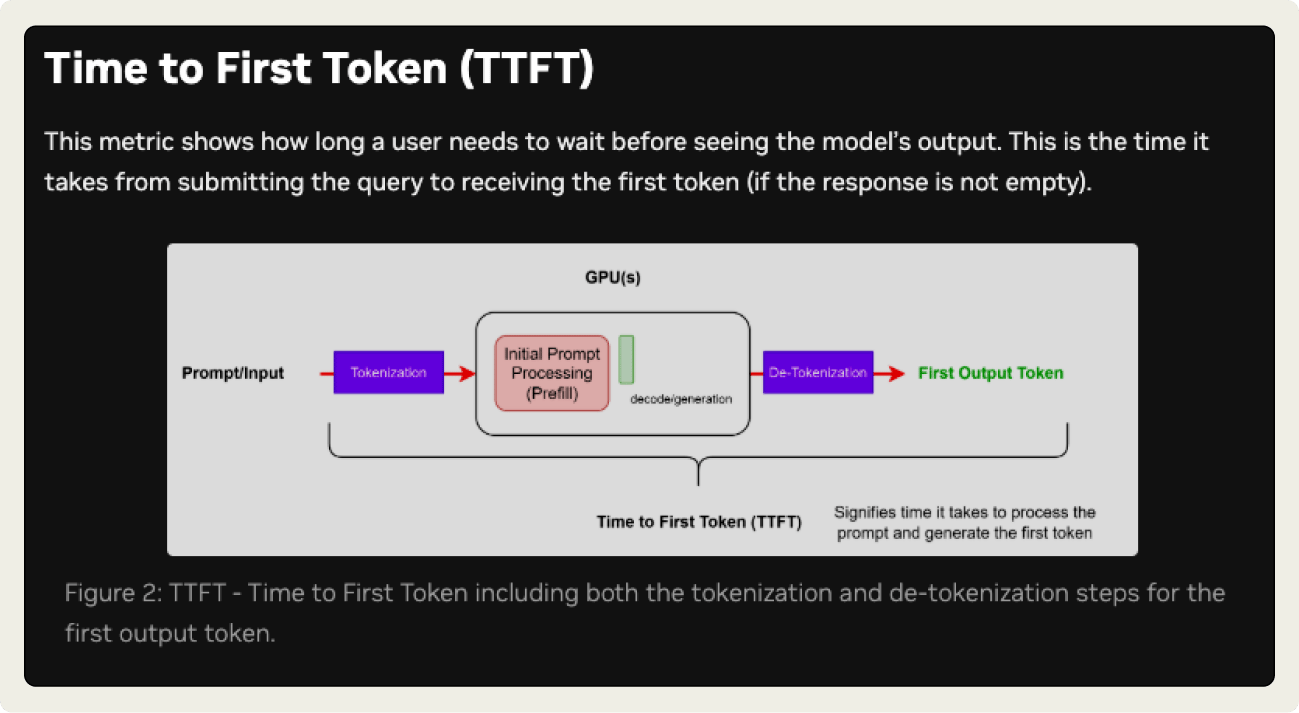

Behind the scenes - common LLM inference metrics

A simple blueprint any team can apply

If I were starting a new AI product tomorrow, I’d begin with this 6-step loop:

Instrument traces from day one.

Perform open coding until the fault taxonomy emerges.

Turn the top 3 categories into deterministic evals or judge evals.

Refactor prompts into a stable prefix + append-only memory.

Run evals in CI to detect regressions early.

Repeat every 2-4 weeks.

That loop replaces intuition with an engineering treadmill.

I’m attaching the exact artifact/output below. Use it as a reference, then run the same loop on your own system. You’ll learn more by reproducing it than by reading about it.

So what matters next, you might ask?

Evals are your lens on correctness. Caching is your lever on cost and latency.

Together, they turn AI development from: “Prompt until it feels right” into “Observe → Diagnose → Measure → Improve → Automate.”

Once this clicks, the excitement shifts to how predictable the model becomes when you treat it like a system.

Until next time,

Vaibhav 🤝🏻
