If you had to hire an engineer right now, who would you hire?

Two frontier models dropped in the same week. Gemini 3 on November 18. Claude Opus 4.5 on November 24.

We covered Gemini previously, and Opus 4.5 dropped right after.

Both claimed the top spot on LMArena and claimed to be better than ever at coding.

In fact, Anthropic said Opus 4.5 outscored every human candidate on its take-home hiring assignment.

So we put it to the test.

The benchmarks

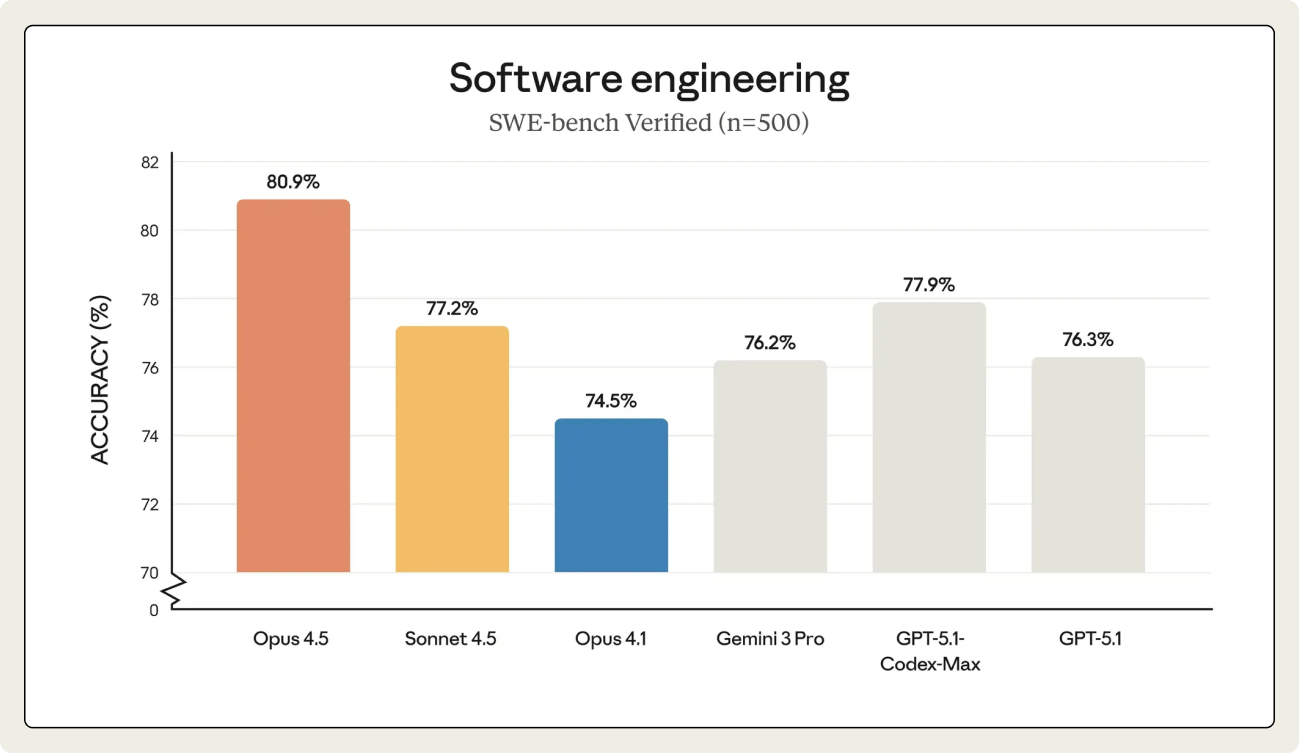

Opus 4.5 hit 80.9% on SWE-bench Verified.

First model to break 80%.

This benchmark tests whether models can fix real GitHub issues from open source projects.

The competition:

⦁ Sonnet 4.5: 76.6%

⦁ GPT-5.1-Codex-Max: 72-75%

⦁ Gemini 3 Pro: 74-78%

According to Anthropic, Opus 4.5 at a medium effort setting matches Sonnet 4.5's best SWE-bench Verified score while using 76% fewer output tokens.

Fewer tokens means lower costs and faster responses.

Unexpected price drop

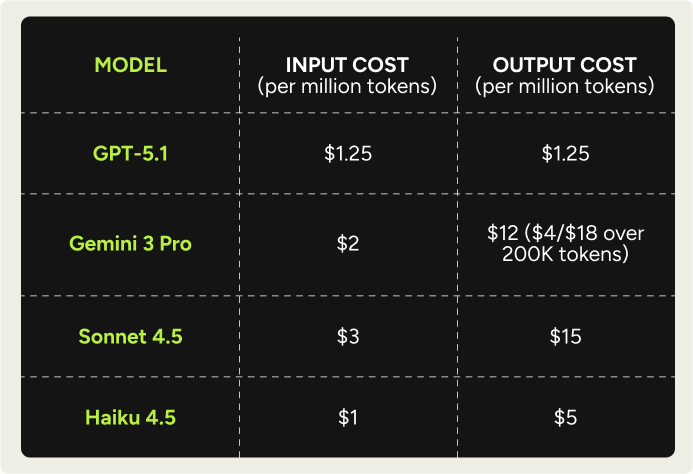

Opus 4.5 costs $5 per million input tokens and $25 per million output tokens.

The previous Opus 4.1 was $15 and $75.

That's a 67% price cut on a model that performs better across every benchmark.
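To make the arithmetic concrete, here is a minimal sketch that checks the price cut and compares the cost of one job under both price lists. The prices come from the figures above; the token counts are hypothetical and only there for illustration.

```python
# Prices in USD per million tokens, as quoted above.
OPUS_4_1 = {"input": 15.0, "output": 75.0}
OPUS_4_5 = {"input": 5.0, "output": 25.0}


def price_cut_percent(old: float, new: float) -> float:
    """Return the percentage drop from the old price to the new one."""
    return (old - new) * 100 / old


def job_cost(prices: dict, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one job at the given per-million-token prices."""
    total = input_tokens * prices["input"] + output_tokens * prices["output"]
    return total / 1_000_000


# Hypothetical workload (an assumption, not a measurement): 200k tokens in, 50k out.
old_cost = job_cost(OPUS_4_1, 200_000, 50_000)
new_cost = job_cost(OPUS_4_5, 200_000, 50_000)

print(f"Input price cut:  {price_cut_percent(15.0, 5.0):.1f}%")
print(f"Output price cut: {price_cut_percent(75.0, 25.0):.1f}%")
print(f"Same job: ${old_cost:.2f} on Opus 4.1 vs ${new_cost:.2f} on Opus 4.5")
```

Because input and output prices dropped by the same ratio, the saving is the same whatever the mix of input and output tokens.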

Current pricing landscape:

Opus remains the most expensive, but the gap just narrowed drastically.

Testing methodology

We ran two tests. Same prompts for Gemini 3 and Opus 4.5.

Test 1 measures depth and completeness. Does the model deliver executable code, or only strategic advice?

Test 2 measures constraint adherence and practical judgment. Can the model stay within limits while providing useful guidance?

Test 1: Django Monolith Migration

The Prompt: "I'm migrating a 50k LOC Django monolith to microservices. The codebase has 12 Django apps with circular import dependencies, a single PostgreSQL DB with 47 tables, no existing API documentation, tests covering ~40% of code. Create a phased migration plan. For each phase specify: what gets extracted, what breaks, how to maintain backwards compatibility, and the rollback strategy. Assume 2 senior devs and 3 months."

Gemini 3: 150 lines

Four-phase strategy document. Tool recommendations: pydeps, Kong, Debezium. Clean structure. Ends by asking if you want more detail on Phase 2.

Opus 4.5: 760 lines

Bash scripts for dependency mapping. SQL queries for foreign key analysis. Python code for saga patterns. Docker-compose.yml ready to run. Integration tests. On-call runbooks.
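We are not reproducing either model's output here. To give a feel for what "dependency mapping" means for a monolith with circular imports, here is a minimal sketch of our own that finds one import cycle in an app-level dependency graph. The app names and the graph itself are hypothetical; in a real codebase you would build the graph from the actual import statements first.

```python
from __future__ import annotations


def find_cycle(graph: dict[str, set[str]]) -> list[str] | None:
    """Return one import cycle as a list of app names, or None if there is none."""
    WHITE, GRAY, BLACK = 0, 1, 2  # unvisited, on the current path, fully explored
    color = {node: WHITE for node in graph}
    stack: list[str] = []

    def visit(node: str) -> list[str] | None:
        color[node] = GRAY
        stack.append(node)
        for dep in sorted(graph.get(node, ())):
            state = color.get(dep, WHITE)
            if state == GRAY:
                # Back edge: the cycle is the current path from dep onward.
                return stack[stack.index(dep):] + [dep]
            if state == WHITE:
                found = visit(dep)
                if found:
                    return found
        stack.pop()
        color[node] = BLACK
        return None

    for node in sorted(graph):
        if color[node] == WHITE:
            found = visit(node)
            if found:
                return found
    return None


# Hypothetical Django apps: each key imports from the apps in its set.
apps = {
    "orders": {"billing"},
    "billing": {"users"},
    "users": {"orders"},
    "catalog": set(),
}

cycle = find_cycle(apps)
print(" -> ".join(cycle) if cycle else "no cycles")
# billing -> users -> orders -> billing
```

Any cycle it reports is a set of apps that cannot be extracted one at a time without first breaking an import.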

Both models got the core insight right. Full migration in 3 months is unrealistic. Aim for 2-3 extracted services instead.

Opus went further. Added a pre-migration assessment week. Risk registry with likelihood and impact scores. Explicit breakdown of achievable versus unrealistic goals.

Gemini tells you what to do. Opus shows you how to do it.

Test 2: Production Database Crisis

The Prompt: "My production database is down. Walk me through what to do first. 50 words max."

Gemini 3: 70-80 words

Five steps: status checks, connectivity, logs, restart, stakeholder communication.

Offers to help interpret error logs. Exceeds the word limit by 40-60%.

Opus 4.5: ~50 words

Five steps with a command example (systemctl status). Includes disk space check. Ends with "What's your database system?" Meets the constraint.

Both gave similar triage logic.

Check if running, read logs, verify connectivity, restart if safe.

The difference: constraint adherence and practical details.

Opus stayed within limits. Gemini overshot by 40-60%.

Opus also dropped a real command and flagged disk space. Gemini added stakeholder communication. Smart but arguably not "what to do first" in technical triage.
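If you want to run the same constraint check on your own prompts, a minimal sketch looks like this. It counts whitespace-separated words, which is an assumption on our part; other counting rules will give slightly different numbers. The sample responses are placeholder text, not the models' actual answers.

```python
def overshoot_percent(text: str, limit: int) -> float:
    """Percent by which the word count exceeds the limit (0.0 if within it)."""
    words = len(text.split())
    return max(0, words - limit) * 100 / limit


# Placeholder responses of 50, 70 and 80 words.
within_limit = " ".join(["word"] * 50)
low_end = " ".join(["word"] * 70)
high_end = " ".join(["word"] * 80)

print(overshoot_percent(within_limit, 50))  # 0.0
print(overshoot_percent(low_end, 50))       # 40.0
print(overshoot_percent(high_end, 50))      # 60.0
```

A 70-80 word answer to a 50-word prompt lands 40% to 60% over the limit.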

What it means

For the junior dev: The "grunt work" (writing boilerplate, basic migrations) is gone.

To get hired in 2025, writing code won’t suffice.

You need to prove you can audit the code of a machine that is faster than you.

For the leaders: In our migration test, Opus cost a few dollars to do work that would have taken a human two days.

The most expensive compute is still cheap compared to the cheapest salary.

When to use Opus

Anthropic says Opus 4.5 is their most capable model. But at $5/$25 per million tokens, it's still the most expensive.

When does paying more get you more?

We think it comes down to three scenarios:

1. Architecture decisions - When you need working code, not just advice

2. Complex debugging - When the problem spans multiple systems and files

3. Feature scoping - When tradeoffs matter more than speed

That’s the theory.

The two tests above were our way of checking whether Opus 4.5 actually delivers on it.

Who gets the job?

For the last two years, we’ve treated AI as a "Copilot", something that tab-completes our thoughts.

Opus 4.5 and Gemini 3 prove that era is over.

AI is now completing your Jira tickets instead of just sitting in your extension bar and helping you finish sentences.

If you want to keep your job, learn to supervise both.

Which model did you end up hiring then? Let me know in the replies.

Until next time,

Vaibhav 🤝🏻
