What do you use AI for?

Y’all seem to have loved the breakdown of the Anthropic Report.

And OpenAI came out with two more reports and I went down a rabbit hole reading them.

One measured what 700 million ChatGPT users actually do.

The other tested AI on 1,320 professional tasks from financial analysts, mechanical engineers, nurses, and lawyers.

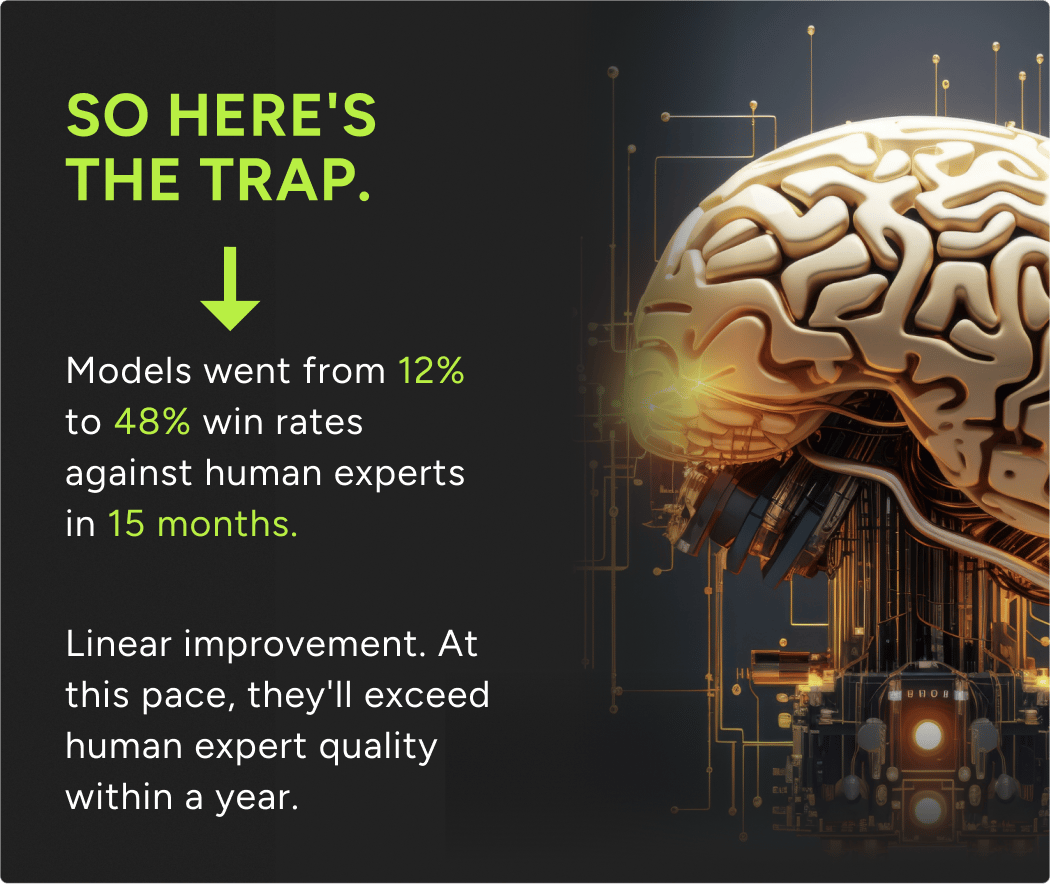

AI just matched human experts at professional work. And we're using it in exactly the wrong way.

I can go on and on but before that, let’s cover what happened since the last edition 👀

AI Tools That Made Me Question My Life Choices This Week

1. SnapDeck: Lovable for Slides

SnapDeck creates professional presentations with AI-powered slide generation, intuitive editing, and customizable designs. Build complete decks by typing in plain English or pulling content from websites and Notion. Features automated styling, animations, version control, and interactive web slides.

My take: Another AI presentation tool claiming to kill PowerPoint, except this one's tagline is literally "Lovable for Slides." When your entire pitch is being the Lovable equivalent for decks, you're basically admitting you're riding someone else's hype.

2. Creatium: Interactive AI Learning Platform

Creatium converts passive video lectures into interactive experiences with AI coaching, simulations, and role-plays. The platform claims 28% improvement in test scores and 5-8x faster content creation. Creates gamified learning content from simple text prompts, targeting corporate training and educational institutions.

My take: Creatium just weaponized boredom against itself. They're turning mind-numbing corporate training videos into something people might actually remember instead of sleep through.

3. Perplexity Search API: Developer Access to Web-Scale Search

Perplexity launched a Search API giving developers access to its infrastructure that indexes hundreds of billions of web pages. The API provides real-time updates, sub-document precision, and structured responses designed for AI applications. Priced at $5 per 1,000 requests with early customers including Zoom and Copy.ai.

My take: Perplexity just declared war on Google's search monopoly by giving every developer the keys to web-scale search. When a startup offers what Google hoards for itself, you know the internet's power structure is shifting.
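Quick napkin math on that $5 per 1,000 requests, since it's easier to judge with a real volume plugged in. This is only a sketch: the function name and the 250,000-request example are mine, and it assumes flat pricing with no tiers, minimums, or discounts, which the announcement summary above doesn't confirm either way.

```python
# Price quoted above: $5 per 1,000 requests.
PRICE_PER_1000_REQUESTS_USD = 5.0


def search_api_cost(requests: int) -> float:
    """Estimated spend in USD, assuming flat pricing (hypothetical helper)."""
    return requests / 1000 * PRICE_PER_1000_REQUESTS_USD


# Hypothetical volume: an app making 250,000 searches a month.
monthly_cost = search_api_cost(250_000)
print(f"${monthly_cost:,.2f} per month")
```

Swap in your own request count to see where it lands for your use case.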

Okay, getting back to the reports.

Here's what broke my brain about these papers.

OpenAI recruited professionals averaging 14 years of experience.

Goldman Sachs. Meta. Department of Defense.

Had them create real tasks from their jobs.

Then had OTHER experts blindly grade AI outputs versus human outputs.

The AI's work matched the experts'.

Let that sink in.

Not "could theoretically do this someday." Right now. Today.

On work that takes professionals 7 hours on average to complete.

Work worth $400 billion in annual wages.

And yet 73% of ChatGPT usage isn't work at all.

Yup…

We finally have AI that can match human experts at professional work, and most people are using it to...ask for recipe suggestions?

Get practical guidance?

Search for information?

It gets weirder.

When people DO use ChatGPT for work, 56% of messages are "complete this task for me" versus 35% "help me think through this."

And that task-completion number?

Jumped from 27% to 39% in eight months.

But here's the part that doesn't make sense: users rate the "help me think" interactions as HIGHER quality.

They prefer them. They're just not using them as much.

Why would you increasingly use the thing you rate as lower quality?

Because it's faster. Because you don't have to think. Because the output is "good enough."

And 46% of all ChatGPT users are under 26.

So in ten years, when they're the senior professionals, the managers, the decision-makers...what expertise will they have actually built?

The researchers tested this, by the way.

They took professional tasks and deliberately removed context.

Didn't tell the AI where to find data in reference files. Didn't specify how to approach the problem. Made it figure things out like a real professional would need to.

Performance collapsed.

Turns out AI is incredible at following detailed instructions.

Terrible at handling ambiguity.

And professional work? It's MOSTLY ambiguity.

Your client doesn't hand you perfect specifications.

Your boss doesn't give you step-by-step instructions.

You have to figure out what problem actually needs solving.

What questions matter. What tradeoffs exist.

And nobody's talking about this.

Let me show you something else that's strange.

When ChatGPT launched, 80% of users had typically masculine names.

Today? 52% have typically feminine names. The gender gap reversed.

But women use ChatGPT more for writing and practical guidance.

Men use it more for technical tasks and multimedia.

And adoption is exploding 4x faster in low- and middle-income countries than wealthy ones. Access is democratizing. Everyone's getting the same tools.

But countries with higher AI adoption use it more for "Asking" (collaborative, learning-focused).

Countries with lower adoption default to "Doing" (just give me the answer).

Access isn't the same as use case.

Here's what really bothers me.

The GDPval researchers found something ridiculous: GPT-5 put black squares in over 50% of its PDFs. Formatting errors in 86% of PowerPoints.

Then they gave it one simple prompt: "Check your formatting by rendering files as images."

Black squares vanished.

PowerPoint errors dropped to 64%.

Win rates jumped 5 percentage points.
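If you send prompts from a script, that fix is easy to bake in. Here's a minimal sketch, assuming you build your prompts as plain strings. The helper name is made up, and the instruction text is the wording quoted above, not necessarily the researchers' exact prompt.

```python
# Self-check instruction, using the wording quoted in this article.
RENDER_CHECK = "Check your formatting by rendering files as images."


def with_render_check(task_prompt: str) -> str:
    """Append the self-check instruction to a task prompt (hypothetical helper)."""
    return f"{task_prompt.rstrip()}\n\n{RENDER_CHECK}"


prompt = with_render_check("Build a 10-slide deck summarizing Q3 results.")
print(prompt)
```

It's one extra line on every file-producing request. It only works if the model can actually render its own files, so treat it as a nudge, not a guarantee.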

The models are WAY more capable than most people know how to unlock.

Think about what that means. We have tools that can match human experts.

But only if you know how to prompt them correctly.

How to scaffold them. How to check their work.

And those skills? They come from understanding the work itself.

From having done it enough times to recognize good output from bad.

From building judgment through experience.

Great, right? Productivity gains. Let AI take the first pass, have a human review it, and the work gets done 1.4x faster at 1.6x lower cost.

Except that assumes you can review the work. Spot the errors. Know what good looks like. Specify problems clearly when context is missing.

And we're watching an entire generation skip building those capabilities.

The data shows educated users in highly-paid occupations use AI differently. They use it more for "Asking." They're using AI to get better at work they already know how to do.

Everyone else is using AI to complete work they're not learning to evaluate.

Both groups have access. Both are "using AI."

But only one is building the capability to specify good work, recognize bad output, and handle the messy reality where nothing is well-defined and ambiguity is everywhere.

That's the actual problem.

Not whether AI can do the work. It can. We have the data now.

The question is: who decides what work to do? Who evaluates if it's actually good? Who handles the situations where the context is missing and someone needs to figure out what problem we're even trying to solve?

Those are judgment calls. They come from experience. From doing the work. From making mistakes and learning from them.

And if you skip that part because AI can "do it for you," you end up dependent on a tool for decisions it's not equipped to make.

My answer

I check AI output the same way I'd check a junior employee's work. Because I did the work myself first, thousands of times, until I built the judgment to tell good from bad.

What's yours?
